What is technical SEO? It’s the behind-the-scenes work that makes your website easy for search engines to crawl, understand, and index. From website architecture to page speed and security, technical SEO helps keep your WordPress site optimized for search engines, which can help you rank higher in search results. This isn’t about keyword stuffing or flashy design; it’s about building a strong foundation for long-term success.

We’ll explore the crucial components, from crawlability to mobile-friendliness, to understand how technical SEO affects your WordPress site.

This comprehensive guide covers the essential elements of technical SEO, providing practical insights and actionable strategies for improving your WordPress site’s visibility and performance. We’ll break down how website architecture, page speed, mobile-friendliness, and security all contribute to your site’s search engine ranking and user experience. You’ll also learn how to troubleshoot common issues and apply best practices to improve your rankings and attract more organic traffic.

Defining Technical SEO

Technical SEO is the practice of optimizing your website’s technical infrastructure to improve its visibility in search engine results pages (SERPs). It’s about ensuring search engine crawlers can easily access, understand, and index your website’s content. It differs from other approaches, such as content optimization or link building, because it focuses on the backend elements of your site.

Technical SEO is crucial because a poorly optimized website can hinder search engine crawlers and prevent them from properly indexing your content, even if that content is high-quality.

This ultimately affects your website’s ranking, limiting its visibility to potential customers.

Core Components of Technical SEO

Technical SEO encompasses several interconnected elements. These elements work together to ensure a seamless user experience and efficient search engine crawling. Understanding and optimizing them is fundamental to improving your website’s overall performance.

- Crawlability: Ensuring search engine crawlers can access and follow all pages on your website is paramount. This involves creating a sitemap, using appropriate robots.txt directives, and fixing broken links.

- Indexability: Search engines must be able to understand and index the content of your website’s pages. Key aspects include using appropriate meta tags, schema markup, and avoiding duplicate content.

- Website Speed: A fast-loading website improves user experience and positively impacts search engine rankings. Optimizing images, leveraging browser caching, and minimizing HTTP requests are crucial steps.

- Mobile Friendliness: With a significant portion of searches occurring on mobile devices, ensuring your website is responsive and optimized for mobile viewing is essential. A mobile-friendly design is vital for user experience and search engine rankings.

- URL Structure: Well-structured URLs are crucial for crawlers to understand the hierarchy and organization of your website. This also aids users in easily navigating the site.

Relationship Between Technical SEO and Website Performance

A technically sound website translates directly into better performance. Fast loading times, easy navigation, and mobile responsiveness contribute to a positive user experience, encouraging longer visits and higher engagement rates. This, in turn, positively influences search engine rankings. Search engines often prioritize websites that provide a seamless user experience.

Importance of Technical SEO in Search Engine Visibility

Technical SEO directly affects your website’s visibility in search engine results. A well-optimized website is more likely to be crawled, indexed, and ranked higher by search engines. This improved visibility leads to more organic traffic, which can drive conversions and revenue.

Comparison of Technical SEO with Other Types of SEO

| Aspect | Technical SEO | Content SEO | Link Building |
|---|---|---|---|
| Focus | Website architecture, crawlability, and site speed | Creating high-quality, relevant content | Earning backlinks from reputable sources |
| Methods | Optimizing sitemaps, robots.txt, URL structure, and site speed | Creating blog posts, articles, and other engaging content | Building relationships with other websites, guest blogging, and earning citations |
| Goal | Improve search engine crawlability and indexability | Attract and engage the target audience | Increase domain authority and credibility |

Website Architecture and Crawlability

A well-structured website is crucial for search engine optimization (SEO). Search engines rely on understanding a site’s architecture to crawl and index its content effectively. A clear, logical structure lets search engines navigate and process information easily, which supports better rankings and more organic traffic. A well-organized site also improves the user experience, which search engines take into account.

Search engines use bots (crawlers) to discover and process web pages. These bots follow links from one page to another, building an index of the web. The ease with which they can move through your website directly affects your site’s visibility in search results, and a well-organized architecture significantly improves crawl efficiency, enabling search engines to find and process your content quickly.

Significance of Website Architecture for Search Engines

Website architecture significantly impacts search engine crawlability. A well-organized site with clear navigation allows search engine bots to easily discover and understand the hierarchy of pages, content, and relationships between them. This enables effective indexing, which is crucial for improved search engine rankings. Conversely, a poorly structured site can hinder crawling, leading to incomplete indexing and lower rankings.

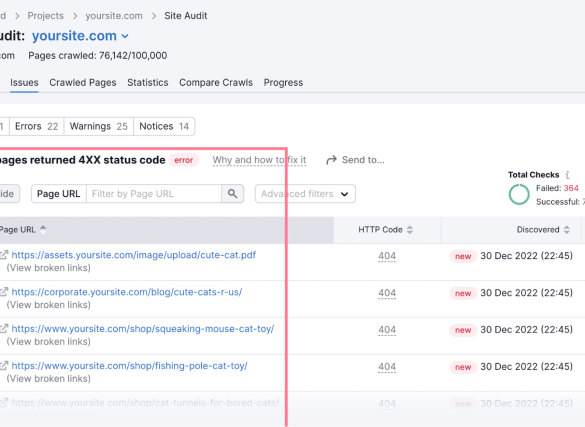

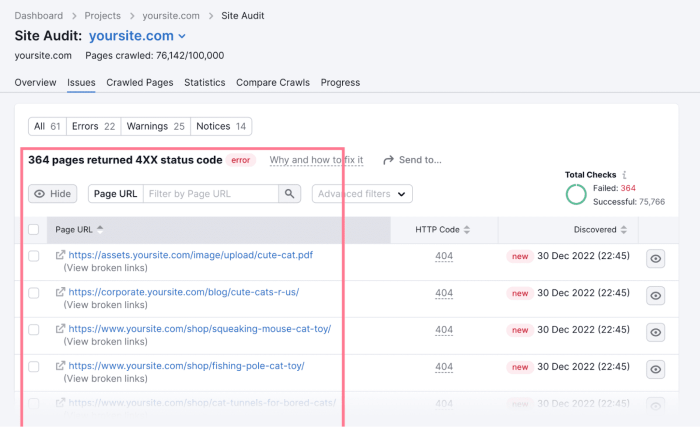

How Sitemaps Impact Search Engine Crawling

Sitemaps are essential tools for guiding search engine crawlers through your website. They act as a roadmap, listing the important pages on your site. By submitting a sitemap (for example, through Google Search Console), you tell search engines which pages you want them to find and index. This can speed up discovery and helps keep important pages from being overlooked, although a sitemap does not guarantee that every listed URL will be crawled or indexed.

Creating an Optimal Sitemap

A well-crafted sitemap should list the canonical, indexable URLs you want to appear in search, especially pages that are new or recently updated. It should follow the XML sitemap protocol, which allows up to 50,000 URLs (or 50 MB uncompressed) per file; larger sites can split their URLs across several sitemaps referenced from a sitemap index. Keep the sitemap up to date, and leave out URLs that redirect, return errors, are marked noindex, or duplicate other pages, since these waste crawler resources and send mixed signals.
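The sketch below shows one way to generate a basic XML sitemap with Python’s standard library. It assumes you already have a list of canonical, indexable URLs with their last-modified dates; the `pages` input and the example URLs are hypothetical. On WordPress, the built-in sitemap added in version 5.5 or an SEO plugin usually does this for you.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # limit set by the sitemap protocol


def build_sitemap(pages):
    """Return an XML sitemap string for (url, last_modified) pairs.

    Only canonical, indexable URLs should be passed in; redirects,
    error pages, and noindex pages are left out on purpose.
    """
    pages = list(pages)
    if len(pages) > MAX_URLS_PER_FILE:
        raise ValueError("Too many URLs: split them across files and use a sitemap index")

    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc  # ElementTree escapes "&" and "<"
        ET.SubElement(url_el, "lastmod").text = last_modified.isoformat()

    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", date(2024, 1, 15)),
        ("https://example.com/blog/what-is-technical-seo/", date(2024, 2, 1)),
    ]))
```

Writing the XML declaration by hand keeps the output predictable. ElementTree still handles escaping of special characters in URLs.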


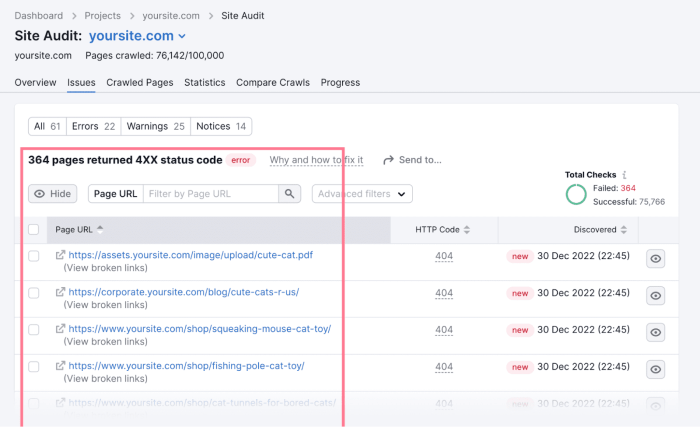

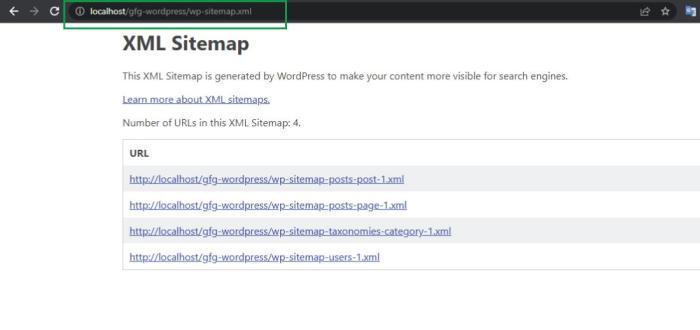

Common Crawl Errors and Solutions

Several crawl errors can hurt your website’s visibility. One of the most common is the “404 Not Found” error, which usually points to a broken link or a deleted page. These errors tell search engines that a page is unavailable. Important pages that return 404 drop out of the index, and broken internal links waste crawler visits and frustrate users. Regularly checking for and fixing these errors ensures that search engines can reach all necessary content and improves the overall crawl experience.

Another frequent problem is heavy reliance on JavaScript to render content and links, which can prevent crawlers from fully understanding a page’s structure and content.
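Once a crawler such as Screaming Frog (or your own script) has recorded which pages link where and what status code each URL returned, finding broken internal links is a simple lookup. The sketch below assumes that data is already in two hypothetical dictionaries, `link_graph` and `statuses`. It groups each broken target with the pages that link to it, so you can update those links or add a 301 redirect.

```python
BROKEN_STATUSES = {404, 410}  # Not Found and Gone


def find_broken_links(link_graph, statuses):
    """Map each broken target URL to the pages that link to it.

    link_graph: {source_url: [target_url, ...]} from a crawl export
    statuses:   {url: http_status_code} for every URL that was fetched
    URLs missing from `statuses` were never fetched and are skipped.
    """
    broken = {}
    for source, targets in link_graph.items():
        for target in targets:
            if statuses.get(target) in BROKEN_STATUSES:
                broken.setdefault(target, []).append(source)
    return broken


if __name__ == "__main__":
    link_graph = {
        "/": ["/about/", "/old-post/"],
        "/about/": ["/old-post/", "/contact/"],
    }
    statuses = {"/": 200, "/about/": 200, "/old-post/": 404, "/contact/": 200}
    for target, sources in find_broken_links(link_graph, statuses).items():
        print(f"{target} is broken; linked from {', '.join(sources)}")
```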

Optimizing URL Structures for Search Engines

URL structures should be concise, descriptive, and user-friendly. Use keywords relevant to the page’s content to make it easier to discover. Clear, descriptive URLs help both search engines and users understand the context of a page, improving crawl efficiency and user experience. Short, keyword-rich URLs are also easier to read and share, which supports engagement and rankings.
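To illustrate, here is a small slug generator that turns a page title into a short, readable URL path segment. The rules it applies are one reasonable convention, not a search engine requirement: ASCII-only, lowercase, hyphen-separated, and capped at a hypothetical `max_words` limit. WordPress builds similar slugs from post titles automatically.

```python
import re
import unicodedata


def slugify(title, max_words=8):
    """Convert a title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the accents.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Remove apostrophes so "Beginner's" becomes "beginners", not "beginner-s".
    ascii_text = ascii_text.replace("'", "")
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words[:max_words])


if __name__ == "__main__":
    print(slugify("What Is Technical SEO? A Beginner's Guide"))
```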

Different Types of Sitemaps and Their Use Cases

| Sitemap Type | Description | Use Case |
|---|---|---|
| XML Sitemap | A structured file listing the URLs on your website. | The primary way to help search engine crawlers find pages to index. |
| HTML Sitemap | A human-readable page on your website that links to its main sections. | Gives users an overview of your website’s content. |
| Video Sitemap | Lists video content along with details such as title, description, and thumbnail. | Helps search engines discover and understand videos hosted on or embedded in your site. |
| News Sitemap | Provides information about recently published news articles on your site. | Helps Google News and other search engines find news content quickly. |

Crawling and Indexing

Search engines use sophisticated robots, often called crawlers or spiders, to explore the vast expanse of the web. These automated programs systematically visit web pages, following links to discover new content and update their vast databases. This process, crucial for search results, relies on a multitude of factors, including website architecture, sitemaps, and the robots.txt file. Understanding how crawling and indexing work is paramount for ensuring your website is discoverable by search engines.

Search Engine Crawling Process

Search engine crawlers follow a structured process to discover and index web pages. They start with a seed list of known websites and follow the links found on those pages. This iterative process continues, exploring more pages and links as they are encountered. The crawlers prioritize pages based on various factors, including the frequency of updates, the importance of the site, and the presence of sitemaps.

This process ensures that frequently updated and relevant content is more likely to be discovered and indexed sooner.
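The link-following process above is essentially a breadth-first search. The simplified sketch below runs that search over an in-memory link graph to compute each page’s click depth from the homepage. It also lists orphan pages that no internal link reaches. The `link_graph` dictionary is hypothetical, the kind of data you might get from a crawl export. Real crawlers also fetch pages over the network, obey robots.txt, and schedule URLs by priority, and none of that is modeled here.

```python
from collections import deque


def click_depths(link_graph, seed):
    """Breadth-first search from `seed`; returns {url: clicks_from_seed}."""
    depths = {seed: 0}
    queue = deque([seed])
    while queue:
        page = queue.popleft()
        for linked in link_graph.get(page, []):
            if linked not in depths:  # in BFS, the first visit is the shortest path
                depths[linked] = depths[page] + 1
                queue.append(linked)
    return depths


def orphan_pages(known_urls, depths):
    """Pages you know exist (e.g. from the sitemap) that no link reaches."""
    return sorted(set(known_urls) - set(depths))


if __name__ == "__main__":
    link_graph = {
        "/": ["/blog/", "/about/"],
        "/blog/": ["/blog/post-1/"],
        "/blog/post-1/": ["/blog/post-2/"],
    }
    depths = click_depths(link_graph, "/")
    print(depths)
    print(orphan_pages(["/", "/blog/", "/landing/"], depths))
```

Pages buried many clicks deep, or not reachable at all, are the ones most likely to be crawled late or missed.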

Common Issues Hindering Search Engine Crawling

Several factors can reduce the effectiveness of search engine crawling and, with it, a website’s visibility. These include technical errors, server issues, and poor site architecture. Crawl errors generally fall into two groups. Server errors use 5xx status codes, such as 500 Internal Server Error or 503 Service Unavailable. Client errors use 4xx status codes, such as 404 Not Found. Knowing which group an error belongs to is the first step toward finding its root cause.

Diagnosing and Fixing Crawl Errors

Diagnosing crawl errors requires a systematic approach, often involving the use of tools provided by search engines and third-party services. These tools can identify specific errors, such as 404 errors, server response issues, or broken links, offering insights into the reasons behind the problems. Troubleshooting these errors often involves resolving server-side issues, fixing broken links, and improving website structure to enhance crawlability.

Impact of robots.txt on Crawling

The robots.txt file gives search engine crawlers instructions about which parts of your website they may crawl. It is useful for keeping crawlers out of low-value areas such as admin or cart pages and for pointing them to your sitemap. It is not a security measure, however. The file is public, and a URL blocked in robots.txt can still be indexed if other pages link to it. To keep a page out of search results, use a noindex directive on a page that crawlers are allowed to fetch. Configure robots.txt carefully so that it does not block pages or resources you want crawled.
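Python’s standard library includes `urllib.robotparser`, which is handy for spot-checking whether a robots.txt file blocks URLs you care about. The rules and URLs below are hypothetical. One caveat: this parser applies the first rule that matches a path, while Google applies the most specific (longest) matching rule. Results can therefore differ when `Allow` and `Disallow` lines overlap, so treat this as a quick sanity check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""


def load_rules(robots_txt):
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for url in (
        "https://example.com/blog/what-is-technical-seo/",
        "https://example.com/wp-admin/options.php",
    ):
        verdict = "allowed" if rules.can_fetch("Googlebot", url) else "blocked"
        print(f"{verdict}: {url}")
    print("Sitemaps:", rules.site_maps())
```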

Common Crawl Errors and Potential Causes

| Crawl Error | Potential Causes |
|---|---|
| 404 errors (Page Not Found) | Broken links, missing files, incorrect URLs, or changes in website structure. |
| Server Errors (e.g., 500 errors) | Server overload, database issues, or temporary server downtime. |
| Slow loading times | Large images, inefficient code, or server response delays. |
| Blocked by robots.txt | Incorrect configuration of the robots.txt file, blocking important pages. |
| Crawl depth issues | Poor site structure, insufficient internal linking, or complex website architecture. |

Page Speed and Performance

Page speed is a critical factor in both user experience and search engine rankings. A slow-loading website frustrates visitors, leading to high bounce rates and lost conversions. Search engines, recognizing the importance of user experience, prioritize fast-loading sites in their results. Faster websites generally translate to a better user experience, and search engines reward this.

Importance of Page Speed for Search Rankings

Page speed affects search engine rankings. Slow loading times hurt the user experience, increasing bounce rates and reducing time on site. Google includes page experience signals, such as its Core Web Vitals metrics for loading, responsiveness, and visual stability, in its ranking systems. Faster pages can therefore have an edge, although relevance and content quality still carry more weight. Faster pages also tend to keep visitors engaged, which benefits conversions as well as search performance.

Measuring Page Speed and Performance

Accurate measurement of page speed and performance is crucial for optimization. Tools such as Google PageSpeed Insights combine lab tests with field data from real Chrome users. They report Google’s Core Web Vitals: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). These reports identify bottlenecks, which lets you make targeted improvements, and they also cover loading times, server response times, and other performance indicators.
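As a worked example, the sketch below rates measurements against Google’s published Core Web Vitals thresholds:

- Largest Contentful Paint (LCP) is good at 2.5 s or less and poor above 4 s.
- Interaction to Next Paint (INP) is good at 200 ms or less and poor above 500 ms.
- Cumulative Layout Shift (CLS) is good at 0.1 or less and poor above 0.25.

Google evaluates these metrics at the 75th percentile of real-user visits, so the code computes the 75th percentile of a list of samples first. The sample values are made up, and the nearest-rank percentile used here is one of several reasonable methods.

```python
import math

# metric: (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def percentile_75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric, value):
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    lcp_samples = [1800, 2100, 2300, 2400, 2600, 2900, 3100, 4500]  # hypothetical
    p75 = percentile_75(lcp_samples)
    print(f"LCP p75 = {p75} ms -> {rate('LCP', p75)}")
```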

Techniques for Optimizing Page Load Times

Several techniques can optimize page load times. Minimizing HTTP requests reduces the time needed to fetch resources. Combining CSS and JavaScript files into fewer files minimizes requests. Caching strategies store frequently accessed resources, speeding up future loads. Using a Content Delivery Network (CDN) distributes website content across a global network of servers, improving response times for users worldwide.
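Browser caching is controlled mostly through the `Cache-Control` response header. The sketch below picks a header value for each request path under one common, hypothetical policy:

- Static assets whose file names contain a content hash (for example `app.3f9a1c.css`) are cached for a year and marked `immutable`.
- Unversioned static files get a shorter lifetime.
- HTML uses `no-cache`, which lets browsers store the page but makes them revalidate it before reuse.

The file-type list and the one-day lifetime are assumptions to adapt to your site. On WordPress, caching headers are usually set by a caching plugin, the web server, or a CDN.

```python
import re
from pathlib import PurePosixPath

ONE_YEAR = 31_536_000  # seconds (365 days)
ONE_DAY = 86_400       # seconds
STATIC_SUFFIXES = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}
# Hypothetical naming convention: a hex content hash before the extension.
FINGERPRINT = re.compile(r"\.[0-9a-f]{6,}\.\w+$")


def cache_control_for(path):
    """Return a Cache-Control header value for a request path."""
    name = PurePosixPath(path).name
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_SUFFIXES and FINGERPRINT.search(name):
        # The URL changes whenever the content changes, so long caching is safe.
        return f"public, max-age={ONE_YEAR}, immutable"
    if suffix in STATIC_SUFFIXES:
        return f"public, max-age={ONE_DAY}"
    # HTML and everything else: store, but revalidate with the server before reuse.
    return "no-cache"


if __name__ == "__main__":
    for path in ("/assets/app.3f9a1c.css", "/images/logo.png", "/blog/what-is-technical-seo/"):
        print(path, "->", cache_control_for(path))
```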

Image Optimization Best Practices

Image optimization is essential for fast page loading. Compressing images reduces file sizes without significant quality loss. Using appropriate image formats like WebP (a modern format that often offers better compression than JPEG) can further optimize file sizes. Optimizing image dimensions for the intended use reduces file sizes without affecting visual quality. These techniques lead to quicker loading times.
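Serving an image at the size it is actually displayed avoids sending pixels the browser will throw away. The arithmetic sketch below scales an image to a maximum display width while keeping its aspect ratio. It then builds an HTML `srcset` value so the browser can choose a smaller file on smaller screens. The `hero-800w.webp` naming scheme and the candidate widths are hypothetical. The actual resizing and WebP encoding would be done by an image library or a build step. WordPress already generates resized copies and `srcset` attributes for uploaded images.

```python
def fit_width(width, height, max_width):
    """Scale (width, height) down to max_width, keeping the aspect ratio."""
    if width <= max_width:
        return width, height  # never upscale
    return max_width, round(height * max_width / width)


def build_srcset(base_name, original_width, candidate_widths, ext="webp"):
    """Build a srcset string, skipping widths larger than the original."""
    usable = sorted(w for w in candidate_widths if w <= original_width)
    return ", ".join(f"{base_name}-{w}w.{ext} {w}w" for w in usable)


if __name__ == "__main__":
    print(fit_width(4000, 3000, 1200))
    print(build_srcset("hero", 1600, [480, 800, 1200, 2000]))
```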

Impact of Server Response Time on Search Rankings

Server response time directly influences page load times, because the browser cannot start rendering a page until the server begins sending it. A slow server therefore delays every later step, which can drag down metrics such as Largest Contentful Paint and, with them, rankings. Servers that respond slowly or return errors can also lead search engines to crawl the site less often. Optimized hosting and caching keep response times low, which benefits both users and search visibility.

Page Speed Testing Tools

| Tool | Features | Pros | Cons |
|---|---|---|---|
| Google PageSpeed Insights | Analyzes page speed using Google’s performance metrics (including Core Web Vitals), provides recommendations, and reports mobile and desktop results separately. | Free, widely used, provides actionable recommendations, includes real-user field data when available. | Limited advanced features; relies on Google’s metrics. |
| GTmetrix | Provides a detailed page speed report with performance metrics, a request waterfall, and recommendations. | Detailed report, many performance metrics, actionable recommendations, user-friendly interface. | Free plan is limited; more test locations and advanced features require a paid plan. |
| WebPageTest | Tests from many locations worldwide and provides a thorough analysis of page speed. | Detailed analysis; useful for sites with geographically diverse users. | More technical; requires more user knowledge. |
| Pingdom | Offers a performance analysis with reports on load times and server response. | User-friendly interface, clear reports on load time and server response. | Monitoring features are subscription-based; analysis may be less detailed than other tools. |

Mobile-Friendliness and Responsiveness

In today’s digital landscape, a significant portion of online traffic comes from mobile devices, so a website must adapt seamlessly to different screen sizes and orientations. Failing to prioritize mobile-friendliness can lead to a poor user experience, hurting both user engagement and search engine rankings. Mobile-friendliness is no longer a nice-to-have; it’s a critical component of a successful online presence.

Search engines, recognizing the prevalence of mobile users, heavily favor websites designed for optimal mobile viewing. A responsive design ensures that your website adapts flawlessly to different screen sizes, from smartphones to tablets and desktop computers, offering a consistent and user-friendly experience across all platforms.

Importance of Mobile-Friendliness for Search Engines

Search engines, like Google, prioritize mobile-friendly websites. This prioritization stems from the recognition that a majority of users now access the web through mobile devices. Mobile-friendliness directly impacts user experience, engagement, and conversion rates.

How Responsive Design Impacts Search Rankings

Responsive design supports strong search performance. Google recommends responsive web design as the easiest pattern to implement and maintain, and search engines reward websites that provide a positive user experience across all devices. A responsive website adapts its layout and content to different screen sizes, keeping it readable and usable regardless of the device. Responsiveness is not a ranking factor by itself, but the mobile-friendly experience it delivers matters, especially under mobile-first indexing.

How to Test Mobile-Friendliness

Several tools can assess mobile-friendliness. Google retired its standalone Mobile-Friendly Test in late 2023. Lighthouse, which is built into Chrome DevTools and powers PageSpeed Insights, can audit a page under simulated mobile conditions. The device toolbar in Chrome DevTools lets you preview a layout at different screen sizes, and other browser extensions and developer tools give further detail on responsiveness. These tools help pinpoint the areas that need adjusting for mobile viewing.
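Alongside those tools, a quick local check can catch one of the most common causes of a broken mobile layout: a missing or misconfigured viewport meta tag. Without `<meta name="viewport" content="width=device-width, initial-scale=1">`, mobile browsers typically render the page at a desktop width and shrink it to fit. The sketch below uses Python’s built-in `html.parser`. The checks it runs are a small subset chosen for illustration, not a reproduction of Lighthouse’s audits.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport" and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def viewport_issues(html):
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return ['missing <meta name="viewport"> tag']

    # "width=device-width, initial-scale=1" -> {"width": "device-width", ...}
    settings = {}
    for part in finder.viewport.replace(" ", "").lower().split(","):
        if "=" in part:
            key, value = part.split("=", 1)
            settings[key] = value

    issues = []
    if settings.get("width") != "device-width":
        issues.append("viewport width is not set to device-width")
    if settings.get("user-scalable") in ("no", "0"):
        issues.append("pinch-zoom is disabled (user-scalable=no)")
    return issues


if __name__ == "__main__":
    print(viewport_issues('<meta name="viewport" content="width=1024, user-scalable=no">'))
```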

Best Practices for Mobile-First Indexing

Mobile-first indexing is a crucial aspect of modern SEO. It means that search engines primarily use the mobile version of your website for indexing and ranking. The mobile version therefore needs to offer a strong user experience and the same important content as the desktop version. Taking a mobile-first design approach from the start results in a website that is intuitive and easy to navigate on various mobile devices.

This ensures that the mobile site is not just a scaled-down version of the desktop site, but a truly mobile-centric experience.

Significance of Mobile Page Speed

Mobile page speed significantly influences user experience and search rankings. Slow-loading pages lead to high bounce rates and reduced user engagement. Search engines recognize the importance of fast loading times and often reward sites that prioritize page speed. Users expect a quick and seamless browsing experience on their mobile devices. Optimization for mobile page speed, alongside mobile-friendliness, is crucial for a positive user experience and better search rankings.

Advantages of Responsive Design

| Feature | Advantages |
|---|---|
| Improved User Experience | Provides a seamless browsing experience across devices, resulting in higher user engagement and satisfaction. |
| Enhanced Search Performance | Delivers a good mobile experience from a single URL, which supports visibility and organic traffic under mobile-first indexing. |
| Reduced Development Costs | One website design serves all devices, removing the need for separate mobile and desktop versions. |
| Simplified Maintenance | Updates and changes are applied to a single codebase, simplifying website maintenance. |
| Increased Accessibility | Responsive websites are easily accessible from various devices and screen sizes, including smartphones and tablets. |

Structured Data Markup

Structured data markup is a crucial aspect of technical SEO that helps search engines understand the context and meaning of the content on your website. By providing structured data, you give search engines a detailed description of your content, which makes it eligible for richer results in search engine results pages (SERPs). This can improve the visibility and click-through rate of your website and drive more traffic.

Using structured data is like providing a detailed map for search engines.

Instead of guessing the meaning of your content, they can precisely understand what each piece of information represents, leading to better comprehension and enhanced search results.

Structured Data Markup Formats

Structured data uses a shared, standardized vocabulary so that search engines interpret the different parts of your content consistently, regardless of the website. The most widely used vocabulary is Schema.org, a collaborative project founded by Google, Microsoft (Bing), Yahoo, and Yandex. Schema.org terms can be written in several syntaxes: JSON-LD, Microdata, and RDFa. Google recommends JSON-LD.

- Schema.org provides a vocabulary of types and properties that can describe many kinds of content, including products, events, recipes, articles, and much more.

Benefits of Using Structured Data

Implementing structured data markup offers numerous advantages for website owners.

- Enhanced Search Engine Understanding: Structured data markup clearly defines the meaning of content, allowing search engines to interpret it accurately.

- Rich Results in SERPs: Rich snippets, which include images, star ratings, and other details, appear in search results, enhancing the visual appeal and click-through rates of your website. Users are more likely to click on results with rich snippets.

- Improved Click-Through Rates (CTR): Rich results can significantly improve click-through rates from search results, leading to increased website traffic.

- Increased Visibility: Rich results make your website more prominent in search engine results pages, attracting more potential visitors.

- Enhanced User Experience: Rich results provide users with more context and information directly in the search results, improving the user experience.

Examples of Schema.org Markup

Schema.org provides various types of markup for different types of content. Here are some examples.

- Product Schema: This markup is used to describe products, including their name, price, availability, and images.

- Article Schema: This markup describes articles, specifying properties such as the headline, author, publication date, and image.

- Event Schema: This markup is used for events, defining details like date, time, location, and description.

How Structured Data Helps Search Engines

Structured data markup helps search engines understand the meaning and context of content elements. This structured format enables search engines to accurately associate the content with specific categories, topics, and entities. By providing this extra context, search engines can present more relevant results to users.

Implementing Structured Data Markup

Implementing structured data involves adding markup to your page’s HTML, either as a JSON-LD script block or as Microdata or RDFa attributes on existing elements. This markup describes the content in a format that search engines can understand.

- Using a Structured Data Markup Generator: Tools like Google’s Structured Data Markup Helper can assist in generating the necessary code. This tool guides you through the process of adding the appropriate tags to your website.

- Manually Adding Markup: While tools exist, you can also manually add structured data markup to your website’s HTML. This requires understanding the specific Schema.org vocabulary and the proper syntax for implementing the markup.

- Validating Markup: Check that your structured data is correctly formatted using validation tools such as Google’s Rich Results Test or the Schema Markup Validator. This helps you catch common errors and ensures that search engines can interpret your data correctly.
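As an illustration of the manual approach, the sketch below builds Product markup as JSON-LD, the syntax Google recommends. It wraps the markup in a `<script type="application/ld+json">` tag that can go in the page’s HTML. The product details are hypothetical. The properties shown (`name`, `image`, and an `Offer` with `price`, `priceCurrency`, and `availability`) are only a common subset. Check Google’s documentation for the properties each rich result type needs, and validate the output before publishing.

```python
import json


def product_jsonld(name, image_url, price, currency, in_stock):
    """Return a <script> tag containing schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": [image_url],
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": (
                "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
            ),
        },
    }
    # Escape "<" so a value containing "</script>" cannot end the tag early;
    # \u003c is a valid JSON escape that parsers turn back into "<".
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(product_jsonld("Trail Running Shoe", "https://example.com/images/shoe.jpg", 89.5, "USD", True))
```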

Types of Structured Data and Use Cases

| Type of Structured Data | Use Case |
|---|---|
| Product | Describing products on an e-commerce website, including details like price, availability, and images. |
| Article | Providing information about articles, including headline, author, publication date, and image. |
| Event | Listing details about events, including date, time, location, and description. |
| Recipe | Describing recipes, including ingredients, instructions, and preparation time. |
| Person | Describing individuals, including their name, profession, and contact information. |

Security and HTTPS

Website security is paramount in the digital age. It’s not just about protecting user data; it’s also a factor in search engine optimization (SEO). A secure website earns the trust of both users and search engines, which can influence rankings and overall online visibility. This section covers the significance of website security, the advantages of HTTPS, and strategies to strengthen your site’s defenses.

Search engines favor secure websites not only because users trust them but also because HTTPS protects the connection itself.

A secure connection ensures that data exchanged between the user’s browser and the website’s server is encrypted, protecting sensitive information from unauthorized access. This enhanced security contributes to a positive user experience, a key factor in modern SEO.

Importance of Website Security for Search Engines

Search engines like Google recognize the importance of website security as a critical factor in determining the quality and trustworthiness of a website. A secure website, indicated by the HTTPS protocol, demonstrates a commitment to user data protection. This signals a responsible and trustworthy online presence, boosting the website’s credibility in the eyes of both users and search engines.

Benefits of Using HTTPS

Implementing HTTPS offers advantages beyond improved security. It builds user trust and confidence, which can translate into higher engagement and conversions. The encryption provided by HTTPS safeguards sensitive data, such as login credentials and payment information, protecting users from malicious actors. Google has also confirmed HTTPS as a ranking signal, albeit a lightweight one.


Techniques for Securing a Website

Ensuring website security involves a multi-faceted approach. These are crucial steps for protecting your site from vulnerabilities:

- Secure Hosting Providers: Choosing a reputable hosting provider with robust security measures is fundamental. They typically employ firewalls, intrusion detection systems, and other security protocols to protect against potential threats.

- Regular Security Audits: Regularly assessing your website for vulnerabilities is essential. Penetration testing, vulnerability scanning, and code reviews help identify and address potential weaknesses before they are exploited.

- Strong Passwords and Access Control: Robust passwords for all accounts and strict access controls are critical. Employing multi-factor authentication adds an extra layer of security.

- Secure Content Delivery Network (CDN): Utilizing a CDN can enhance website performance and security by distributing content across various servers. This approach often includes measures to mitigate DDoS attacks.

Impact of Security Issues on Search Rankings

Security vulnerabilities can severely affect a website’s search rankings. If a site is hacked or distributes malware, search engines and browsers may show warnings to visitors, and the site can lose rankings until the problem is cleaned up. This can significantly affect organic traffic and revenue. For example, a website with a known security breach may see a drop in rankings as search engines devalue its trustworthiness.


How Search Engines Prioritize Secure Websites

Search engines increasingly favor secure websites. Google has confirmed HTTPS as a ranking signal, though a lightweight one, that rewards sites for protecting user data. This reflects a broader shift toward secure browsing. Chrome, for example, labels pages served over plain HTTP as “Not secure”, which can erode visitor trust even where rankings are unaffected.

Security Protocols and Their Levels of Protection

The following table outlines different security protocols and their associated levels of protection:

| Protocol | Description | Level of Protection |
|---|---|---|
| HTTPS | HTTP sent over an encrypted TLS connection | High when served over a current TLS version (1.2 or 1.3) |
| SSL (Secure Sockets Layer) | Predecessor to TLS | Insecure; SSL 2.0 and 3.0 are both deprecated and should be disabled |
| TLS (Transport Layer Security) | Successor to SSL | High for TLS 1.2 and 1.3; TLS 1.0 and 1.1 are deprecated |
| SFTP (SSH File Transfer Protocol) | Encrypted file transfer over SSH, commonly used to upload site files | High |
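On most WordPress sites, the TLS version policy is set by the host, web server, or CDN rather than in application code. The same policy can, however, be expressed in a few lines with Python’s `ssl` module. The sketch below creates a server-side context that refuses anything older than TLS 1.2, since SSL 3.0 and TLS 1.0/1.1 are deprecated. It also creates a client-side context that verifies certificates and hostnames. The certificate file names in the comment are hypothetical placeholders.

```python
import ssl


def make_server_context():
    """TLS server context that rejects SSL and TLS 1.0/1.1 clients."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # A real server would also load its certificate, e.g.:
    # context.load_cert_chain("fullchain.pem", "privkey.pem")  # hypothetical paths
    return context


def make_client_context():
    """Client context with certificate and hostname verification enabled."""
    context = ssl.create_default_context()  # verifies certificates by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    print(make_server_context().minimum_version)
    print(make_client_context().minimum_version)
```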

Technical SEO Tools and Audits

Technical SEO is more than just a checklist; it’s about understanding your website’s inner workings and optimizing them for search engines. Tools and audits are essential for identifying technical issues that might be hindering your site’s performance. A thorough understanding of these tools lets you address problems before they hurt your rankings.

A robust technical SEO strategy involves regular audits to identify and resolve issues like crawl errors, slow page speed, and mobile-friendliness problems. Using the right tools helps you pinpoint these issues efficiently, saving time and resources. Proactive maintenance through audits keeps your website search engine-friendly.

Popular Technical SEO Tools

Various tools are available to help you assess your website’s technical health. These tools provide insights into crucial aspects like site structure, crawlability, and performance. Choosing the right tool depends on your specific needs and budget.

- Google Search Console: A free tool from Google that provides invaluable insights into how Googlebot crawls and indexes your website. It highlights crawl errors, indexing problems, and Core Web Vitals issues. This is a crucial tool for understanding how search engines perceive your site.

- SEMrush: A comprehensive toolkit offering a wide range of features, including site audits, keyword research, and rank tracking. SEMrush’s technical audit feature examines aspects like site speed, mobile-friendliness, and redirects, offering actionable recommendations.

- Ahrefs: Another powerful SEO suite with a strong focus on backlink analysis. Ahrefs also provides detailed technical audits, including checks for crawl errors, broken links, and mobile responsiveness. It’s particularly helpful for large and complex websites.

- Screaming Frog: A dedicated crawler specializing in website analysis. It excels at identifying crawl errors, broken links, and sitemap issues. Its ability to gather data from a large number of pages makes it efficient for large websites.

- DeepCrawl (since rebranded as Lumar): A sophisticated crawler offering advanced features such as custom crawling patterns, comprehensive reporting, and integrations with other tools. It's well suited to complex sites that need detailed analysis of specific aspects.

Technical SEO Audit Process

A technical SEO audit is a systematic evaluation of your website's technical elements from a search engine's perspective. It involves using tools to identify issues and then implementing solutions.

- Crawl the website: Use a crawler tool (like Screaming Frog) to systematically navigate your website’s pages and gather data. This process generates valuable information about your site’s structure, links, and content.

- Analyze the results: Review the data gathered by the crawler to identify any errors or issues. Look for broken links, crawl errors, slow loading times, and mobile responsiveness problems.

- Prioritize issues: Not all issues are created equal. Identify the most critical problems that could significantly impact your website’s visibility and rankings. Focus on resolving these issues first.

- Implement solutions: Based on the analysis, implement solutions to address the prioritized problems. This could involve fixing broken links, optimizing page speed, improving mobile responsiveness, or updating sitemaps.

- Monitor progress: Regularly monitor your website’s performance after implementing changes. Use Google Search Console and other tools to track improvements and identify any new issues.
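The crawl-and-analyze steps above can be sketched in a few dozen lines of Python. This is a minimal, hypothetical example, not how Screaming Frog or any other tool works internally: it performs a breadth-first crawl of a single host, honors `robots.txt` rules via the standard library's `urllib.robotparser`, records each page's HTTP status, and lists internal links that lead to 4xx/5xx responses. The `fetch` function is an assumption you supply, which keeps the crawler testable without network access.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Deque, Dict, List, Optional, Tuple
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical fetcher signature: takes a URL, returns (HTTP status, HTML body).
Fetcher = Callable[[str], Tuple[int, str]]


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url: str, fetch: Fetcher, robots_txt: str = "",
          user_agent: str = "audit-bot", max_pages: int = 500) -> Dict[str, object]:
    """Breadth-first crawl of one host, recording status codes and broken links."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())  # an empty robots.txt allows everything
    host = urlparse(start_url).netloc
    statuses: Dict[str, int] = {}
    broken: List[Tuple[str, str]] = []  # (page containing the link, broken target)
    blocked: List[str] = []             # URLs disallowed by robots.txt
    queue: Deque[Tuple[str, Optional[str]]] = deque([(start_url, None)])
    seen = {start_url}
    while queue and len(statuses) < max_pages:
        url, referrer = queue.popleft()
        if not robots.can_fetch(user_agent, url):
            blocked.append(url)
            continue
        status, body = fetch(url)
        statuses[url] = status
        if status >= 400:
            if referrer is not None:
                broken.append((referrer, url))
            continue  # don't follow links from error pages
        parser = LinkExtractor()
        parser.feed(body)
        for href in parser.links:
            # Resolve relative links and drop #fragments so /a and /a#b count once.
            target, _ = urldefrag(urljoin(url, href))
            if urlparse(target).netloc == host and target not in seen:
                seen.add(target)
                queue.append((target, url))
    return {"statuses": statuses, "broken": broken, "blocked": blocked}
```

In practice you might build `fetch` around `urllib.request.urlopen`, catching `urllib.error.HTTPError` (whose `code` attribute holds the status) so that 404s are recorded rather than raised. A real crawler would also need to handle redirects, rate limiting, and checks on external links.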

Key Metrics to Track During an Audit

Tracking specific metrics during a technical audit helps to quantify the effectiveness of your improvements. These metrics provide valuable data to measure the impact of changes.

- Crawl errors: Identifying and resolving crawl errors ensures Googlebot can effectively access and index your site’s content.

- Page speed: Optimizing page load times enhances user experience and improves rankings. A fast site is crucial for both users and search engines.

- Mobile-friendliness: Ensuring your site is mobile-friendly is essential for reaching a broader audience and maintaining a good user experience.

- Indexation status: Monitoring your site’s indexation status helps you identify and resolve any issues preventing Google from correctly indexing your content.

- Broken links: Addressing broken links prevents users from encountering errors and keeps your site functioning smoothly. This improves the overall user experience.
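As a rough illustration of how some of these metrics can be computed from crawl output, the Python sketch below takes hypothetical per-page records and flags crawl errors, slow pages, pages marked `noindex` (via a robots meta tag or an `X-Robots-Tag` header), and pages without a viewport meta tag. The `PageRecord` format and the 3-second `slow_after` threshold are assumptions, and a missing viewport tag is only a crude proxy for mobile-friendliness, not a substitute for testing on real devices. Broken links need link-level data from a crawl, so they are not counted here.

```python
from dataclasses import dataclass, field
from html.parser import HTMLParser
from typing import Dict, List


@dataclass
class PageRecord:
    """Hypothetical record of one crawled page."""
    url: str
    status: int
    load_seconds: float
    html: str = ""
    headers: Dict[str, str] = field(default_factory=dict)


class MetaScanner(HTMLParser):
    """Records the <meta name="robots"> content and whether a viewport tag exists."""

    def __init__(self) -> None:
        super().__init__()
        self.robots = ""
        self.has_viewport = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {k: (v or "") for k, v in attrs}
        name = values.get("name", "").lower()
        if name == "robots":
            self.robots = values.get("content", "").lower()
        elif name == "viewport":
            self.has_viewport = True


def audit_summary(pages: List[PageRecord], slow_after: float = 3.0) -> Dict[str, List[str]]:
    """Group page URLs by the audit issue they exhibit."""
    summary: Dict[str, List[str]] = {
        "crawl_errors": [], "slow_pages": [], "noindex": [], "no_viewport": []}
    for page in pages:
        if page.status >= 400:
            summary["crawl_errors"].append(page.url)
            continue  # error pages have no meaningful content to inspect
        if page.load_seconds > slow_after:
            summary["slow_pages"].append(page.url)
        scanner = MetaScanner()
        scanner.feed(page.html)
        # HTTP header names are case-insensitive, so normalize before lookup.
        headers = {k.lower(): v.lower() for k, v in page.headers.items()}
        if "noindex" in scanner.robots or "noindex" in headers.get("x-robots-tag", ""):
            summary["noindex"].append(page.url)
        if not scanner.has_viewport:
            summary["no_viewport"].append(page.url)
    return summary
```

Returning URL lists rather than bare counts keeps the output actionable: the same structure tells you both how many pages are affected and which ones to fix first.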

Best Practices for Using Technical SEO Tools

Using technical SEO tools effectively requires understanding their strengths and limitations. Following these best practices helps you get the most out of them.

- Understand the tool’s capabilities: Before using a tool, understand its specific functionalities and limitations. Different tools excel in different areas.

- Regular audits: Conduct regular audits to identify and resolve potential issues proactively.

- Thorough analysis: Don’t just scan; analyze the results deeply to identify the root cause of any problems.

- Actionable recommendations: Focus on actionable recommendations provided by the tools to effectively address the identified problems.

- Stay updated: The SEO landscape is constantly evolving, so staying current on new tools and best practices is crucial.

Comparison of Technical SEO Auditing Tools

The following table compares several technical SEO auditing tools:

| Tool | Features | Pros | Cons |
|---|---|---|---|
| Google Search Console | Crawl errors, indexation, mobile usability | Free, direct insights from Google | Limited advanced features |
| SEMrush | Comprehensive audit, keyword research, rank tracking | Wide range of features, actionable recommendations | Paid service, steep learning curve |
| Ahrefs | Backlink analysis, technical audits, crawl errors | Robust features, large dataset | Paid service, steep learning curve |
| Screaming Frog | Crawling, broken links, sitemaps | Efficient for large sites, dedicated crawler | Limited advanced features, no comprehensive reporting |
| DeepCrawl (now Lumar) | Custom crawling, comprehensive reporting, integrations | Advanced features, tailored for large sites | High cost, complex interface |

Ending Remarks

In conclusion, understanding what technical SEO is matters for any WordPress website owner. By optimizing your website's technical aspects, you create an efficient, user-friendly, and search-engine-friendly experience. This guide covered the key components. Remember, technical SEO is an ongoing process: continuous monitoring and adjustment are key to maintaining and improving your search engine rankings.