How long to run an A/B test is a crucial question for any marketer or product manager. A poorly timed or executed test can lead to wasted resources and missed opportunities. This guide examines the factors that determine the optimal duration for your A/B tests, from the differences between test types to external factors like seasonal trends.

We’ll explore the statistical concepts behind calculating the necessary sample size, examine external factors that might influence your test, and provide practical strategies for managing expectations and handling unexpected delays. Ultimately, this guide equips you with the knowledge to make data-driven decisions about your A/B test duration.

Defining the Scope of an A/B Test

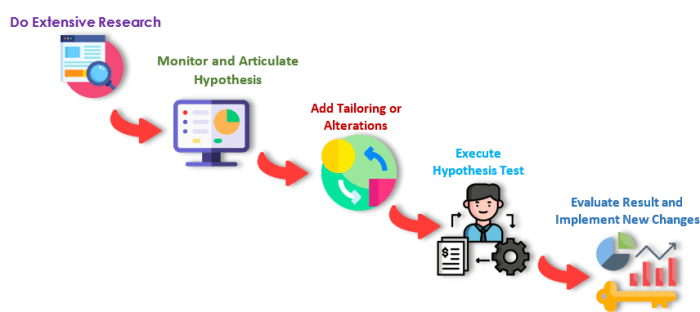

A/B testing is a powerful tool for optimizing website design, marketing campaigns, and product features. Understanding the scope of an A/B test, however, is crucial for ensuring that the results are reliable and actionable. A well-defined scope encompasses the specific hypotheses being tested, the metrics used to measure success, and the duration of the experiment.

Defining the scope of your A/B test is more than just choosing two versions; it’s about meticulously planning the entire experiment to maximize the value of the data collected.

It’s about understanding what you want to learn and how you will measure success. This careful planning minimizes the risk of inconclusive results or wasted effort.

Types of A/B Tests and Their Goals

Different types of A/B tests address different objectives. A standard A/B test compares two versions of a single webpage element, such as a button or a headline. Multivariate tests, on the other hand, evaluate multiple variations of several elements simultaneously; because traffic is split across every combination, each combination receives fewer visitors, so these tests generally take longer. A/B tests can also be used to compare marketing campaigns or product features.

Each test type serves a distinct purpose, ranging from improving user experience to boosting conversion rates. The specific goal will influence the metrics tracked and the duration of the test.

Factors Influencing A/B Test Duration

Several factors influence the optimal duration of an A/B test. The expected effect size plays a critical role. A larger expected effect size, meaning a bigger difference between the variations, requires fewer users and therefore a shorter test. Conversely, a smaller effect size requires a longer test to gather enough data to detect the difference reliably. Variability in user behavior also affects the test duration.

High variability requires a longer test to ensure that the observed difference isn’t simply due to random fluctuations in user behavior. The desired statistical power, the probability of detecting a true effect if one exists, is another important consideration: higher power requires a larger sample and, at a given traffic level, a longer test.
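To make these three relationships concrete, here is a minimal sketch of the standard normal-approximation formula for comparing the means of two equal-sized groups with a two-sided test: n = 2(z₁₋α/₂ + z_power)² σ² / δ² per group, where δ is the effect size and σ the standard deviation of the metric. The function name and the example values (σ = 1.0, effects of 0.1 and 0.2) are hypothetical and chosen only for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect: float, std_dev: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 * sigma**2 / delta**2
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    n = 2 * (z_alpha + z_beta) ** 2 * std_dev ** 2 / effect ** 2
    return ceil(n)  # round up to whole users


print(sample_size_per_group(effect=0.2, std_dev=1.0))              # baseline case
print(sample_size_per_group(effect=0.1, std_dev=1.0))              # half the effect
print(sample_size_per_group(effect=0.2, std_dev=2.0))              # double the variability
print(sample_size_per_group(effect=0.2, std_dev=1.0, power=0.90))  # higher power
```

Because the effect size is squared in the denominator, halving it roughly quadruples the required sample, and doubling the standard deviation has the same effect; raising power from 80% to 90% adds about a third more users.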


Determining Appropriate Sample Size

The sample size for an A/B test directly affects the test’s duration. A larger sample size yields more precise estimates and a better chance of detecting a real difference. A sample that is too small may miss a true difference or produce unstable estimates, leading to inaccurate conclusions. To determine the appropriate sample size, you need to consider the effect size you expect to see, the variability in user behavior, and the desired statistical power.

Statistical power analysis tools can help you calculate the required sample size. This ensures your test can detect the effect you care about within a reasonable timeframe.

A crucial concept in A/B testing is the calculation of the required sample size. This involves estimating the expected effect size, the variability of the metric being measured, and the desired level of statistical significance (typically a 5% significance level, that is, 95% confidence).

Relationship Between Effect Size, Sample Size, and Duration

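The table below illustrates the relationship between effect size, sample size, and estimated duration for A/B tests. The figures are rough estimates: actual requirements depend heavily on your baseline conversion rate, on whether an increase is measured in relative or absolute terms, and on your traffic patterns.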

| Effect Size | Sample Size | Estimated Duration |
|---|---|---|
| Large (e.g., 10% increase in conversion rate) | 1,000-2,000 users | 1-2 weeks |
| Medium (e.g., 5% increase in conversion rate) | 2,000-5,000 users | 2-4 weeks |
| Small (e.g., 1% increase in conversion rate) | 5,000-10,000+ users | 4-8 weeks or longer |
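Whether "10% increase" means a relative lift (10% to 11%) or an absolute one (10% to 20%) changes the answer dramatically. The sketch below uses the common unpooled normal-approximation formula for two proportions to compute the per-group sample under both readings. It assumes a hypothetical 10% baseline conversion rate, a 5% significance level, and 80% power; substitute your own numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group_proportions(p1: float, p2: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size to detect p1 vs p2 with a two-sided test
    (unpooled normal approximation)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)  # sum of Bernoulli variances
    return ceil(z_sum ** 2 * variance / (p1 - p2) ** 2)


BASELINE = 0.10  # hypothetical control conversion rate
for label, lift in [("Large", 0.10), ("Medium", 0.05), ("Small", 0.01)]:
    relative = n_per_group_proportions(BASELINE, BASELINE * (1 + lift))
    absolute = n_per_group_proportions(BASELINE, BASELINE + lift)
    print(f"{label:6} relative lift: {relative:>9,} per group | "
          f"absolute lift: {absolute:>7,} per group")
```

Under these assumptions, the relative reading needs roughly 15,000 users per group even for the "large" row, while the absolute reading needs only a few hundred for the large and medium rows. Running your own baseline through a calculation like this is more reliable than reading figures off a generic table.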

Calculating the Duration

Knowing how long to run an A/B test is crucial for making informed decisions. Simply launching a test and hoping for results isn’t sufficient. A well-structured test requires a planned duration that allows enough data to be collected to draw reliable conclusions.

Statistical Significance in A/B Testing

Statistical significance, in the context of A/B testing, indicates that the observed difference between the control and variant groups would be unlikely to arise from random chance alone if there were no real difference. Two key concepts underpin this: confidence intervals and p-values.

Confidence intervals provide a range of plausible values for the true difference in conversion rates between the control and the variant. A narrower interval indicates greater precision. A 95% confidence interval means that if the experiment were repeated many times and an interval calculated each time, about 95 out of 100 of those intervals would contain the true difference.
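A minimal sketch of the simple Wald interval for the difference between two conversion rates, assuming independent groups and samples large enough for the normal approximation to hold. The function name and the counts (500 of 5,000 visitors converting in the control, 575 of 5,000 in the variant) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for p_b - p_a (normal approximation)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Standard error of the difference between two independent proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # 1.96 for 95%
    diff = p_b - p_a
    return diff - z * se, diff + z * se


low, high = diff_confidence_interval(500, 5_000, 575, 5_000)
print(f"95% CI for the difference: {low:+.2%} to {high:+.2%}")
```

Because the standard error shrinks with the square root of the sample size, quadrupling both groups halves the interval's width, which is one reason smaller effects need longer tests.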

P-values represent the probability of observing results as extreme as, or more extreme than, those obtained from the experiment, assuming there is no real difference between the control and variant. A low p-value (typically below 0.05) indicates a statistically significant difference: results this extreme would be unlikely if there were no real difference. It is not the probability that the variant is no better than the control.
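For conversion rates, a common way to obtain this p-value is the pooled two-proportion z-test. The sketch below assumes independent groups and large samples; the function name and counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: p_a == p_b, using the pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common conversion rate if H0 is true
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Probability of a |z| at least this large when there is no real difference.
    return 2 * (1 - NormalDist().cdf(abs(z)))


print(f"p = {two_proportion_p_value(500, 5_000, 575, 5_000):.4f}")
```

With these counts (10.0% vs 11.5%) the p-value lands between 0.01 and 0.02, below the usual 0.05 threshold; identical counts give a p-value of 1.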

Calculating Minimum Sample Size

Determining the required sample size is essential to ensure sufficient data for accurate statistical analysis. A larger sample size generally leads to a more precise and reliable estimate of the difference between the groups, but also increases the test duration. The formula for calculating the minimum sample size needed for a given level of statistical significance is commonly written as:

$$
n = \frac{Z^2 \cdot p \cdot (1 - p)}{(\text{margin of error})^2}
$$

Where:

- n represents the minimum sample size required for each group.

- Z is the Z-score corresponding to the desired confidence level (e.g., 1.96 for 95%).

- p is the estimated conversion rate of the control group.

- margin of error is the acceptable difference between the observed and true conversion rates.

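Using a realistic estimate of the control group’s conversion rate, along with the desired margin of error and the chosen confidence level, we can calculate the required sample size. Note that this formula sizes a sample to estimate a single conversion rate to within the margin of error; it has no power term, so for detecting a difference between two groups a power-analysis formula, which also accounts for the desired power, is the more appropriate choice.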
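The margin-of-error formula translates directly into code. This sketch assumes a hypothetical 5% control conversion rate and a margin of error of ±1 percentage point; the function name is illustrative.

```python
from math import ceil
from statistics import NormalDist


def margin_of_error_sample_size(p: float, margin: float,
                                confidence: float = 0.95) -> int:
    """n = Z^2 * p * (1 - p) / margin^2, rounded up to a whole visitor."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    return ceil(z ** 2 * p * (1 - p) / margin ** 2)


# Hypothetical inputs: 5% control conversion rate, +/- 1 percentage point.
print(margin_of_error_sample_size(p=0.05, margin=0.01))  # 1825
```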

Determining Test Duration

Calculating the test duration involves combining the minimum sample size with the expected daily traffic. Because the sample size counts visitors (or users) exposed to each variation, not conversions, the key question is how many eligible visitors enter each group per day.

- Estimate Daily Traffic: Based on historical data, predict the average number of eligible visitors per day that will enter each group.

- Determine Required Sample Size: Calculate the minimum sample size for each group using the formula above.

- Estimate Test Duration: Divide the minimum sample size per group by the daily visitors per group. For example, if each group needs 10,000 visitors and about 100 visitors per day enter each group, the test would need approximately 100 days.
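The steps above can be sketched as a small helper. It assumes traffic is split evenly across groups; the optional rounding up to whole weeks is a common practice (so each weekday is represented equally), not a requirement, and the function name is hypothetical.

```python
from math import ceil


def estimate_duration_days(n_per_group: int, daily_visitors: int,
                           groups: int = 2, round_to_weeks: bool = True) -> int:
    """Days needed for every group to reach n_per_group visitors.

    Assumes daily_visitors counts all eligible visitors entering the
    experiment per day, split evenly across the groups.
    """
    per_group_per_day = daily_visitors / groups
    days = ceil(n_per_group / per_group_per_day)
    if round_to_weeks:
        # Round up to whole weeks so weekday/weekend patterns are balanced.
        days = ceil(days / 7) * 7
    return days


# Mirrors the example above: 10,000 per group, 100 visitors/day into each of 2 groups.
print(estimate_duration_days(10_000, daily_visitors=200, round_to_weeks=False))  # 100
print(estimate_duration_days(10_000, daily_visitors=200))                        # 105
```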

Impact of Significance Levels on Duration

| Confidence Level | Required Sample Size (per group) | Estimated Duration (Example) |
|---|---|---|
| 90% | ~5,000 | ~50 days (assuming 100 visitors/day per group) |
| 95% | ~10,000 | ~100 days (assuming 100 visitors/day per group) |
| 99% | ~25,000 | ~250 days (assuming 100 visitors/day per group) |

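This table illustrates the trend: higher confidence levels (that is, stricter significance thresholds) require larger sample sizes and, consequently, longer test durations. The figures are illustrative; the exact increase depends on the formula and inputs used.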
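Under the margin-of-error formula n = Z² · p(1 − p) / (margin of error)², the required sample grows with Z², so the relative cost of a stricter confidence level can be computed without knowing p or the margin. A minimal sketch, with a hypothetical function name:

```python
from statistics import NormalDist


def relative_sample_size(confidence: float, reference: float = 0.95) -> float:
    """Ratio n(confidence) / n(reference) when n is proportional to Z^2."""
    def z_for(level: float) -> float:
        return NormalDist().inv_cdf(1 - (1 - level) / 2)  # two-sided critical value
    return (z_for(confidence) / z_for(reference)) ** 2


for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%} confidence: x{relative_sample_size(level):.2f} the 95% sample size")
```

Under this formula, moving from 95% to 99% confidence multiplies the required sample by about 1.73, and dropping to 90% reduces it to about 0.70 of the 95% figure. The table's figures exaggerate the spread somewhat but show the same direction.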

External Factors Affecting Test Duration

A/B testing isn’t a one-size-fits-all process. External factors can significantly impact the duration of a test, often requiring adjustments to maintain its validity and reliability. Understanding these influences allows you to make informed decisions about when to conclude a test and avoid drawing inaccurate conclusions from skewed data. External factors introduce variability and complexity to the data collected during an A/B test.


Ignoring these influences can lead to erroneous conclusions about the effectiveness of the different versions being tested. A thoughtful approach to managing these external factors is critical for successful A/B testing.

Seasonality

Seasonality plays a crucial role in user behavior and conversion rates. Certain products or services experience peaks and troughs in demand based on the time of year, holidays, or specific events. For example, a travel agency might see a surge in bookings during the summer months. If an A/B test is conducted during a low-demand period, the results may not reflect the true performance of the variations during peak seasons.

Conversely, running a test during a period of high demand might yield skewed results that are inflated or artificially low. Careful consideration of the seasonal trends is needed to accurately interpret the results.

Marketing Campaigns

Concurrent marketing campaigns can significantly impact user engagement and conversion rates. Promotions, advertisements, and other marketing efforts can attract more users and potentially influence their choices during the test period. For instance, a promotional discount running alongside an A/B test might artificially boost conversions for one variation, making it seem more effective than it truly is. Conversely, a lack of marketing activity might reduce engagement and skew results towards a less effective variation.

Changes in User Behavior

User behavior is constantly evolving. Emerging trends, shifts in preferences, or changes in the overall market landscape can influence the results of an A/B test. For example, a new social media platform might attract a large number of users and change the way people interact with products online, potentially altering conversion rates. Monitoring how user behavior changes during the test, and how those changes correlate with the results, is essential for adjusting the duration appropriately.


Table: External Factors Affecting A/B Test Duration

| Factor | Description | Potential Impact |
|---|---|---|
| Seasonality | Variations in user demand and behavior across different times of the year. | Can inflate or deflate conversion rates, potentially skewing results if the test period doesn’t align with typical user activity. |
| Marketing Campaigns | Concurrent promotional activities, advertisements, and other marketing efforts. | Can artificially boost or reduce conversion rates, making it difficult to isolate the impact of the variations being tested. |
| Changes in User Behavior | Evolving user preferences, emerging trends, or market shifts. | Can influence conversion rates and user engagement, potentially requiring adjustments to the test duration. |

Practical Considerations for Test Duration

A/B testing is a powerful tool for optimizing website performance and user experience. However, determining the appropriate duration for an A/B test is crucial for achieving meaningful results and avoiding wasted resources. This section explores practical strategies for managing expectations, setting realistic timelines, and handling potential delays. Understanding these considerations will empower you to run successful and efficient A/B tests.

Careful planning and realistic expectations are essential for successful A/B testing.

Misaligned expectations between stakeholders and the testing team can lead to frustration and ultimately, suboptimal results. By proactively addressing potential challenges and communicating transparently, teams can ensure that A/B testing initiatives are well-received and yield valuable insights.

Managing Stakeholder Expectations

Effective communication is key to managing stakeholder expectations. Clearly defined goals and timelines, coupled with transparent reporting, will help build trust and maintain momentum.

- Clearly Articulate Goals: Instead of vague statements like “improve conversions,” quantify the desired outcome. For example, “increase conversion rate by 10%.” This clarity helps stakeholders understand the scope and potential impact of the test.

- Establish Realistic Timelines: Base timelines on historical data, anticipated traffic volume, and the required sample size. Overly optimistic timelines can lead to unrealistic expectations and pressure on the testing team.

- Regular Reporting and Communication: Provide stakeholders with regular updates on test progress, including key metrics and observations. Use visualizations to present data effectively and highlight any significant trends or deviations.

Setting Realistic Goals and Timelines

A/B testing should be driven by well-defined goals and realistic timelines. Ambiguous objectives can lead to unproductive testing and wasted resources.

- Define Specific Objectives: Clearly state the specific metric to be improved (e.g., conversion rate, click-through rate). Define the desired improvement percentage or value. For example, “increase the conversion rate from 2% to 2.5% within a 2-week period.”

- Determine Sample Size: The required sample size for statistical significance depends on the expected effect size, significance level, and desired power. Calculate this accurately using appropriate formulas or tools to ensure the test has enough data to draw valid conclusions.

- Project Realistic Timeframes: Use expected traffic volume, the significance level, and the desired power to estimate the necessary duration. If only a small effect is expected, increase the sample size and timeframe accordingly.
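As a worked check of the example objective above (raising conversion from 2% to 2.5% within two weeks), the sketch below estimates the per-group sample with the unpooled two-proportion power formula and compares it with the available traffic. The 5% significance level, 80% power, and 1,500 eligible visitors per day split evenly across two groups are hypothetical assumptions.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size to detect p1 vs p2 (two-sided, unpooled normal approximation)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return ceil(z_sum ** 2 * (p1 * (1 - p1) + p2 * (1 - p2)) / (p1 - p2) ** 2)


needed = n_per_group(0.02, 0.025)       # visitors required in each group
daily_visitors = 1_500                  # hypothetical, split evenly across 2 groups
days_needed = ceil(needed / (daily_visitors / 2))
min_daily_for_two_weeks = ceil(2 * needed / 14)

print(f"Per-group sample: {needed:,}")
print(f"Days at {daily_visitors:,} visitors/day: {days_needed}")
print(f"Visitors/day needed to finish in 14 days: {min_daily_for_two_weeks:,}")
```

Under these assumptions, the goal needs roughly 13,800 visitors per group, which takes 19 days at 1,500 visitors per day. Finishing in two weeks would require close to 2,000 visitors per day, so either the timeline or the expected effect has to change.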

Handling Unexpected Delays and Issues

Unforeseen circumstances can impact test duration. Having contingency plans and adaptable strategies is essential for navigating these challenges.

- Identify Potential Roadblocks: Proactively identify potential delays, such as unexpected website maintenance, technical glitches, or changes in user behavior. Anticipate and plan for these situations.

- Develop Contingency Plans: Outline alternative strategies for addressing delays or issues. For instance, if the test is significantly delayed, consider adjusting the scope or adding more resources. If a delay changes how much traffic you can collect, recalculate the required sample size so the test keeps enough statistical power to detect the effect you care about.

- Monitor for Issues: Regularly monitor the test for any unexpected issues or delays. Early detection allows for timely adjustments and prevents the test from running unnecessarily long.

Best Practices for Managing A/B Test Duration

Implementing best practices for managing A/B test duration ensures efficiency and minimizes wasted resources.

| Best Practice | Description | Example |
|---|---|---|
| Clear Communication Plan | Establish a consistent communication channel and frequency for updates to stakeholders. | Weekly email reports with key metrics and progress updates. |
| Contingency Planning | Develop a plan for handling potential delays or unexpected issues. | If a crucial feature is unavailable, re-evaluate the test scope and adjust timelines. |
| Flexible Timelines | Be prepared to adjust timelines based on the test’s progress and unforeseen circumstances. | Extend the test duration if necessary to reach the planned sample size. |
| Data-Driven Decisions | Continuously analyze data during the test and adjust strategies as needed. | If one variant performs significantly better than others, end the test early only if an early-stopping rule (such as a sequential testing plan) was set in advance; stopping at the first significant result inflates the false-positive rate. |
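Why an unplanned early stop is risky shows up clearly in a small simulation. The sketch below runs hypothetical A/A tests, where both groups share the same true 10% conversion rate so every "significant" result is a false positive, and compares checking once at the planned sample size with checking at 20 evenly spaced interim looks and stopping at the first p < 0.05. All parameters are assumptions chosen to keep the run short.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided pooled two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):  # no variation yet, so no evidence of a difference
        return 1.0
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def aa_false_positive_rate(peek: bool, sims: int = 300, n_per_group: int = 1_000,
                           looks: int = 20, rate: float = 0.10, seed: int = 7) -> float:
    """Share of simulated A/A tests (no real difference) declared significant."""
    rng = random.Random(seed)
    # Evenly spaced interim looks; the last one is the planned final sample size.
    checkpoints = {n_per_group * k // looks for k in range(1, looks + 1)}
    significant = 0
    for _ in range(sims):
        conv_a = conv_b = 0
        for i in range(1, n_per_group + 1):
            conv_a += rng.random() < rate  # both groups share the same true rate
            conv_b += rng.random() < rate
            if (peek and i in checkpoints) or i == n_per_group:
                if p_value(conv_a, i, conv_b, i) < 0.05:
                    significant += 1
                    break  # stop the test at the first "significant" look
    return significant / sims


print(f"Single look at the planned sample: {aa_false_positive_rate(peek=False):.1%}")
print(f"Stop at first p < 0.05 over 20 looks: {aa_false_positive_rate(peek=True):.1%}")
```

With a single look, roughly 5% of A/A tests come out "significant", as designed; with repeated looks and early stopping, that share ends up several times higher. Sequential testing methods, which adjust the threshold at each look, are the standard way to allow early stopping safely.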

Reporting and Analysis

A/B testing isn’t just about collecting data; it’s about extracting actionable insights. Thorough reporting and analysis are crucial to understanding if the test duration was sufficient and to make informed decisions based on the results. Effective analysis helps identify statistically significant differences between variations, justifying conclusions and informing future strategies.

Effective analysis goes beyond simply observing numbers. It requires careful consideration of the data collected, the chosen metrics, and the context of the test itself.

This ensures that the conclusions drawn are reliable and relevant to the business objectives.

Analyzing Data for Sufficient Duration

Understanding if the test duration was sufficient hinges on a robust analysis of the collected data. This involves scrutinizing key metrics and assessing their trends over the test period. A critical aspect of this analysis is determining if the data shows a clear pattern or if the results are still fluctuating. Fluctuating results might indicate the need for a longer test period to achieve statistical significance.

Determining Test Conclusion

Determining when to conclude an A/B test, even when exceeding initial estimates, requires a methodical approach. One common method is to use statistical significance tests, like the t-test or chi-squared test, to assess whether the observed differences between variations are likely due to chance or a genuine effect. These tests provide a measure of confidence in the results, enabling a more objective decision-making process.
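For a conversion metric, the chi-squared test mentioned above can be run on a 2×2 table of converted and not-converted counts. This is a minimal sketch of Pearson's test without continuity correction; the function name and counts are hypothetical. With one degree of freedom, the tail probability is erfc(√(χ²/2)), and the statistic equals the square of the pooled two-proportion z statistic. For a continuous metric such as average order value, a t-test would be used instead.

```python
from math import erfc, sqrt


def chi_squared_2x2(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pearson chi-squared test (no continuity correction) on a 2x2 table of
    converted / not-converted counts. Returns (statistic, p_value)."""
    observed = [[conv_a, n_a - conv_a], [conv_b, n_b - conv_b]]
    total = n_a + n_b
    row_totals = [n_a, n_b]
    col_totals = [conv_a + conv_b, total - conv_a - conv_b]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total  # count if no difference
            stat += (observed[i][j] - expected) ** 2 / expected
    # One degree of freedom: P(X > stat) = erfc(sqrt(stat / 2)).
    return stat, erfc(sqrt(stat / 2))


stat, p = chi_squared_2x2(500, 5_000, 575, 5_000)
print(f"chi-squared = {stat:.2f}, p = {p:.4f}")
```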

Key Metrics and Interpretation

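Tracking specific metrics is vital to monitoring the progress of an A/B test and evaluating its duration. Key metrics include conversion rates, average order value, bounce rates, and click-through rates. Interpreting these metrics in relation to the test duration means observing their trends over time. A sudden, sharp jump in one variation’s conversion rate is not by itself a sign that the test has run long enough; it can reflect a novelty effect or an external event and is worth investigating. A gap between variations that stays stable across full weekly cycles, once the planned sample size has been reached, is a much stronger signal that the duration was sufficient.

Conversely, if metrics remain flat or show no significant difference across variations after the planned sample size has been reached, the true effect is probably smaller than the test was designed to detect; extending the test helps only if you recalculate the sample size for that smaller effect and the extra time is affordable. Analyzing the rate of change in these metrics is as important as the absolute values.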
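One way to check whether the gap between variations has settled, rather than spiking early, is to compute the lift for each complete week separately. This sketch assumes you can export per-day (visitors, conversions) counts for each variation; the function name and the made-up data, in which the variant starts strong and then settles (a pattern often attributed to a novelty effect), are hypothetical.

```python
def weekly_lifts(daily_a: list[tuple[int, int]],
                 daily_b: list[tuple[int, int]]) -> list[float]:
    """Relative lift of B over A for each complete 7-day week.

    Each list holds one (visitors, conversions) pair per day; a trailing
    partial week is ignored so every week covers all seven weekdays.
    """
    lifts = []
    full_weeks = min(len(daily_a), len(daily_b)) // 7
    for w in range(full_weeks):
        week = slice(7 * w, 7 * w + 7)
        rate_a = sum(c for _, c in daily_a[week]) / sum(v for v, _ in daily_a[week])
        rate_b = sum(c for _, c in daily_b[week]) / sum(v for v, _ in daily_b[week])
        lifts.append(rate_b / rate_a - 1)
    return lifts


# Made-up data: A converts at 10% throughout; B starts at 13% and settles at 11%.
control = [(1_000, 100)] * 21
variant = [(1_000, 130)] * 7 + [(1_000, 110)] * 14
for week, lift in enumerate(weekly_lifts(control, variant), start=1):
    print(f"Week {week}: lift {lift:+.1%}")
```

Here the first week alone would suggest a 30% lift, while the later weeks settle at about 10%; a decision based only on the first week would overstate the effect.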

Reporting to Stakeholders

Reporting on the test duration to stakeholders should be clear, concise, and actionable. A report should outline the initial test duration estimate, the reasons behind it (e.g., statistical power calculations), and any deviations from the plan. If the test was extended, explain why, including the observed trends in key metrics that prompted the extension.

Present the findings from statistical significance tests in a clear and accessible manner.

For example, if a t-test showed a statistically significant difference with a p-value of 0.05 or lower, clearly communicate this to stakeholders. This level of clarity ensures that stakeholders understand the rationale behind the conclusions and can use the information to make informed decisions. Avoid overly technical jargon and focus on the implications for business outcomes. A table summarizing key metrics, test duration, and statistical significance results is an effective way to present data concisely.

| Metric | Variation A | Variation B | Statistical Significance |
|---|---|---|---|
| Conversion Rate | 10% | 12% | p<0.05 |
| Average Order Value | $50 | $55 | p>0.05 |

This table presents a simple example. In a real-world scenario, the table would include more detailed data and context, such as the duration of the test, sample size, and specific statistical tests performed.

Closure

In conclusion, determining the optimal duration for an A/B test requires careful consideration of multiple factors, from the desired effect size to external influences. By understanding the statistical principles, recognizing potential external factors, and implementing practical strategies, you can ensure your A/B tests deliver reliable results within a reasonable timeframe. Remember to continuously monitor your test, analyze data, and adjust your duration as needed.

This approach will lead to more informed decisions and a greater return on investment.