How Does Duplicate Content Affect SEO

How does duplicate content affect SEO? This deep dive explores the multifaceted impact of identical content on search engine rankings. From unintentional redundancy to deliberate copying, we’ll dissect the various forms of duplicate content, examining their effects across different platforms, including websites, social media, and e-commerce. We’ll uncover the strategies search engines employ to identify and handle duplicate content, and discuss the implications for website traffic and overall user experience.

Learn how to prevent duplicate content issues, implement technical solutions like canonical tags and redirects, and create unique content strategies to avoid problems.

Understanding the nuances of duplicate content is crucial for maintaining a strong online presence. We’ll analyze the different types of duplicate content, from identical content on multiple pages to near-identical content, and show how these variations impact search engine crawlers. We’ll also explore how different content types, like product listings and blog posts, are affected differently, and provide practical examples and strategies to address these issues.

By the end, you’ll have a clear understanding of the dangers of duplicate content and the strategies needed to avoid them.

Understanding Duplicate Content

Duplicate content, a common pitfall, occurs when substantial portions of text or code are identical across multiple web pages. This can stem from various sources, impacting search engine rankings and potentially harming a website’s credibility. Understanding the different forms of duplicate content, their implications, and how to identify them is crucial for maintaining a healthy SEO strategy.

Various Forms of Duplicate Content

Duplicate content isn’t always deliberate. It can arise from unintentional errors or seemingly innocent practices. Understanding these variations is essential for effective management. Different types of duplicate content have varying degrees of negative impact on search rankings.

- Exact Duplicate Content: This involves identical text and code on multiple pages. It is the clearest form of duplication, often arising from content management system (CMS) errors, content reuse across different platforms, or accidental copying. For example, a blog post published in full on a website’s homepage and again on a category page, without any modifications, constitutes exact duplication. The search engine may not know which version to rank, which can split ranking signals between the copies or surface a version you did not intend.

- Near-Duplicate Content: This form features similar but not identical content, typically produced by minor changes to existing text, such as swapping in synonyms or rephrasing sentences. It often results from automated content generation or paraphrasing tools, or from reusing articles with slight modifications to avoid copyright issues.

While not as obviously problematic as exact duplicates, near-duplicate content can still be detected by search engines and filtered or demoted. For instance, if two articles on the same topic use 90% of the same text with minor changes, they are likely to be treated as near-duplicates.

- Content Syndication: This involves publishing the same content on multiple websites, often with attribution. While not always detrimental, excessive syndication without appropriate measures can lead to issues with search engines perceiving the content as duplicate. Republishing articles on multiple platforms, especially without significant edits, is a form of content syndication. Syndication with proper attribution, along with the presence of unique content on the original site, mitigates this risk.

- Dynamically Generated Content: This is content created on the fly based on user input or parameters. If not managed correctly, it can produce numerous pages with identical or near-identical content, harming SEO. E-commerce sites, for instance, generate product pages from a product database. If the same information is repeated for many products without modification, it can be considered duplicate content. Similarly, sorting, filtering, or tracking parameters can serve the same listing under many different URLs.

Dynamic content should be properly structured and differentiated to prevent this issue.
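One practical way to catch parameter-driven duplicates is to normalize URLs before comparing them. The following is a minimal Python sketch, not a production crawler. The `IGNORED_PARAMS` set is a hypothetical list of tracking and sorting parameters. The sketch also assumes that trailing slashes and letter case in the host do not change the content on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: query parameters that only affect tracking or ordering,
# not the content itself. Adjust this set for your own site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sort", "sessionid"}


def normalize_url(url: str) -> str:
    """Collapse URL variants that (by assumption) serve the same content."""
    parts = urlsplit(url)
    # Drop ignored parameters and sort the rest, so ?a=1&b=2 and ?b=2&a=1 match.
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # Assumption: a trailing slash does not change the page.
    path = parts.path.rstrip("/") or "/"
    # Lowercase scheme and host; drop the #fragment, which never reaches the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), ""))


def group_variants(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalized URL to the raw URLs that collapse onto it (2+ only)."""
    groups: dict[str, list[str]] = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    return {key: raw for key, raw in groups.items() if len(raw) > 1}
```

Consider a list containing these three URLs:

- `https://www.example.com/product/red-shoes`
- the same URL with `?utm_source=newsletter`
- `https://www.example.com/product/red-shoes/?sort=price`

`group_variants` collapses all three onto `https://www.example.com/product/red-shoes`. Each group it returns is a candidate for a canonical tag or a 301 redirect.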

Impact on Search Engine Rankings

Search engines, particularly Google, prioritize unique and high-quality content. Duplicate content negatively affects rankings by making it difficult for search engines to determine which version of the content is most relevant to a user’s search query. This ambiguity can lead to lower rankings for all affected pages. Accidental duplication usually results in search engines filtering out some versions. Intentional, manipulative duplication can lead to penalties that reduce the website’s overall visibility and traffic.

Identifying Intentional vs. Unintentional Duplication

Distinguishing between unintentional and intentional duplicate content can be challenging. Unintentional duplication often stems from technical errors, while intentional duplication is a deliberate attempt to manipulate search rankings. Identifying the cause is key to resolving the issue. Manual checks of website content, comparing pages for similarities, and examining sitemaps can help uncover duplicate content.

| Type of Duplicate Content | Description | Example | Impact |
|---|---|---|---|
| Exact Duplicate | Identical content across multiple pages. | Same blog post on homepage and category page. | Lower rankings, potential penalties. |
| Near-Duplicate | Similar content with minor variations. | Two articles on the same topic with 90% overlap. | Lower rankings, potential penalties. |
| Content Syndication | Content republished on multiple sites. | Republishing a news article on several news portals. | Lower rankings, especially if not properly attributed. |
| Dynamically Generated Content | Content generated based on user input. | Product pages in an e-commerce store. | Lower rankings if not properly differentiated. |
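Comparing pages for similarities can be partly automated. A common approach to near-duplicate detection works in two steps. First, break each page into overlapping word sequences ("shingles"). Then measure the overlap between pages with Jaccard similarity.

The sketch below assumes you already have each page’s plain text, keyed by URL. The 0.9 threshold is an illustrative value to tune, not a known search-engine cutoff.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences ("shingles") in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts: treat the whole text as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.9, k: int = 3):
    """Yield (url_a, url_b, score) for page pairs at or above the threshold."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    # Compare every pair once; sorting keeps the output order stable.
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            yield a, b, score
```

Changing one word alters up to k shingles, so scores fall faster than a simple "percent of words changed" figure would suggest. Tune the threshold on known pairs from your own site.

The pairwise loop is quadratic in the number of pages. For large sites, techniques such as MinHash approximate the same similarity more cheaply.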

Implications of Duplicate Content

Duplicate content, while seemingly innocuous, poses significant challenges for websites seeking to rank well in search engine results. Understanding its implications is crucial for maintaining a healthy online presence and attracting organic traffic. Search engines like Google prioritize unique and valuable content, and duplicate content can hinder this process. Search engines may interpret large amounts of duplicate content as a sign of low quality, and deliberate copying as an attempt to manipulate rankings.

This perception can lead to penalties that negatively impact website rankings and overall performance. By understanding how search engines identify and penalize duplicate content, website owners can take proactive steps to mitigate the risks and safeguard their online visibility.

Negative Impact on Search Engine Crawlers

Search engine crawlers, the automated bots that explore the web, encounter significant challenges when faced with duplicate content. They are designed to index unique content, and duplicate content confuses these bots. The bots might spend time crawling and indexing identical or near-identical pages, wasting resources. This ultimately dilutes the search engine’s understanding of the site’s overall value. The crawler may struggle to determine which version of the content is most authoritative or relevant.

Furthermore, duplicate content can lead to a diluted backlink profile, as the search engine may not be able to correctly attribute the backlinks to the correct and most relevant page.

Strategies Used by Search Engines to Identify and Penalize Duplicate Content

Search engines employ sophisticated algorithms to identify duplicate content. These algorithms analyze various factors, including the content’s similarity to other pages, the source of the content, and the context surrounding the duplicate content. If the search engine detects significant overlap across multiple pages, it typically chooses one version to show and filters out the rest. Where the duplication looks like a deliberate attempt to manipulate rankings, it may be treated as a violation of the search engine’s quality guidelines. The consequences then range from reduced rankings to complete removal from search results.

The strategies used by search engines include analyzing text similarity, comparing HTML structure, and examining the content’s source. Search engines are continuously refining their algorithms to detect more subtle forms of duplicate content, making proactive measures for website owners crucial.
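The simplest form of text-similarity analysis is exact matching. Strip the markup, normalize whitespace and case, and hash what remains. Two pages with different HTML but the same visible text then produce the same fingerprint.

Below is a minimal sketch using only Python’s standard library. It assumes the input is the raw HTML of each page in a hypothetical `pages` dictionary keyed by URL. It is not a description of how any search engine implements this.

```python
import hashlib
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def content_fingerprint(html: str) -> str:
    """Hash the normalized visible text so markup-only differences are ignored."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse all whitespace runs and lowercase before hashing.
    text = re.sub(r"\s+", " ", " ".join(parser.parts)).strip().lower()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose visible text is identical."""
    groups: dict[str, list[str]] = {}
    for url, html in pages.items():
        groups.setdefault(content_fingerprint(html), []).append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Pages that land in the same group are exact duplicates as far as their visible text is concerned. Near-duplicates need a similarity measure rather than a hash.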

Even utility pages count. A well-crafted thank you page, shown after a purchase or form submission, improves user experience and your site’s credibility. But if it is a carbon copy of other pages on your site, it adds to the problem of duplicate content.

Potential Consequences for Website Rankings

The consequences of duplicate content can range from minor to severe. A website with substantial duplicate content may experience a drop in search engine rankings, making it more challenging to attract organic traffic. This drop can significantly reduce the website’s visibility and, consequently, its potential customers or users. In severe cases, such as sites built largely on scraped or copied content, the website can be removed from search results entirely, leaving it almost no way to generate search traffic.

The penalty’s severity often depends on the extent and nature of the duplication.

Correlation between Duplicate Content and Decreased Organic Traffic

Duplicate content often leads to a noticeable decrease in organic traffic. When search engines struggle to distinguish between similar pages, they may not display the website in search results, leading to a decline in organic visits. Furthermore, users may be less likely to click on results that display identical or similar content, further diminishing the website’s visibility. This correlation underscores the importance of maintaining unique and high-quality content across all website pages.

Websites with diverse, unique, and relevant content are better positioned to attract and retain organic traffic.

Table Comparing Duplicate Content Scenarios and Potential Penalties

| Scenario | Description | Potential Impact | Mitigation Strategies |
|---|---|---|---|
| Exact Duplicate | Identical content across multiple pages. | Significant ranking drop, possible removal from search results. | Identify and remove duplicate pages, ensure unique content on each page. |
| Near-Duplicate | Content with high similarity, but not identical. | Lower ranking, reduced visibility. | Revise content to make it unique, focus on original research and perspectives. |
| Content Syndication | Content published on multiple sites without proper attribution. | Potential for penalties depending on the degree of attribution. | Obtain proper licenses and permissions, ensure clear attribution. |
| Dynamically Generated Content | Content generated by scripts, with little or no unique content. | Ranking drops, low user engagement. | Ensure unique content generation, employ canonical tags. |

Duplicate Content on Different Platforms

Duplicate content isn’t just a problem on a single website; it can spread across multiple platforms and significantly undermine your SEO efforts. This often goes unnoticed, leading to ranking problems and a diminished online presence. Understanding the ramifications of duplicate content on different platforms is crucial for maintaining a strong SEO strategy.

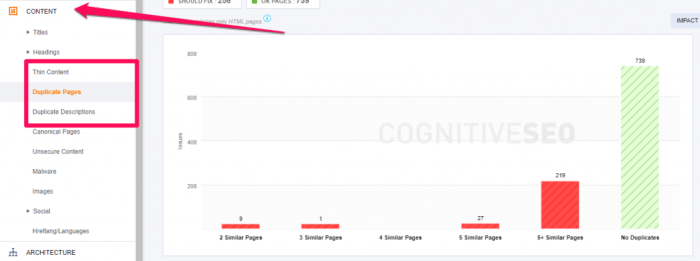

Impact of Duplicate Content Across Website Pages

Duplicate content on different pages of a single website is a common pitfall. Search engines often struggle to identify the most authoritative version of a page, potentially leading to a decrease in rankings for all affected pages. This is especially true if the duplicate content is virtually identical or very similar. For example, if a product description is repeated across multiple product pages, search engines might not understand which page is the most valuable.

This can lead to a diluted search presence and a negative impact on overall SEO performance.

Effects of Duplicate Content on Multiple Social Media Platforms

Duplicate content on social media platforms does not affect website rankings in the same way, but it can still limit your brand’s reach and overall visibility. Sharing identical or near-identical content across various social media channels dilutes the value of each post. Audiences who follow you on several platforms may also tune out repeated posts, which can reduce engagement on your profiles.

Consequences of Duplicate Content on Product Listings vs. Blog Posts

The impact of duplicate content varies based on the type of content. Duplicate product listings on an e-commerce site can harm SEO by confusing search engines. Those engines may struggle to identify the most accurate and up-to-date product information, potentially leading to lower rankings. Duplicate blog posts are usually less damaging than duplicate product listings, but they can still hurt SEO, especially if the content is identical or near-identical.

In both cases, the negative impact stems from search engines having difficulty determining which version to prioritize.

Preventing Duplicate Content Issues on E-commerce Websites

E-commerce websites are particularly susceptible to duplicate content issues due to the large volume of product listings. Implementing robust content management strategies is key. Dynamically generated product pages need unique content, such as distinct product descriptions, images, and wording. Also, using canonical tags to point search engines to the preferred version of a page can significantly improve SEO.

Table Outlining Different Website Sections Where Duplicate Content Can Arise

| Platform | Example | Description | Prevention Strategy |
|---|---|---|---|
| Website Pages | Multiple product pages with identical descriptions | Search engines may struggle to identify the authoritative version of a page. | Use canonical tags to specify the preferred version. |
| Social Media | Sharing the same blog post across multiple social media platforms without modifications. | Dilutes the value of each post. | Adapt the content to suit the specific platform and target audience. |
| Product Listings | Multiple listings with identical descriptions and images | Search engines struggle to identify the most relevant version. | Implement unique descriptions, images, and even slight variations in wording. |
| Blog Posts | Publishing the same or similar content on different pages or platforms. | Search engines may rank the content lower, reducing visibility. | Ensure every post has unique content. |

Content Strategies to Avoid Duplicate Content

Avoiding duplicate content is crucial for SEO success. Search engines tend to filter out or demote identical and near-identical content, hindering a site’s ability to rank well. This often results in decreased visibility and reduced organic traffic. A strategic, proactive approach to creating unique content and adapting it across platforms is essential for maintaining a healthy SEO profile and a strong online presence.

This involves not only creating original content but also adapting existing material for different platforms and target audiences without sacrificing its value or authenticity. Careful planning and implementation are vital to ensure that every piece of content contributes positively to the website’s overall health.

Unique Content Planning for Every Page

Creating a comprehensive content strategy is paramount for ensuring every page on a website offers unique and valuable information. A detailed plan should encompass the target audience, specific keywords, and the overall message the page aims to convey. This includes conducting thorough keyword research and mapping each page to a specific theme. Understanding the unique value proposition of each page is essential to avoid redundant or repetitive content.
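A keyword map makes it easy to spot two pages competing for the same query before either is written. As a sketch, assume the plan is a simple dictionary from page URL to its primary keyword. This is a hypothetical structure; real plans usually track more fields. The check only inspects your own plan and says nothing about how search engines rank the pages.

```python
from collections import defaultdict


def find_keyword_overlaps(page_keywords: dict[str, str]) -> dict[str, list[str]]:
    """Return each primary keyword assigned to more than one page, with its pages."""
    owners: dict[str, list[str]] = defaultdict(list)
    for url, keyword in page_keywords.items():
        # Normalize case and spacing so "Red Shoes" and "red  shoes" collide.
        owners[" ".join(keyword.lower().split())].append(url)
    return {kw: sorted(urls) for kw, urls in owners.items() if len(urls) > 1}


# Hypothetical content plan: page URL -> primary keyword.
plan = {
    "/red-shoes": "Red Shoes",
    "/shoes/red": "red  shoes",
    "/blue-shoes": "blue shoes",
}
print(find_keyword_overlaps(plan))  # {'red shoes': ['/red-shoes', '/shoes/red']}
```

Two pages that share a primary keyword are the ones most likely to drift into near-duplicates. You can resolve the overlap by merging them or by giving each a distinct angle.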

Content Adaptation Strategies Across Platforms

Maintaining a consistent brand voice and message while adapting content for different platforms requires careful consideration. Content duplication can be avoided by tailoring the tone, style, and length of content to match the platform’s specific audience and format. Social media posts, for example, often benefit from shorter, more engaging formats compared to blog posts or in-depth articles. Visual elements and interactive components should also be strategically integrated to enhance engagement and avoid repetitive content.

Unique Content Creation and Adaptation

A key aspect of avoiding duplicate content is creating unique content tailored to each platform. This involves understanding the nuances of different platforms and adjusting the content to resonate with their specific audience. For instance, a blog post might be adapted into shorter social media snippets, or an infographic could be transformed into a more detailed article. By focusing on the unique value proposition of each platform, content creators can avoid redundancy and maintain high engagement.

Adapting Content for Different Target Audiences

Understanding different target audiences and their specific needs is crucial in avoiding content duplication. Tailoring content to resonate with each audience segment ensures that the message remains engaging and valuable. While maintaining the core message, content should be adapted to reflect the specific vocabulary, interests, and concerns of each audience. Researching audience preferences and tailoring content accordingly is essential for avoiding duplication and promoting engagement.

Content Creation Process for Diverse Platforms and Website Sections

Implementing a structured process for creating unique content is critical for managing the creation and adaptation across various platforms and website sections. This includes establishing clear guidelines for content creation, adaptation, and approval. Using a content calendar can help ensure that content is planned and scheduled strategically, maintaining a steady stream of unique content across different channels. A content review process should be implemented to ensure accuracy and originality.

Content Adaptation Strategies Table

| Platform | Strategy | Example | Benefits |
|---|---|---|---|
| Social Media (Twitter) | Shorten blog post excerpts; use relevant hashtags | Summarize a blog post about sustainable living into a series of tweets highlighting key tips and using hashtags like #sustainability #ecofriendly | Increased engagement; broader reach; enhanced visibility |
| Blog | Expand social media snippets into in-depth articles; add unique insights | Take a tweet about a new product and create a blog post exploring its features, benefits, and potential use cases in more detail. | Increased traffic; enhanced SEO; deeper engagement |
| Email Newsletters | Repurpose blog posts; include exclusive content | Send a summary of the most recent blog posts with additional insights or exclusive information not shared publicly. | Increased email open rates; nurtured leads; enhanced brand loyalty |
| Infographics | Convert blog posts into visual representations; add interactive elements | Transform a blog post about the history of a specific technology into an infographic showcasing key timelines and developments. | Improved readability; enhanced understanding; wider audience appeal |

Technical Solutions for Duplicate Content Issues

Duplicate content can severely harm your website’s search engine rankings. Fortunately, several technical solutions can effectively combat this issue, allowing you to maintain a strong online presence. These strategies modify your website’s architecture and code so that search engines index the preferred version of your content, ultimately improving your SEO performance. Implementing them is crucial for a healthy website.

By strategically using canonical tags, robots.txt files, redirects, and hreflang tags, you can significantly improve your site’s SEO. This proactive approach ensures your valuable content is accurately represented in search results, leading to enhanced visibility and increased organic traffic.

Canonical Tags

Canonical tags are HTML elements that tell search engines which version of a page is the preferred one. Search engines such as Google treat them as a strong hint rather than a binding directive. They therefore work best when your internal links and sitemap point to the same preferred URL. Using them effectively is vital for managing duplicate content across different URLs. A well-implemented canonical tag system helps search engines understand your site’s content structure. It consolidates ranking signals on one URL instead of splitting them across multiple versions of the same page.

- Proper Implementation: The canonical tag is a `<link>` element placed within the `<head>` section of the HTML document. It points to the authoritative URL using the `rel="canonical"` attribute. For example, suppose you have a page available at `https://www.example.com/product/red-shoes` and a duplicate at `https://www.example.com/product/red-shoes?color=red`. You would add `<link rel="canonical" href="https://www.example.com/product/red-shoes">` to the `<head>` of the duplicate page.

- Specificity: It’s essential to ensure that canonical tags are used consistently and accurately across your site. This avoids confusion for search engines and helps them index the correct page versions.
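To audit canonical tags at scale, you can parse each page’s HTML and check two things: that it declares exactly one canonical URL, and that the URL points where you expect. The sketch below uses Python’s built-in `html.parser`. The `check_canonical` function and its return strings are illustrative, not part of any SEO tool’s API.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = dict(attrs)
        # rel can hold several space-separated values; attribute values may be None.
        rel_values = (attrs.get("rel") or "").lower().split()
        if "canonical" in rel_values and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def check_canonical(page_url: str, html: str, expected: str) -> str:
    """Report whether a page declares exactly one canonical matching `expected`."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"  # conflicting signals; search engines may ignore them
    # Resolve a relative href against the page URL before comparing.
    actual = urljoin(page_url, finder.canonicals[0])
    return "ok" if actual == expected else f"points to {actual}"
```

Take the duplicate URL `https://www.example.com/product/red-shoes?color=red`, with an expected value of `https://www.example.com/product/red-shoes`. A page containing `<link rel="canonical" href="/product/red-shoes">` returns `"ok"`, because the relative href is resolved against the page URL first.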

Robots.txt Files

A robots.txt file tells search engine crawlers which parts of your website they should or shouldn’t crawl. By excluding low-value sections, such as directories full of duplicated or auto-generated pages, you can keep crawlers focused on the content you want ranked.

- Preventing Crawling: You can use the robots.txt file to stop search engine crawlers from accessing specific pages or directories containing duplicate content. A simple example is a group made of the line `User-agent: *` followed by `Disallow: /duplicate-content/`, which asks all crawlers to skip everything under that directory.

- Important Considerations: robots.txt controls crawling, not indexing, so it does not guarantee that content stays out of search results. A blocked URL can still be indexed if other pages link to it. Crawlers also cannot see a canonical tag or `noindex` directive on a page they are not allowed to fetch. For most duplicate pages, a canonical tag or a 301 redirect is therefore the better fix.
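Before deploying robots.txt rules, it helps to confirm which URLs they actually block. Python’s standard `urllib.robotparser` can evaluate rules supplied as a string. The rules and URLs below are hypothetical examples built around the `/duplicate-content/` directory used above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: every crawler is asked to skip /duplicate-content/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /duplicate-content/
"""


def crawl_status(robots_txt: str, urls: list[str], user_agent: str = "*") -> dict[str, bool]:
    """Return {url: True if the rules allow user_agent to crawl it}."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network fetch is needed.
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


for url, allowed in crawl_status(ROBOTS_TXT, [
    "https://www.example.com/duplicate-content/old-post",
    "https://www.example.com/blog/original-post",
]).items():
    print(url, "->", "crawlable" if allowed else "blocked")
```

Here the first URL is blocked and the second is crawlable. This tells you only what crawlers may fetch, not what ends up in the index.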

301 Redirects

301 redirects permanently redirect one URL to another. They’re a powerful tool for managing duplicate content by pointing users and search engines to the preferred version of a page.

- Permanent Redirection: Using a 301 redirect is crucial for preserving SEO value, such as link equity, when you change URLs. It tells search engines to treat the new URL as the permanent home of that content.

- Example: If a page with duplicate content is located at `https://www.example.com/old-product`, you can redirect it to `https://www.example.com/new-product` with a 301 redirect. This ensures that search engines and users are seamlessly directed to the updated location.
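In practice, 301 redirects are usually configured in the web server or through a CMS plugin. To show what a permanent redirect looks like at the HTTP level, here is a minimal sketch written as a Python WSGI application. The `REDIRECTS` mapping is hypothetical and reuses the example paths above.

```python
# Hypothetical redirect map: old or duplicate paths -> preferred paths.
REDIRECTS = {
    "/old-product": "/new-product",
}


def app(environ, start_response):
    """Minimal WSGI app that answers mapped paths with a permanent redirect."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 Moved Permanently: clients and crawlers should use the new URL from now on.
        start_response("301 Moved Permanently", [("Location", target), ("Content-Length", "0")])
        return [b""]
    body = f"Content for {path}".encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves the app on port 8000. Requesting `/old-product` then returns status 301 with a `Location: /new-product` header.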

Hreflang Tags

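Hreflang tags are essential for multilingual and multiregional websites. They specify the language, and optionally the region, of each version of a page. Search engines can then tell that the versions are alternates of one another rather than competing duplicates. This matters most when versions are near-identical, such as English pages for the US and the UK.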

- Multilingual Support: Hreflang tags provide search engines with crucial information about the different language versions of your content, enabling them to display the appropriate version to users based on their location and language preferences.

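- Example: If your website has English and Spanish versions, you’d add hreflang tags to each version that list both languages, including a reference to the page itself. This helps search engines show Spanish-speaking users the Spanish page instead of treating the two versions as competing duplicates.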
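Every language version should list all alternates, including itself, which makes hreflang tags easy to get out of sync by hand. A small generator keeps them consistent. This Python sketch assumes hypothetical `/en/` and `/es/` URL paths and an optional `x-default` fallback page.

```python
from html import escape
from typing import Optional


def hreflang_links(versions: dict[str, str], x_default: Optional[str] = None) -> str:
    """Build the <link rel="alternate" hreflang=...> block for one page.

    `versions` maps a language or language-region code (e.g. "en", "es", "en-GB")
    to the URL of that version. The same block, including the page's own entry,
    belongs in the <head> of every version listed.
    """
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in sorted(versions.items())
    ]
    if x_default:
        # Fallback for users whose language matches none of the listed versions.
        lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return "\n".join(lines)


print(hreflang_links(
    {"en": "https://www.example.com/en/", "es": "https://www.example.com/es/"},
    x_default="https://www.example.com/",
))
```

Output the same block in the `<head>` of every listed version so the annotations point back at each other. Search engines may ignore hreflang links that are not reciprocated.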

Comparison of Technical Solutions

The effectiveness of these technical solutions depends on the specific circumstances of the duplicate content issue. Canonical tags are generally suitable for minor duplicate content issues like those arising from query strings. Robots.txt is more appropriate for preventing crawling of entire directories or sections of the site with duplicate content. 301 redirects are essential for handling permanent URL changes and managing duplicate content.

Hreflang tags are specifically designed for multilingual sites.

Technical Solutions Table

| Solution | Description | Implementation | Benefits |
|---|---|---|---|
| Canonical Tags | Specify the preferred version of a page to search engines. | Add a `<link rel="canonical">` element pointing to the preferred URL in the `<head>` of the duplicate page. | Consolidates ranking signals on the preferred URL, maintains SEO value. |
| Robots.txt | Prevent search engine crawlers from accessing specific content. | Edit the robots.txt file to disallow access to duplicate content. | Prevents crawling of unwanted content; does not by itself keep URLs out of the index. |
| 301 Redirects | Permanently redirect users and search engines from one URL to another. | Configure redirects using web server configurations or plugins. | Maintains SEO value, directs users and search engines to the correct page. |
| Hreflang Tags | Specify language and region for multilingual content. | Add hreflang tags to pages with different language versions. | Avoids duplicate content issues for different language versions, improves international SEO. |

Impact on User Experience

Duplicate content significantly harms user experience, often leading to frustration and a decline in engagement. Users expect fresh, original content that provides value. When presented with repeated information, their trust in the site and its credibility diminishes. This negatively impacts the overall user journey and discourages repeat visits.

Users often notice when content is not unique. Near-identical or word-for-word repeated passages can leave them feeling misled or cheated. This negative impression undermines the user’s experience and may dissuade them from exploring further content on the website.

User Frustration and Reduced Engagement

Users are often put off by duplicate content, perceiving it as lazy or unprofessional. This can lead to a decrease in user engagement metrics such as time spent on site, page views, and ultimately, conversions. The feeling of being presented with recycled information can be a major deterrent to further interaction.

Impact on Trust and Credibility

Users form opinions about a website based on the content they encounter. Duplicate content can damage the perceived credibility and trustworthiness of a site. When users find repeated information, they might assume the site lacks originality, research, or commitment to quality, potentially impacting the site’s reputation.

Loss of User Retention

Unique, high-quality content is crucial for user retention. When users find value in the information presented, they are more likely to return to the site and engage with future content. This repeat engagement fosters loyalty and builds a strong relationship between the user and the website. The absence of original content can drive users away.

Examples of Unique Content Enhancing User Satisfaction

Unique content can manifest in various forms. A blog post delving into a specific topic with original research and insights offers a superior user experience compared to a simple rehash of existing articles. Similarly, a well-researched product review that provides in-depth analysis and comparisons is far more valuable than a superficial, copied description. These examples demonstrate how original content strengthens user satisfaction.

High-Quality Content and Engagement Metrics

High-quality, unique content directly impacts user engagement metrics. Users who find the content informative, insightful, and engaging are more likely to spend more time on the site, interact with other content, and potentially make purchases or take other desired actions. This is why content quality tends to show up directly in engagement metrics.

User Reactions to Duplicate Content

Users react to duplicate content in various ways. They might perceive it as a lack of effort or even a sign of spam. This negative perception can deter users from interacting further with the website. This is often reflected in a drop in page views, reduced time on site, and a decrease in conversion rates.

Conclusion

In conclusion, duplicate content can severely damage a website’s performance, impacting organic traffic and search engine rankings. This comprehensive guide has covered various aspects of duplicate content, including its types, implications, and prevention strategies. By understanding how different platforms and content types are affected, you can implement proactive measures to ensure unique and high-quality content that aligns with search engine guidelines.

Remember, maintaining unique content is vital for both search engine optimization and delivering a positive user experience. From content strategies to technical solutions, we’ve provided actionable insights to mitigate the risks and improve your website’s visibility and user engagement.