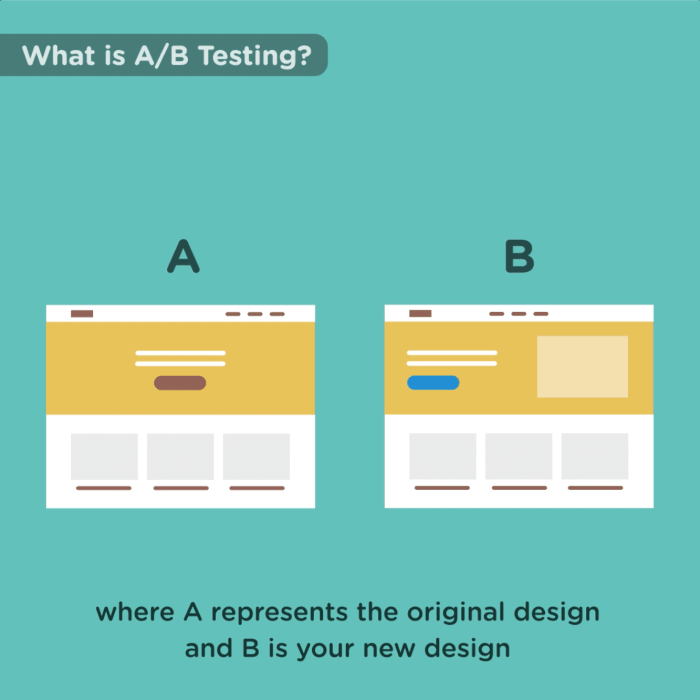

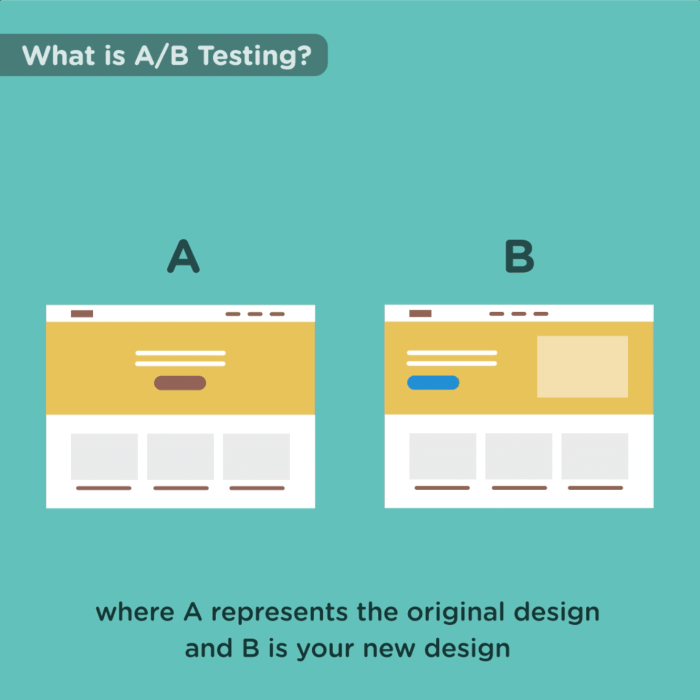

A/B testing is a crucial element in optimizing online experiences. It is a method for comparing different versions of a webpage, app, or marketing campaign to determine which performs best. Understanding the process, from setup to analysis, is key to maximizing results and making data-driven decisions.

This comprehensive guide will explore the entire A/B testing journey, covering everything from the fundamental principles to advanced strategies. We’ll delve into the different types of tests, how to design a winning test plan, and the crucial steps in running and analyzing results. Case studies and examples will illustrate the real-world applications and impact of A/B testing.

Setting up an A/B Test

A/B testing is a powerful tool for optimizing website performance and user experience, but it only produces reliable results when the test is well structured. Careful planning and execution ensure that the insights gained accurately reflect the impact of the changes and lead to sound, data-driven decisions. Successful A/B testing relies on a methodical approach that considers several factors.

This includes defining clear hypotheses, selecting appropriate metrics, and collecting a sample large enough to detect a meaningful difference.

In short, A/B testing means showing two versions of something, such as a webpage or an ad, to different groups of people to see which performs better. It helps you understand what resonates with your audience and improve conversions. It can also support customer retention by identifying elements that encourage repeat business, which is key to long-term success; tweaking your website's layout or calls to action to boost engagement is a typical example.

Ultimately, understanding what motivates your customers through rigorous A/B testing can improve your bottom line.

Choosing the Right Metrics

Defining the key performance indicators (KPIs) that accurately reflect the success of the experiment is paramount. Choosing the right metrics directly impacts the interpretation of results. Metrics should align with the specific objectives of the test. For instance, if the goal is to improve conversion rates, metrics like conversion rate, click-through rate (CTR), and bounce rate should be tracked.

Alternatively, if the focus is on reducing cart abandonment, metrics such as the cart abandonment rate, average order value, and time spent on the checkout page should be monitored. A good metric should be quantifiable, relevant to the experiment’s goals, and easily trackable.
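To make these definitions concrete, here is a minimal Python sketch that turns raw event counts for one variant into the metrics named above. The `VariantCounts` fields and the `summarize` helper are hypothetical names, not part of any analytics tool, and bounce rate is simplified by assuming one session per visitor.

```python
from dataclasses import dataclass


@dataclass
class VariantCounts:
    """Raw counts for one variant. Field names are hypothetical placeholders."""
    visitors: int            # unique users who saw the variant
    single_page_visits: int  # visitors who left without viewing a second page
    clicks: int              # clicks on the element under test (e.g. a CTA button)
    impressions: int         # times that element was shown
    carts_created: int       # visitors who added at least one item to the cart
    orders: int              # completed purchases
    revenue: float           # total revenue from those orders


def summarize(c: VariantCounts) -> dict[str, float]:
    """Convert raw counts into rate-style metrics (assumes one session per visitor)."""
    return {
        "conversion_rate": c.orders / c.visitors,
        "ctr": c.clicks / c.impressions,
        "bounce_rate": c.single_page_visits / c.visitors,
        "cart_abandonment_rate": 1 - c.orders / c.carts_created,
        "average_order_value": c.revenue / c.orders,
    }


# Placeholder numbers for a control group.
control = VariantCounts(visitors=10_000, single_page_visits=4_000, clicks=300,
                        impressions=10_000, carts_created=1_000, orders=500,
                        revenue=25_000.0)
print(summarize(control))
```

Running the same function for each variant yields directly comparable dictionaries; which of these metrics is the primary success measure is best fixed before the test starts.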

Creating a Test Plan

A comprehensive test plan ensures the integrity and reliability of the A/B test results. A well-structured plan lays out the details of the experiment, from the initial hypothesis to the expected duration, and provides a roadmap for executing the test and interpreting the results. The following table outlines the essential components of a robust test plan.

| Element | Description | Example |
|---|---|---|
| Hypothesis | A testable statement that predicts the outcome of the experiment. | “A new headline will increase conversion rates by 10%.” |
| Target Audience | The specific group of users the test will be conducted on. | Users who have visited the website in the past month. |
| Metrics | The key performance indicators used to measure the success of the test. | Conversion rate, bounce rate, average time on page. |
| Control Group | The group of users who experience the original version of the website or app. | Users who see the current headline. |
| Variant Group | The group of users who experience a modified version of the website or app. | Users who see the new headline. |
| Duration | The timeframe for running the test. | 4 weeks |
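The plan can also be captured as a small data structure so it is recorded before launch. The sketch below mirrors the table's rows; `TestPlan` and `plan_duration_days` are hypothetical names, and rounding the duration up to whole weeks (to cover weekday and weekend behavior) is an assumption, not a rule. The required sample per variant would come from the sample-size step discussed next; the numbers here are placeholders.

```python
import math
from dataclasses import dataclass


@dataclass
class TestPlan:
    """Hypothetical record of a test plan; fields mirror the table rows."""
    hypothesis: str
    target_audience: str
    metrics: list[str]
    control: str
    variant: str
    duration_days: int


def plan_duration_days(sample_per_variant: int, n_variants: int,
                       daily_visitors: int) -> int:
    """Days needed to reach the required sample, rounded up to whole weeks (assumption)."""
    raw_days = math.ceil(sample_per_variant * n_variants / daily_visitors)
    return math.ceil(raw_days / 7) * 7


plan = TestPlan(
    hypothesis="A new headline will increase conversion rates by 10%.",
    target_audience="Users who have visited the website in the past month",
    metrics=["conversion rate", "bounce rate", "average time on page"],
    control="current headline",
    variant="new headline",
    # Placeholder traffic figures: 8,155 users per variant, 1,000 eligible visitors a day.
    duration_days=plan_duration_days(sample_per_variant=8_155, n_variants=2,
                                     daily_visitors=1_000),
)
print(plan.duration_days)
```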

Importance of Sample Size Calculation

Calculating the appropriate sample size before the test starts is critical for drawing reliable conclusions. An insufficient sample size can lead to unreliable results, while an excessively large one wastes traffic and time. The calculation takes into account the baseline conversion rate, the smallest effect size you want to detect, the significance level (how much risk of a false positive you accept), and the statistical power (how likely you are to detect a real effect of that size). A larger sample generally produces more precise results, but it also increases the time and cost of the test.
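For the common case of comparing two conversion rates with equal traffic per variant and a two-sided test, one widely used normal-approximation formula for the number of users needed in each variant is given below. Here p_A is the baseline conversion rate, p_B the rate you want to be able to detect, alpha the significance level, 1 - beta the statistical power, and z_q the q-quantile of the standard normal distribution. It is one standard approximation, not the only method.

```latex
n = \frac{\left(z_{1-\alpha/2} + z_{1-\beta}\right)^{2}\,\left[p_A(1-p_A) + p_B(1-p_B)\right]}{\left(p_B - p_A\right)^{2}}
```

Because the difference p_B - p_A is squared in the denominator, halving the smallest effect you want to detect roughly quadruples the required sample, while raising the confidence level or the power enlarges the numerator.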

For example, a test with too small a sample might show a positive effect that is really just noise, or miss a real effect entirely, while a large sample gives a more accurate assessment of the impact. A statistical power analysis tool can help determine the appropriate sample size; the underlying formula can look complex, but online calculators simplify the process.
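If you prefer to compute the number yourself rather than use an online calculator, the following Python sketch applies the standard normal-approximation formula for a two-sided test of two proportions: the squared sum of the z-scores for the significance level and the power, times the summed variances of the two rates, divided by the squared difference between them. It uses only the standard library (`statistics.NormalDist`, Python 3.8+); `sample_size_per_variant` is a hypothetical helper name, and real tools may apply extra corrections.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_expected: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant for a two-sided two-proportion z-test."""
    if not (0 < p_baseline < 1 and 0 < p_expected < 1) or p_baseline == p_expected:
        raise ValueError("rates must lie strictly between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
    effect = p_expected - p_baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Baseline conversion of 5%; we want to be able to detect a lift to 6%.
n = sample_size_per_variant(0.05, 0.06)
print(n)
```

With these inputs the function returns 8,155 users per variant. Asking to detect a smaller lift, for example from 5% to 5.5%, pushes the requirement up sharply.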

Running and Analyzing the Test

Now that your A/B test is set up, it’s time to launch it and gather data. This crucial phase involves monitoring the test’s progress, ensuring data integrity, and eventually, drawing meaningful conclusions about which variation performs best. Proper analysis is key to informed decision-making, optimizing conversions, and ultimately, boosting your website’s effectiveness.

Running the Test

Running the A/B test involves serving both variations and carefully tracking how users interact with each. Monitor the test throughout this period to confirm that everything is functioning correctly and that the data needed for accurate analysis is being collected. Be prepared to address any technical issues or unexpected problems that arise. Crucially, keep the test environment consistent: avoid changing the variations or the audience mid-test, since that makes the results hard to interpret.
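One concrete monitoring check is whether traffic is actually splitting in the proportions you intended. A split that drifts noticeably from the planned allocation (often called a sample ratio mismatch) usually points to a bug in assignment or tracking rather than a real user effect. The minimal sketch below applies a chi-square goodness-of-fit test with one degree of freedom using only the standard library; the function name and the example counts are hypothetical.

```python
import math


def srm_p_value(control_users: int, variant_users: int,
                expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for the observed traffic split (1 df)."""
    total = control_users + variant_users
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (variant_users - expected_variant) ** 2 / expected_variant)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(5_000, 5_300))  # intended 50/50 split
```

A 5,000 vs 5,300 split under a planned 50/50 allocation yields a p-value well below 0.01, which is a signal to investigate the setup before trusting any conversion results.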

Interpreting Results

Interpreting the results of an A/B test involves more than just identifying the variation with the higher number. You also need to check whether the difference is statistically significant, because a slight difference in conversion rates may simply reflect random variation. Significance is usually assessed with a p-value: the probability of seeing a difference at least as large as the one observed if the variations actually performed the same.

A statistically significant result gives reasonable confidence that the observed difference is real rather than noise. Whether it is actionable also depends on whether the difference is large enough to matter for the business.
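For conversion rates, a common way to obtain that p-value is a two-proportion z-test. The sketch below is a minimal standard-library version under the normal approximation, which assumes reasonably large counts in each group (for small samples, an exact test such as Fisher's is more appropriate). The function name and the 10,000-users-per-variant counts are illustrative assumptions.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants share one conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # common rate assumed under H0
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical results: 5% vs 6% conversion with 10,000 users per variant.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=600, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these counts z is a little above 3 and the p-value is far below 0.05. The same one-point gap with only 1,000 users per variant would not reach significance, which is why sample size matters.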

Analyzing A/B Test Data

Analyzing data from an A/B test requires a structured approach. The primary goal is to identify patterns and trends that indicate which variation performs better. This process often involves calculating various statistical measures, evaluating their significance, and visualizing the findings in a clear and compelling way. Data visualization tools help to make complex data easily digestible, allowing for a more intuitive understanding of the results.

Common Statistical Measures

| Metric | Description | Interpretation | Example |
|---|---|---|---|
| Conversion Rate | The percentage of users who complete a desired action (e.g., making a purchase). | Higher conversion rate generally indicates better performance. | Variation A: 5%, Variation B: 6% |
| Click-Through Rate (CTR) | The percentage of users who click on a specific element (e.g., a button). | Higher CTR indicates better engagement. | Variation A: 2%, Variation B: 3% |
| Average Order Value (AOV) | The average value of orders placed. | Higher AOV suggests more valuable conversions. | Variation A: $50, Variation B: $55 |
| Statistical Significance (p-value) | The probability of obtaining results as extreme as those observed if there is no real difference between variations. | A low p-value (typically below 0.05) suggests a statistically significant difference. | p-value = 0.03 (significant difference), p-value = 0.15 (not significant) |
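When reporting differences like those in the table, it helps to state both the absolute difference and the relative lift, since the two are easy to confuse. This small sketch (the `lift` helper is a hypothetical name) computes both.

```python
def lift(control_value: float, variant_value: float) -> tuple[float, float]:
    """Return (absolute difference, relative lift) of a variant over the control."""
    absolute = variant_value - control_value
    relative = absolute / control_value
    return absolute, relative


# Conversion-rate row: 5% vs 6%.
abs_diff, rel_lift = lift(0.05, 0.06)
print(f"{abs_diff * 100:.1f} percentage points, {rel_lift:.0%} relative lift")
```

For the conversion-rate row, the gap is one percentage point, which is a 20% relative lift; for the AOV row, $50 to $55 is a $5 difference and a 10% relative lift.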

Data Visualization Methods

Visualizing A/B test results is crucial for understanding and communicating findings effectively. Different types of charts and graphs provide insights into the performance of variations. For example, bar charts are suitable for comparing conversion rates across variations, while line graphs can track performance over time. Choosing the right visualization tool depends on the specific data and the message you want to convey.

Example: A bar chart comparing conversion rates for two variations of a website button clearly shows which button leads to more conversions. A line graph depicting the CTR of both variations over time can reveal trends or patterns in user engagement.
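Before drawing a line graph of CTR over time, the daily counts need to be turned into a series. The sketch below computes a cumulative CTR (total clicks so far divided by total impressions so far), which tends to be less jumpy than raw day-by-day CTR; choosing cumulative rather than daily values is a presentation assumption. The daily counts are hypothetical, and the resulting lists can be passed to any charting library.

```python
from itertools import accumulate


def cumulative_ctr(daily_clicks: list[int], daily_impressions: list[int]) -> list[float]:
    """Running CTR after each day: total clicks so far / total impressions so far."""
    clicks_so_far = accumulate(daily_clicks)
    impressions_so_far = accumulate(daily_impressions)
    return [c / i for c, i in zip(clicks_so_far, impressions_so_far)]


# Hypothetical daily counts for two button variants.
ctr_a = cumulative_ctr([20, 25, 18, 22], [1000, 1100, 950, 1050])
ctr_b = cumulative_ctr([30, 28, 33, 31], [1000, 1050, 1000, 1020])
for day, (a, b) in enumerate(zip(ctr_a, ctr_b), start=1):
    print(f"day {day}: A {a:.2%}  B {b:.2%}")
```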

Key Considerations in A/B Testing

A/B testing, while a powerful tool, isn’t foolproof. Understanding the potential pitfalls and how to mitigate them is crucial for successful implementation. Ignoring external factors or misinterpreting results can lead to wasted resources and flawed conclusions. This section delves into vital considerations for robust and reliable A/B testing. Thorough planning, meticulous execution, and careful analysis are paramount for extracting actionable insights.

By understanding the potential pitfalls and applying the right strategies, you can maximize the effectiveness of your A/B tests and make data-driven decisions.

Common Pitfalls and Errors to Avoid

A/B testing requires meticulous attention to detail. Common errors can skew results and lead to inaccurate conclusions. These errors often stem from a lack of planning or inadequate analysis.

- Insufficient Sample Size: A small sample makes a test underpowered. It can miss a real improvement, and a result that does reach statistical significance may overstate the true effect or fail to reflect how the wider population behaves. A larger sample increases the likelihood of accurately capturing user behavior.

- Ignoring External Factors: External factors, like seasonal trends or marketing campaigns, can significantly impact test results. Failure to account for these factors can lead to misleading conclusions. Proper controls and careful consideration of concurrent events are essential.

- Poorly Defined Hypothesis: A vague or ill-defined hypothesis can lead to an ineffective test. A clear, specific, and measurable hypothesis is crucial for determining the success or failure of a test.

- Testing Too Many Variables Simultaneously: Changing many elements at once makes it difficult to isolate the impact of each individual change, because their effects can overlap and obscure one another. A phased approach that tests one variable at a time yields clearer, more attributable results.

- Inadequate Data Collection and Analysis: Incomplete or poorly collected data can lead to skewed results. A meticulous data collection process is essential, along with robust data analysis techniques.
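The traffic cost of testing many variables at once is easy to underestimate. If every combination of options is served as its own version, the number of versions multiplies, and each one needs its own adequately sized sample. The sketch below counts the combinations for a hypothetical set of page elements; the per-combination user count is a placeholder that would come from a sample-size calculation.

```python
from itertools import product

# Hypothetical elements and options under consideration.
options = {
    "headline": ["current", "question-based", "benefit-led"],
    "button_color": ["blue", "green"],
    "hero_image": ["product", "lifestyle"],
}

combinations = list(product(*options.values()))
users_per_combination = 8_000  # placeholder taken from a sample-size calculation

print(len(combinations))                          # versions that would need to be served
print(len(combinations) * users_per_combination)  # users needed across all versions
```

Three headlines, two button colors, and two images already produce 12 versions and 96,000 users, compared with 16,000 users for a single two-version test at the same per-group size.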

Controlling for External Factors

External factors can significantly impact the outcome of an A/B test. It is crucial to account for these factors to ensure accurate results.

- Using Control Groups: A control group provides a baseline for comparison, allowing you to isolate the impact of the changes being tested. The control group is crucial for measuring the effect of the changes made.

- Randomization: Randomly assigning users to different groups helps ensure that external factors are distributed evenly across the groups. This process ensures a fair comparison between the test and control groups, minimizing the influence of external factors.

- Statistical Adjustment Techniques: Statistical techniques can be employed to account for the effects of external factors. These techniques, such as regression analysis, can help to adjust the results for the influence of external factors, increasing the accuracy of the findings.
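Randomization is often implemented by hashing a stable user identifier, so assignment looks random across users but stays the same for a given user on every visit. The sketch below is one such approach using SHA-256 from Python's `hashlib`; the function name and the `experiment:user_id` key format are assumptions. Including the experiment name in the key keeps assignments in different experiments independent of one another.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "variant")) -> str:
    """Deterministically map a user to a variant for a given experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Use the first 8 bytes of the digest as an unsigned integer bucket.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]


# The same user always lands in the same group for the same experiment.
assert assign_variant("user-42", "headline-test") == assign_variant("user-42", "headline-test")
print(assign_variant("user-42", "headline-test"))
```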

Statistical Significance and Confidence Levels

Statistical significance and confidence levels are crucial for determining the validity of A/B test results.

- Understanding Statistical Significance: A result is statistically significant when it would be unlikely to occur by chance if there were no real difference between the variations. The significance level (alpha), conventionally set at 0.05, is the threshold the p-value is compared against: results with a p-value below 0.05 are generally considered statistically significant.

- Interpreting Confidence Levels: A confidence interval gives a range of plausible values for the true population parameter, such as the true difference in conversion rates. For the same data, a higher confidence level produces a wider interval; a narrower interval at a given confidence level indicates greater precision.

- Example: A 95% confidence level means that if the test were repeated many times and an interval calculated each time, about 95% of those intervals would contain the true population value.
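To see the trade-off between confidence level and interval width, the sketch below computes a normal-approximation (Wald) interval for the difference between two conversion rates using the standard library. The Wald interval is one simple choice among several; the function name and counts are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald interval for the difference in conversion rates, p_B - p_A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # 1.96 for 95%, about 2.58 for 99%
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical results: 5% vs 6% conversion with 10,000 users per variant.
ci_95 = diff_confidence_interval(500, 10_000, 600, 10_000, confidence=0.95)
ci_99 = diff_confidence_interval(500, 10_000, 600, 10_000, confidence=0.99)
print(ci_95, ci_99)
```

Both intervals exclude zero here, but the 99% interval is wider than the 95% one: the extra confidence is paid for with a less precise range.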

Comparing A/B Testing Tools

Various A/B testing tools are available, each with its own strengths and weaknesses. Choosing the right tool depends on specific needs and resources.

- Functionality and Features: Tools vary in their features, from basic A/B testing to more advanced functionalities like multivariate testing, surveys, and segmentation. Consider the specific functionalities needed for your testing strategy.

- Ease of Use: Some tools are easier to use than others. Choose a tool that aligns with your team’s technical expertise.

- Cost and Scalability: Different tools come with different pricing models. Consider the scalability and cost implications when making your decision.

User Experience in A/B Testing

User experience (UX) is paramount in A/B testing. Changes should not compromise the user experience.

- Prioritizing User Needs: Any changes made should align with user needs and preferences. A focus group or usability testing can be useful to gather user feedback on the proposed changes.

- Maintaining User Experience Consistency: Tested changes should fit the existing experience rather than disrupt it; a consistent experience supports user satisfaction and engagement.

Case Studies and Examples

A/B testing, when done correctly, can provide valuable insights for optimizing website performance and user experience. Analyzing successful and failed case studies can highlight the nuances of this process and help you avoid common pitfalls. This section will explore real-world examples, examining both positive and negative outcomes to provide a practical understanding of A/B testing effectiveness.

A Successful A/B Test Case Study

A major e-commerce platform wanted to improve the conversion rate on its product pages. They ran an A/B test comparing two different product page layouts. The original layout (A) featured a large, prominent image of the product and a concise description. The variant (B) included a more detailed product description, a customer review section, and a video demonstrating the product in use.

The results showed a significant increase in conversions for layout B. The variant (B) saw a 15% increase in conversions compared to the control group. This improvement in conversion rates directly translated into increased revenue. The success was attributed to the addition of social proof (customer reviews) and interactive elements (video), which fostered user trust and confidence in the product.

An A/B Test That Failed

A SaaS company tested a new pricing model. The original pricing model (A) was a tiered structure with different features and price points. The variant (B) offered a single flat-rate option covering all features. The test showed a decrease in conversions: although the flat rate appealed to some customers, it confused others.

It lacked the clear value proposition and transparency of the tiered structure. The failure lay not in the A/B testing method itself but in the design of the variant, which did not account for the needs of a diverse customer base.

Comparison of A/B Test Case Studies

| Case Study | Description | Results | Key Takeaways |
|---|---|---|---|
| E-commerce Product Page Redesign | Compared a simple image-centric layout (A) to a detailed layout (B) with reviews and video. | Variant B saw a 15% increase in conversion rate. | Adding social proof and interactive elements can significantly boost conversion rates. |
| SaaS Pricing Model | Tested a single flat-rate pricing model (B) against a tiered structure (A). | Variant B saw a decrease in conversions. | A clear value proposition and transparency are crucial in pricing strategies; one-size-fits-all approaches may not work for all customers. |
| Blog Post Headline Optimization | Tested different headline variations for a blog post to see which attracted more clicks. | Variant C, using a question-based headline, received 20% more clicks than the control. | Using question-based headlines can improve click-through rates, engaging users with curiosity. |
| Newsletter Signup Form | Compared a short, concise signup form (A) to a longer form with extra fields (B). | Variant A saw a 10% increase in signup conversions. | Keeping the signup process simple and frictionless is critical for maximizing conversions. |

Impact of A/B Testing on Various Businesses

A/B testing has demonstrably improved various aspects of businesses across industries. Improved user experience leads to higher customer satisfaction, increased conversions, and ultimately, higher revenue. For e-commerce platforms, optimized product pages can drive significant sales increases. In SaaS businesses, better pricing models can improve customer acquisition and retention. The ability to adapt and refine based on user feedback translates to stronger market positioning and greater profitability.

Wrap-Up

In conclusion, A/B testing is a dynamic process that empowers businesses to fine-tune their strategies for optimal performance. By understanding the principles, setting up effective tests, and analyzing results rigorously, organizations can make data-driven decisions to enhance user experience, increase conversions, and achieve their business objectives. Mastering A/B testing is a continuous learning journey, requiring ongoing evaluation and adaptation.