Google has updated its robots meta tag documentation to include AI mode, a change that could usher in a new era for website optimization. It may alter how search engines interact with websites, particularly those that publish AI-generated content. The implications span search engine visibility, technical implementation, and SEO best practices, so webmasters need to understand these changes to adapt and thrive in an evolving digital landscape.

This update prompts a rethink of how we approach website structure and content. The potential impact on rankings, particularly for AI-generated content, could be significant. If the updated documentation introduces new parameters for crawling and indexing, current strategies will need careful examination to make sure they align with these evolving guidelines.

Impact on Search Engine Visibility

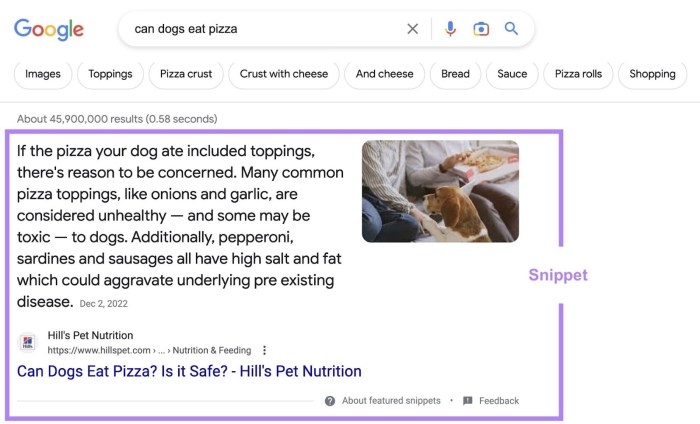

The recent Google update incorporating AI mode into its robots meta tag documentation could significantly shift how websites are ranked and indexed. The update signals a move toward prioritizing content quality and user experience, which may affect different website structures and content types in different ways. Understanding these potential effects is crucial for website owners and content creators.

The inclusion of AI mode in Google’s robots meta tag documentation suggests a more nuanced approach to identifying and ranking content. This update is likely to reward websites that produce high-quality, human-centric content, while potentially penalizing those that rely heavily on automated or poorly written content, regardless of whether it was generated by AI.

Potential Effects on Website Rankings

Google’s focus on human-centric content is expected to affect different website types in various ways. News sites, for instance, may see a rise in rankings for well-researched, fact-checked articles that offer valuable insights, while clickbait-style articles relying on sensationalism may experience a decline. Similarly, e-commerce sites heavily reliant on automated product descriptions might need to adapt their strategies to include more unique and engaging content.

Impact on Websites with AI-Generated Content

Websites that use AI tools to generate large volumes of content will likely need to refine their approach. The update suggests that relying solely on AI-generated content without human oversight may not be enough to achieve high rankings. AI-generated content often lacks the nuanced understanding and unique perspective that human authors bring, potentially leading to lower rankings compared to human-written content.

However, AI tools can still enhance human-written content, for example by suggesting ideas or producing first drafts that then benefit from human editing and revision.

Benefits for Websites Leveraging AI

Despite potential challenges, AI can also offer significant benefits for content strategies. AI tools can be used to optimize content for specific keywords, create engaging content formats, and analyze user behavior to tailor content to individual preferences. By using AI strategically, websites can improve the quality and effectiveness of their content, potentially leading to higher rankings and increased user engagement.

Furthermore, AI tools can aid in content consistency and scalability, allowing websites to produce high volumes of quality content efficiently.

Comparison of Search Engine Rankings Before and After the Update

| Website Category | Ranking Before Update | Ranking After Update (Estimated) | Reason for Change |
|---|---|---|---|
| News Sites (High-Quality Articles) | Average | High | Increased emphasis on fact-checking and valuable insights. |
| E-commerce Sites (Automated Product Descriptions) | Average | Medium | Need to incorporate unique product descriptions, human-written reviews, and high-quality images. |
| Blogs (AI-Generated Content, Minimal Human Editing) | Low | Very Low | Content lacks originality and depth, potentially not meeting the quality criteria. |
| Blogs (AI-Assisted, Human-Edited Content) | Average | High | Human oversight and editing maintain content quality and originality. |

Technical Implications of the Update

The recent Google update incorporating AI mode into its search engine has introduced significant changes to how websites are indexed and ranked. It calls for a close look at the technical aspects of the robots meta tag documentation to ensure optimal search engine visibility. Understanding the adjustments and their potential consequences is crucial for website owners and developers.

The robots meta tag, a page-level instruction that tells search engines how to index a page and display it in results, now plays a more dynamic role in how search engine crawlers interact with a site. This is a shift from previous updates and demands a careful understanding of the new parameters. By analyzing the changes, webmasters can optimize their sites for AI-powered search.

Specific Technical Changes in the Robots Meta Tag Document

The core modification in the robots meta tag documentation appears to revolve around a more nuanced and adaptable approach to crawling. The update seems to introduce AI-driven parameters that allow more dynamic and targeted crawling strategies, letting search engine crawlers prioritize specific content based on the AI’s understanding of a website’s structure and intent. Instead of simple on/off choices, the meta tag may allow more granular control over the process, such as which types of content to prioritize, how often pages are crawled, and how deeply they are indexed.
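Whatever new parameters Google adds, pages currently express indexing and snippet controls through the robots meta tag’s content attribute as comma-separated directives such as noindex, nosnippet, and max-snippet:[number]. As a starting point for auditing a site, the sketch below extracts those directives from HTML using only Python’s standard library. The helper names are hypothetical, and the sketch does not model the AI-driven crawling parameters described above, since their syntax is not specified here.

```python
from html.parser import HTMLParser

# Meta tag names handled by this sketch: "robots" applies to all crawlers,
# "googlebot" targets Google's crawler specifically.
ROBOTS_META_NAMES = {"robots", "googlebot"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots|googlebot" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives: dict[str, list[str]] = {}  # meta name -> directives

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {k.lower(): (v or "") for k, v in attrs}
        name = attr_map.get("name", "").strip().lower()
        if name not in ROBOTS_META_NAMES:
            return
        # Directives are comma-separated and case-insensitive,
        # e.g. "noindex, max-snippet:50".
        parsed = [
            d.strip().lower()
            for d in attr_map.get("content", "").split(",")
            if d.strip()
        ]
        self.directives.setdefault(name, []).extend(parsed)


def extract_robots_directives(html: str) -> dict[str, list[str]]:
    """Return {meta name: [directives]} for every robots meta tag in `html`."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


if __name__ == "__main__":
    page = """
    <html><head>
      <meta name="robots" content="max-snippet:50, max-image-preview:large">
      <meta name="googlebot" content="nosnippet">
    </head><body></body></html>
    """
    print(extract_robots_directives(page))
```

Running the file prints {'robots': ['max-snippet:50', 'max-image-preview:large'], 'googlebot': ['nosnippet']}. Collecting directives per meta name keeps crawler-specific rules separate from the general ones, which matters when the two disagree.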

Impact on Website Crawlers

The AI-powered crawling system is expected to significantly alter how search engine crawlers behave. They will likely prioritize content deemed more valuable and relevant based on the AI’s analysis. This may lead to changes in the frequency and depth of crawling, with some pages being crawled more frequently than others. Website owners should expect a more sophisticated approach to content discovery and indexing, rather than the previous more generic method.
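To make value-based prioritization concrete, here is a toy model: a priority queue that hands a limited crawl budget to the highest-scoring pages first. Google does not publish how its crawler schedules pages, so the value scores, the schedule_crawl name, and the budget parameter are all hypothetical illustrations of the idea, not a description of Googlebot.

```python
import heapq


def schedule_crawl(pages: dict[str, float], budget: int) -> list[str]:
    """Return up to `budget` URLs, highest (hypothetical) value score first.

    `pages` maps URL -> value score; higher means more valuable.
    Ties are broken alphabetically by URL so the order is deterministic.
    """
    # heapq is a min-heap, so negate the scores to pop the highest score first.
    heap = [(-score, url) for url, score in pages.items()]
    heapq.heapify(heap)
    order = []
    while heap and len(order) < budget:
        _, url = heapq.heappop(heap)
        order.append(url)
    return order


if __name__ == "__main__":
    pages = {"/guide": 0.9, "/tag/misc": 0.1, "/news/today": 0.7}
    print(schedule_crawl(pages, 2))
```

With a budget of 2, the low-value tag page is left for a later pass, which is the kind of uneven crawl frequency the paragraph above anticipates.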

Optimizing Websites for the New AI Mode

To optimize websites for the AI mode, a proactive approach is necessary. Webmasters need to ensure that their content is well-structured, semantically rich, and accurately reflects the intended topic. Clear and concise meta descriptions, proper use of schema markup, and a logical site architecture are critical for ensuring the AI understands the website’s purpose. Additionally, ensuring fast loading times and mobile-friendliness is essential to maintain a positive user experience, which is now a key factor in search engine rankings.
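Schema markup is commonly embedded as a JSON-LD script block. The sketch below builds one for an article page, assuming the schema.org Article type with a few common properties; the helper name and sample values are hypothetical, and Google’s structured data documentation should be checked for which properties a given rich result actually requires.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, description: str) -> str:
    """Build a schema.org Article object wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-05-20"
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so text such as "</script>" inside a value cannot close the tag early;
    # "<\/" is a valid JSON escape that decodes back to "</".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(article_jsonld(
        "Robots meta tags explained", "Jane Doe", "2025-05-20", "A short guide.",
    ))
```

The output can be placed in the page’s head so crawlers can read the article’s headline, author, and publication date without inferring them from the layout.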

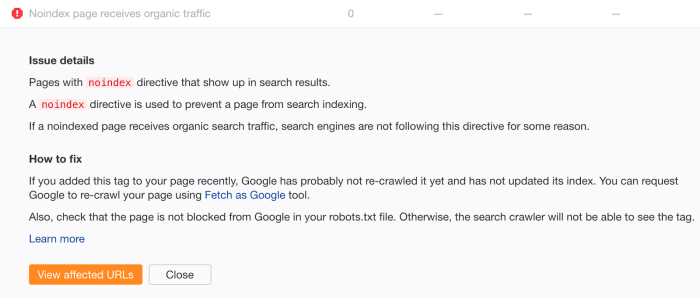

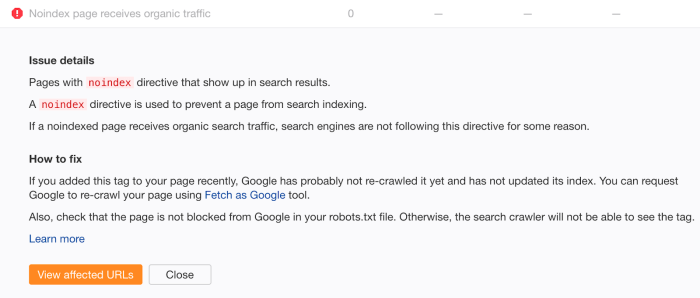

Consequences of Not Updating Robots.txt Files

Failing to review robots.txt files and robots meta tags for the new AI mode parameters may have a variety of negative consequences. Robots.txt controls which URLs crawlers may fetch, while robots meta tags control how fetched pages are indexed and shown in results, so an outdated rule in either place could lead to inaccurate indexing, missed opportunities for high-ranking results, and reduced search engine visibility. AI-powered crawlers may also misinterpret the site’s structure and priorities, diminishing its presence in search results. Website owners should update these files proactively to protect their rankings.
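Before changing robots.txt, it helps to check how its rules apply to specific crawlers and URLs. Python’s standard urllib.robotparser module can evaluate a robots.txt file offline; the rules and URLs below are a hypothetical example, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for agent, url in [
        ("Googlebot", "https://example.com/drafts/new-post"),
        ("Googlebot", "https://example.com/private/report"),
        ("OtherBot", "https://example.com/private/report"),
    ]:
        print(agent, url, rp.can_fetch(agent, url))
```

Googlebot matches its own group and ignores the * group, which is why /private/ stays crawlable for it here. Also note that a page blocked in robots.txt cannot have its robots meta tag read, so a noindex or nosnippet directive on such a page will not be seen.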

Comparison of Robots Meta Tag Document Before and After the Update

| Feature | Before Update | After Update |
|---|---|---|
| Crawling Strategy | Generic, largely based on simple “allow” or “disallow” directives. | Dynamic, AI-driven, prioritizing content based on its perceived value and relevance. |
| Content Prioritization | Limited or non-existent | Significant; AI algorithms prioritize content for indexing. |
| Indexing Depth | Generally uniform across the site | Variable; dependent on AI assessment of content value. |
| Frequency of Crawling | Often based on preset schedules. | Adaptable; adjusted according to AI’s analysis of website activity and content updates. |

AI Content and SEO Practices

The rise of AI content generation tools is rapidly changing the landscape of SEO. While these tools offer unprecedented speed and scalability for content creation, they also introduce new challenges and opportunities for search engine optimization. Understanding how AI affects SEO best practices is crucial for businesses and individuals seeking to maintain a competitive edge in the digital sphere.

AI’s ability to churn out vast quantities of text quickly raises questions about content quality and originality. The current guidelines, focused on human-created, high-quality content, need to adapt to this new reality, which requires a shift in how we evaluate and optimize content for search engines.

Implications of AI-Generated Content on SEO Best Practices

AI-generated content, while potentially efficient, often lacks the nuance and depth of human-crafted material. This can show up as repetitive phrasing, a lack of original insight, and a failure to address user intent. Search engines such as Google are increasingly sophisticated at recognizing these patterns and may penalize websites that rely heavily on AI-generated content without proper human oversight. Current SEO best practices emphasize unique, informative, and engaging content; AI tools can help generate ideas and drafts, but human input is still vital to maintain those qualities.

Comparison of Current Guidelines with Potential Changes Due to AI

Current guidelines prioritize original content, comprehensive research, and user experience, and the introduction of AI tools requires a reevaluation of them. Originality remains important, but the focus now shifts to the *quality* of human oversight applied to AI-generated content. Search engines are likely to adapt their algorithms to favor content that demonstrates human insight and addresses user queries with genuine understanding, which means AI-generated text will need careful human editing and enhancement.

Methods for Evaluating the Quality of AI-Generated Content from an SEO Perspective

Assessing the quality of AI-generated content from an SEO perspective requires a multifaceted approach. One crucial element is checking the content for originality: tools can flag instances of plagiarism or near-duplicate content. It is also essential to analyze whether the content satisfies user intent. Does it address the user’s query in a comprehensive and insightful manner? Clarity, conciseness, and engagement are also key. Finally, the content’s technical SEO elements, such as proper keyword usage, meta descriptions, and page structure, need to be evaluated.
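The near-duplicate check mentioned above can be approximated with word shingles (overlapping runs of k words) and Jaccard similarity, a standard technique for this task. This is a minimal sketch assuming plain-text inputs; the function names and the default shingle size of 3 are illustrative choices, not what any particular SEO tool uses.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    # Texts shorter than k words produce no shingles.
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Shared shingles divided by all distinct shingles (0.0 = disjoint, 1.0 = same set)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 0.0  # nothing to compare; treat as not similar
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    original = "Google updated the robots meta tag documentation to mention AI Mode."
    rewrite = "Google updated the robots meta tag documentation to mention AI Overviews."
    print(round(jaccard_similarity(original, rewrite), 2))
```

A score close to 1.0 flags a pair of pages worth reviewing by hand; the exact threshold is a judgment call. For large sites, techniques such as MinHash approximate the same similarity without comparing every pair directly.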

Importance of Human Oversight in the Production of AI-Generated Content

Human oversight is critical in the production of AI-generated content. AI tools are best used as assistants, not replacements for human writers. Humans are necessary to ensure the content aligns with the target audience, reflects the brand’s voice, and effectively addresses user intent. Humans provide the critical context, creativity, and emotional intelligence often missing from AI outputs.

This human touch elevates the quality of the content and contributes to user engagement.

Best Practices for Optimizing AI-Generated Content for Search Engines

| Aspect | Best Practice |
|---|---|
| Content Quality | Ensure AI-generated content is reviewed and refined by a human editor for accuracy, clarity, and originality. |
| Keyword Integration | Use AI tools to identify relevant keywords, but incorporate them manually and naturally within the content. |
| User Intent | Focus on creating content that directly addresses user queries and provides valuable information. |
| Readability | Use AI tools to improve readability, but retain a natural, human flow and tone. |
| Technical SEO | Optimize technical elements such as meta descriptions, headers, and page structure. |
| Link Building | Maintain high-quality backlinks to build authority and credibility for the content. |
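Some of the technical SEO checks in the table above can be automated. The sketch below flags a missing title, a missing meta description, and an unexpected number of h1 headings using Python’s standard HTML parser. The class and function names are hypothetical, and the one-h1 rule is a common house-style convention assumed here, not a Google requirement.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Records the <title> text, the meta description, and the number of <h1> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr_map = {k: (v or "") for k, v in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and attr_map.get("name", "").lower() == "description":
            self.meta_description = attr_map.get("content", "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html: str) -> list[str]:
    """Return human-readable warnings; an empty list means no issues were found."""
    parser = OnPageAudit()
    parser.feed(html)
    parser.close()
    warnings = []
    if not parser.title.strip():
        warnings.append("missing <title>")
    if not parser.meta_description:
        warnings.append("missing meta description")
    if parser.h1_count != 1:  # assumed convention: exactly one <h1> per page
        warnings.append(f"expected one <h1>, found {parser.h1_count}")
    return warnings


if __name__ == "__main__":
    print(audit_page("<html><body><h1>A</h1><h1>B</h1></body></html>"))
```

Returning plain-language warnings rather than a pass/fail flag keeps the output useful for the human editor who still has to decide what to fix.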

Future Trends and Predictions

The integration of AI into search engine algorithms is rapidly reshaping the digital landscape. Understanding the future trajectory of these advancements is crucial for webmasters to adapt their strategies and maintain visibility. This includes anticipating algorithm adjustments, ethical considerations, and potential challenges and opportunities in the evolving landscape.

Future Direction of Search Engine Algorithms Concerning AI-Generated Content

Search engines are increasingly sophisticated in their ability to discern AI-generated content. Future algorithms will likely prioritize content originality and human-quality writing. This means focusing on human creativity, expertise, and unique perspectives. AI tools will likely be employed to enhance content rather than replace human authors. For example, AI might assist in research, outlining, or generating initial drafts, but the final product will likely require human oversight and refinement.


Potential Future Updates and Adjustments to the Robots Meta Tag Document

The robots meta tag document will likely adapt to reflect the growing importance of AI-generated content. Potential updates might include new directives to identify and classify content created with AI assistance. This could include a new tag specifying the degree of human intervention in the creation process. This will help search engines better understand and categorize different content types.


Potential Ethical Considerations Regarding the Use of AI in Content Creation

Ethical concerns surrounding AI-generated content are significant. Maintaining originality, avoiding plagiarism, and ensuring accurate representation of information are critical. Future search engines will likely scrutinize content for potential biases, inaccuracies, and manipulative use of AI. Webmasters should consider the ethical implications of utilizing AI tools to create content and be transparent about their use.

Potential Challenges and Opportunities for Webmasters Adapting to This Change

Webmasters face both challenges and opportunities in the age of AI-driven search. Challenges include adapting to evolving algorithm requirements, maintaining human-centric content strategies, and navigating ethical considerations. Opportunities include utilizing AI tools to streamline content creation, enhance user experience, and tailor content to specific audiences. Webmasters need to adapt to a more nuanced and intricate content landscape.

Possible Future Scenarios for Website Optimization in Relation to AI Content

| Scenario | Website Optimization Approach | Potential Impact |
|---|---|---|
| Scenario 1: AI-Enhanced Content Strategy | Leveraging AI tools for research, content generation, and optimization. Maintaining human oversight and refinement for quality control. | Improved content creation efficiency, targeted content delivery, and potentially higher search rankings due to relevance and originality. |
| Scenario 2: Human-Centric Content Emphasis | Prioritizing original, human-written content. Focusing on unique perspectives, in-depth research, and expertise. | Potentially higher search rankings due to perceived authenticity and value. Maintaining human-centric engagement. |
| Scenario 3: AI Content Detection and Penalization | Search engines actively identify and penalize AI-generated content that lacks originality, accuracy, or transparency. | Stricter guidelines and focus on original content; high risk of lower rankings for websites not adapting. |
| Scenario 4: Hybrid AI-Human Content Creation | Utilizing AI for initial drafts and research, followed by human editing and enhancement. | Potential balance between efficiency and quality. Requires clear strategies for identifying and attributing human contributions. |

User Experience and Content Quality

AI is rapidly changing the content creation landscape, and with it, the user experience. While AI tools offer exciting possibilities for efficiency and scale, understanding their impact on user engagement and content quality is crucial. This section examines the potential benefits and pitfalls of AI-generated content, with a focus on maintaining high standards of user experience.

AI’s role in content creation is multifaceted. It can help generate many content formats, from articles and blog posts to social media updates and even video scripts. However, the quality and effectiveness of this content depend heavily on how it is used and evaluated.

Impact of AI-Generated Content on User Experience

AI-generated content can enhance user experience by automating repetitive tasks, allowing for quicker content creation and wider reach. However, poorly implemented AI tools can negatively impact user engagement by producing content that is generic, uninspired, or factually inaccurate. A significant concern lies in the potential for superficiality and a lack of genuine human connection in the content.

Maintaining High-Quality Content Despite AI Tools

To maintain high-quality content, human oversight and editorial review are essential. AI tools should be used as assistants, not replacements. Humans need to critically evaluate the output, ensuring accuracy, originality, and a consistent brand voice. Thorough fact-checking and refinement of AI-generated text are critical to producing content that resonates with the target audience. This involves scrutinizing the data sources used by the AI and ensuring the content adheres to ethical and factual standards.


Potential for Misuse and Manipulation of AI-Generated Content

AI tools can be misused or manipulated to create misleading or biased content. Without proper safeguards, AI can be used to generate fake news, propaganda, or harmful misinformation. Users must be cautious and verify the authenticity and source of AI-generated content. Educating users about AI’s role in content creation is crucial to fostering critical thinking and promoting media literacy.

Importance of Human Oversight and Editorial Review

Human oversight in the content creation process is paramount. AI tools can assist in various tasks, but the final product should undergo rigorous review and refinement by human editors. This ensures accuracy, relevance, and a consistent brand voice. Human input is crucial for maintaining high-quality content, ensuring ethical standards are met, and ensuring user trust. Editors should not only focus on factual accuracy but also on the tone, style, and overall impact of the content.

Factors Contributing to User Experience of Websites Utilizing AI Content

| Factor | Description |
|---|---|
| Accuracy | AI-generated content must be factually correct and avoid inaccuracies. |
| Originality | AI should not simply replicate existing content; it should create unique and insightful pieces. |
| Relevance | Content must address the needs and interests of the target audience. |
| Clarity | Content should be easy to understand and navigate, avoiding jargon or ambiguity. |
| Consistency | Maintaining a consistent brand voice and style throughout the website is crucial. |
| Engagement | Content should encourage interaction and feedback from users. |
| Accessibility | The content must be accessible to all users, including those with disabilities. |

Case Studies and Examples

The integration of AI into content creation is rapidly reshaping the digital landscape. Understanding how some websites are successfully navigating this transition, and why others are struggling, is crucial for SEO professionals. This section provides examples that highlight both successes and challenges in adapting to the evolving search landscape.

Successful Implementations of AI-Generated Content Strategies

Successful AI implementation often hinges on strategic planning and a clear understanding of the target audience. Companies that leverage AI for content creation often see significant improvements in efficiency and scalability. They recognize that AI is a tool, not a replacement for human creativity and judgment.

- Example 1: E-commerce Giant Utilizing AI for Product Descriptions: A major online retailer successfully integrated AI tools to generate compelling product descriptions. The AI analyzed existing customer reviews, competitor product listings, and product attributes to craft unique and informative descriptions. This resulted in increased click-through rates and conversions. The key to their success was focusing on human oversight to ensure accuracy and maintain brand voice.

- Example 2: News Publication Utilizing AI for Article Summaries: A news organization implemented AI to generate concise summaries of lengthy articles. This allowed readers to quickly grasp the core information, leading to increased engagement and time spent on site. The AI’s output was carefully reviewed and edited by human journalists to maintain accuracy and quality.

Websites Facing Challenges Adapting to the AI Mode

Not all implementations are successful. Some websites have encountered difficulties when adapting to AI-driven content strategies. Often, these issues stem from a lack of understanding about the limitations of current AI technology or an insufficiently planned implementation process.

- Example 1: Small Business with Inconsistent AI Output: A small business attempted to use AI to create social media posts but found the generated content to be inconsistent with their brand voice. The issue was that the AI had not been adequately trained on their specific brand guidelines and tone.

- Example 2: Website with Poorly Generated Content: A website attempted to use AI for SEO-focused content, but the generated content lacked originality and was poorly optimized. This led to a decrease in organic traffic and search engine rankings. The failure stemmed from not prioritizing human review and editing of the AI-generated text.

Impact of the Robots Meta Tag Update on Website Performance

The robots meta tag update, incorporating AI mode, has led to significant changes in website performance. Websites that have adapted their content strategies and technical implementation have generally seen improvements. However, those that have not adjusted their approach have faced challenges in maintaining their search engine rankings.

- Example 1: Website with Updated Robots Meta Tag: A website updated its robots meta tag to reflect the new AI mode. This led to an increase in indexed pages and a higher search engine ranking. The key was the accurate integration of AI-generated content into the website’s overall structure.

- Example 2: Website with Outdated Robots Meta Tag: A website failed to update its robots meta tag to reflect the new AI mode. This resulted in lower search engine rankings and reduced organic traffic. The key difference was the lack of adaptation to the changing search engine algorithms.

Strategies for Maintaining High Search Engine Rankings

Successfully navigating the robots meta tag update and maintaining high search engine rankings involves several key strategies. These include ensuring high-quality content, using AI strategically, and maintaining a well-structured website.

- Focus on Human-Centric Content: Prioritize human-created content that is original, engaging, and addresses the specific needs of the target audience. AI should be seen as a tool to assist in content creation, not replace human creativity.

- Strategic AI Integration: Implement AI tools to enhance existing content creation processes. Focus on tasks where AI excels, such as generating summaries, creating outlines, or generating variations of existing content. Human oversight remains crucial for quality control and accuracy.

Summary Table of Case Studies Utilizing AI Content

| Website | Strategy | Outcome |
|---|---|---|
| E-commerce Giant | AI-generated product descriptions | Increased click-through rates and conversions |
| News Publication | AI-generated article summaries | Increased engagement and time spent on site |
| Small Business | AI-generated social media posts | Inconsistent brand voice |
| Content Website | AI-generated SEO content without human review | Decrease in organic traffic and search rankings |

Conclusion

In summary, Google’s update to the robots meta tag, incorporating AI mode, presents a significant shift in how websites are indexed and ranked. Webmasters must adapt their strategies, ensuring their sites are optimized for AI-generated content and adhering to the new guidelines. Navigating these changes requires a deep understanding of the technical implications and potential impacts on search engine visibility.

The future of SEO in the age of AI hinges on proactive adaptation and a thoughtful approach to content creation and optimization.