Measurement data from Google tools sets the stage for a deep dive into optimizing software testing strategies. This guide explores several Google tools, from analytics to performance, highlighting the data they collect and how to use it to measure testing success. We’ll examine different metrics, data collection procedures, and visualization techniques to help you interpret the results and identify areas for improvement in your testing processes.

We’ll even look at how to integrate these tools into existing frameworks and discuss future trends in the field.

Understanding how to effectively leverage Google tools for testing measurement is crucial for producing high-quality software. This exploration will equip you with the knowledge and practical examples to improve your testing methodologies and ultimately enhance the software development lifecycle.

Introduction to Google Tools for Testing

Google offers a robust suite of tools designed to streamline and enhance the software testing process. These tools provide valuable insights into user behavior, performance metrics, and application functionality, enabling developers to identify and address issues proactively. From analyzing user interactions to monitoring application performance, Google tools offer a comprehensive approach to testing. These tools are instrumental in identifying potential bottlenecks, optimizing user experience, and ensuring the reliability and stability of software applications.

Understanding the various functionalities and data types these tools provide is crucial for effective testing strategies.

Overview of Google Tools for Testing

Various Google tools cater to different aspects of software testing. These tools cover a spectrum of needs, from basic usability analysis to complex performance benchmarking.

Analytics Tools

Understanding user behavior is critical for effective software testing. Google Analytics provides detailed insights into user interactions with applications. This data includes metrics like page views, bounce rates, time on page, and conversion rates. Analyzing these metrics helps developers identify areas where users are encountering difficulties or dropping off, enabling targeted improvements to enhance user experience.

- Google Analytics: Provides comprehensive data on user behavior, including website traffic, engagement patterns, and conversion rates. This data is crucial for understanding how users interact with a website or application and identifying areas needing improvement. Data like session duration, bounce rate, and traffic sources offer significant insights into the user journey.

- Firebase Analytics: Offers similar functionalities to Google Analytics, but is particularly useful for mobile applications. It provides insights into app usage, user engagement, and retention rates. Key data points include active users, session duration, and in-app events, helping developers optimize mobile experiences.

Performance Tools

Performance testing is vital to ensure an application can handle expected traffic and maintain responsiveness. Google provides tools for monitoring application performance, identifying bottlenecks, and optimizing application speed.

- Google PageSpeed Insights: This tool evaluates the performance of web pages and provides recommendations for improving loading speed. It assesses factors like image optimization, code efficiency, and server response time. The insights generated help developers create faster and more responsive web applications.

- Chrome DevTools: An essential tool for web developers, offering a comprehensive set of debugging and testing tools. DevTools allow developers to inspect the rendering and performance of web pages, analyze network requests, and identify performance bottlenecks. They offer insights into browser behavior and network communication.

Mobile Testing Tools

For mobile applications, specialized tools are available for simulating and testing across various devices and operating systems.

- Firebase Test Lab: Enables automated testing of mobile applications on various devices and emulators. This tool allows developers to run tests in parallel across multiple devices, ensuring compatibility and performance on a wide range of hardware and software configurations. It is particularly useful for stress testing and simulating real-world usage patterns.

Data Measurement Methods in Testing

Measuring the effectiveness of testing is crucial for optimizing the quality and efficiency of software development. Google tools provide robust metrics to track progress, identify bottlenecks, and ultimately improve the entire testing process. This section dives into the various metrics used to gauge testing effectiveness with Google tools, focusing on their calculation and interpretation.

Data measurement in software testing is more than just counting defects. It involves understanding the underlying reasons behind the numbers, enabling data-driven decisions to streamline the testing process and enhance the final product. Metrics are used to track progress, pinpoint areas requiring attention, and ultimately, deliver a better product.

Metrics for Measuring Testing Effectiveness with Google Tools

Various metrics are employed to assess the effectiveness of testing with Google tools. These metrics often encompass aspects like the number of bugs found, the time spent on testing, and the overall quality of the product. Understanding these metrics is key to interpreting test results and making informed decisions about the testing process.

Defect Detection Rate

This metric measures the rate at which defects are identified during the testing process. A high defect detection rate can indicate thorough testing, although it can also reflect defect-prone code; a low rate may point to gaps in test design, or simply to a more stable build.

Calculating the defect detection rate involves dividing the total number of defects found during testing by the total number of test cases executed. The result is usually expressed as a percentage.

Defect Detection Rate = (Total Defects Found / Total Test Cases Executed) × 100%


For example, if 50 defects were found in 1000 test cases, the defect detection rate is 5%. Interpreting this data helps in understanding the thoroughness of the testing and whether it needs adjustment.
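The formula translates directly into a small helper. The following is a minimal Python sketch; the function name `defect_detection_rate` is illustrative rather than part of any Google tool, and the call reproduces the worked example above.

```python
def defect_detection_rate(defects_found: int, test_cases_executed: int) -> float:
    """Defects found per test case executed, expressed as a percentage."""
    if test_cases_executed <= 0:
        raise ValueError("test_cases_executed must be positive")
    # Multiply first so that evenly divisible inputs give an exact result.
    return 100 * defects_found / test_cases_executed


# Worked example from the text: 50 defects across 1,000 executed test cases.
print(defect_detection_rate(50, 1000))  # 5.0
```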

Test Case Execution Efficiency

This metric evaluates how efficiently test cases are executed. It takes into account the time spent on testing and the number of test cases completed. A higher execution efficiency suggests a more streamlined and effective testing process.

Test Case Execution Efficiency = (Total Test Cases Executed / Total Time Spent on Testing)

For instance, if 1000 test cases were executed in 100 hours, the efficiency is 10 test cases per hour. Interpreting this metric can identify areas where testing is taking too long, indicating potential bottlenecks in the process.
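A similar sketch for execution efficiency, assuming test counts and hours are already available per suite. The suite names and figures are hypothetical, chosen so that they add up to the 1,000 test cases in 100 hours from the example; breaking the total down per suite is one way to see where a bottleneck sits.

```python
def execution_efficiency(test_cases_executed: int, hours_spent: float) -> float:
    """Test cases executed per hour of testing effort."""
    if hours_spent <= 0:
        raise ValueError("hours_spent must be positive")
    return test_cases_executed / hours_spent


# Hypothetical per-suite breakdown of the 1,000 cases / 100 hours example.
suites = {"checkout": (400, 20.0), "search": (300, 60.0), "profile": (300, 20.0)}

overall = execution_efficiency(1000, 100)
per_suite = {name: execution_efficiency(n, h) for name, (n, h) in suites.items()}
slowest = min(per_suite, key=per_suite.get)  # lowest cases-per-hour rate

print(overall)                      # 10.0
print(slowest, per_suite[slowest])  # search 5.0
```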

Test Coverage Metrics

These metrics measure the extent to which tests exercise the code of the software under test. Higher coverage generally suggests more thorough testing, although it does not guarantee that the tests check the right behavior.

Common variants include statement coverage and branch coverage (often treated as equivalent to decision coverage), each giving a different view of which code paths the tests exercise. These metrics help identify gaps in testing and ensure comprehensive coverage.
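As an illustration of statement coverage, the sketch below treats statements as line numbers and assumes a coverage tool has already recorded which lines ran; both line sets are hypothetical.

```python
def statement_coverage(executed: set[int], executable: set[int]) -> float:
    """Percentage of executable statements (here: line numbers) that ran at least once."""
    if not executable:
        raise ValueError("no executable statements")
    covered = executed & executable  # ignore recorded lines that are not executable statements
    return 100 * len(covered) / len(executable)


executable_lines = set(range(1, 11))       # hypothetical: 10 executable statements
executed_lines = {1, 2, 3, 4, 5, 6, 7, 8}  # hypothetical: 8 of them ran under test
print(statement_coverage(executed_lines, executable_lines))  # 80.0
```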

Comparison of Measurement Methods

| Metric | Formula | Interpretation | Example |
|---|---|---|---|
| Defect Detection Rate | (Total Defects Found / Total Test Cases Executed) × 100% | Higher percentage indicates more thorough testing. Lower percentage may suggest gaps in testing strategy or inadequate test cases. | 5% detection rate could indicate needing more comprehensive test cases. |
| Test Case Execution Efficiency | (Total Test Cases Executed / Total Time Spent on Testing) | Higher efficiency means the testing process is more streamlined and efficient. Lower efficiency may indicate bottlenecks or areas requiring optimization. | 10 test cases per hour shows good efficiency, while 5 may indicate needing optimization. |
| Test Coverage (e.g., Statement Coverage) | (Number of statements executed / Total number of statements) × 100% | Measures the percentage of code statements covered by tests. Higher coverage indicates better test quality; lower coverage suggests gaps that need more test cases. | 80% statement coverage indicates room for improvement in test case design. |

Data Collection and Analysis Procedures

Collecting and analyzing data from Google tools for testing is crucial for measuring performance, identifying bottlenecks, and improving user experience. Proper data collection procedures ensure accurate insights and actionable results. This section details the steps involved in collecting, preparing, cleaning, and validating data for analysis.

Data Collection Procedures for Google Tools

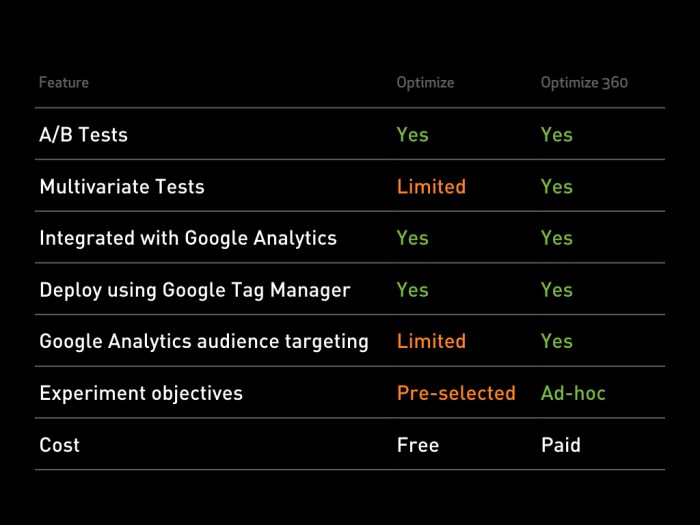

Data collection methods vary depending on the specific Google tool used. For example, Google Analytics collects website traffic data, while Google Optimize tracked A/B testing results until Google discontinued it in September 2023. Understanding the specific data points available within each tool is paramount for targeted collection. Clear objectives for the testing should guide the selection of relevant data.

Data Extraction and Preparation

The process begins with extracting data from the chosen Google tools. This typically involves exporting data into a spreadsheet format like CSV or Excel. Once extracted, the data needs preparation for analysis. This includes renaming columns, converting data types (e.g., parsing date strings into date values), and handling missing values. Proper formatting ensures compatibility with analysis tools and prevents errors.


Example: Converting a “Date” column from a string to a date format in Excel is crucial for date-based calculations.
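The preparation steps can be sketched with Python's standard `csv` and `datetime` modules. The export columns (`Date`, `Page Path`, `Sessions`) and their values are hypothetical stand-ins for whatever report you export. The sketch renames columns, parses date strings, and marks missing session counts as `None` so they can be imputed or dropped during cleaning.

```python
import csv
import io
from datetime import datetime

# Hypothetical CSV export; real column names depend on the report you export.
RAW_EXPORT = """Date,Page Path,Sessions
2024-05-01,/checkout,120
2024-05-02,/checkout,
2024-05-03,/checkout,98
"""

COLUMN_NAMES = {"Date": "date", "Page Path": "page_path", "Sessions": "sessions"}


def prepare(rows):
    """Rename columns, parse dates, and convert sessions to int (None when missing)."""
    prepared = []
    for row in rows:
        record = {COLUMN_NAMES[key]: value for key, value in row.items()}
        record["date"] = datetime.strptime(record["date"], "%Y-%m-%d").date()
        record["sessions"] = int(record["sessions"]) if record["sessions"] else None
        prepared.append(record)
    return prepared


records = prepare(csv.DictReader(io.StringIO(RAW_EXPORT)))
print(records[1])  # {'date': datetime.date(2024, 5, 2), 'page_path': '/checkout', 'sessions': None}
```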

Data Cleaning and Validation

Data cleaning and validation are essential to ensure the accuracy and reliability of the results. This involves identifying and correcting errors, inconsistencies, and outliers in the data. Techniques like removing duplicates, handling missing data (e.g., imputation or removal), and checking that values fall within expected ranges are critical. Data validation ensures the collected data meets predefined criteria and conforms to expected values.


Example: Identifying and removing duplicate user entries from a survey dataset is a common cleaning step.
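A minimal cleaning and validation pass might look like the following, assuming survey rows carry a `user_id` and a `score` on a 1 to 5 scale (both hypothetical field names). It keeps the first valid entry per user and rejects later duplicates and out-of-range scores. The rejected rows are returned so they can be reviewed rather than silently discarded.

```python
def clean(responses, score_range=(1, 5)):
    """Return (kept, rejected): first valid response per user_id, plus everything dropped."""
    low, high = score_range
    seen = set()
    kept, rejected = [], []
    for row in responses:
        if row["user_id"] in seen:
            rejected.append(row)  # duplicate user entry
            continue
        if not low <= row["score"] <= high:
            rejected.append(row)  # fails range validation
            continue
        seen.add(row["user_id"])
        kept.append(row)
    return kept, rejected


survey = [
    {"user_id": "u1", "score": 4},
    {"user_id": "u2", "score": 9},  # out of range
    {"user_id": "u1", "score": 4},  # duplicate of u1
    {"user_id": "u3", "score": 2},
]
kept, dropped = clean(survey)
print([r["user_id"] for r in kept])  # ['u1', 'u3']
print(len(dropped))                  # 2
```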

Data Analysis Process Flowchart

The complete data analysis process runs through seven sequential stages: Define Objectives → Select Google Tools → Data Extraction → Data Preparation → Data Cleaning and Validation → Data Analysis → Reporting and Insights.

Actionable Procedures for Data Analysis

The table below outlines the actionable procedures for each step in the data analysis process.

| Step | Actionable Procedure |
|---|---|
| Define Objectives | Clearly define the testing goals and the specific metrics to measure. |
| Select Google Tools | Choose the appropriate Google tools based on the testing objectives and available data. |
| Data Extraction | Export data from the selected Google tools in a suitable format (e.g., CSV). |
| Data Preparation | Rename columns, convert data types, and handle missing values. |
| Data Cleaning and Validation | Identify and correct errors, inconsistencies, and outliers in the data. |
| Data Analysis | Apply appropriate statistical analysis techniques (e.g., significance testing of A/B experiment results, regression analysis) to the cleaned data. |
| Reporting and Insights | Present the findings in a clear and concise manner, highlighting key insights and recommendations. |

Identifying Trends and Patterns in Data

Uncovering patterns and trends in testing data is crucial for understanding software quality and driving improvements in the development lifecycle. Google tools provide a wealth of data, from performance metrics to user interactions, that can reveal potential issues and areas for optimization. By analyzing this data, teams can identify recurring problems, anticipate future failures, and address weaknesses before they affect users.

This iterative, data-driven approach is crucial for building robust and user-friendly applications.

Common Trends in Testing Data

Google testing tools frequently reveal patterns related to specific functionalities, user interactions, or platform configurations. Common trends might include performance bottlenecks during peak usage hours, recurring usability issues in particular user flows, or security vulnerabilities in specific API endpoints. Identifying these patterns allows for targeted improvements and preventative measures.

Significance of Trends in Software Quality

Trends in testing data directly impact software quality. For example, consistently high error rates in a particular module suggest a need for code review and refactoring. Similarly, a high rate of user complaints about a specific feature indicates a need for usability improvements. Recognizing these patterns allows developers to address the root cause of the issues and ensure a more stable and user-friendly product.
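One way to make "consistently high error rates" concrete is to require a module to exceed an error-rate threshold in every reporting period. The sketch below assumes test results have already been aggregated into one `(period, module, failed, total)` row per module and period. The 10% threshold and the sample data are hypothetical.

```python
from collections import defaultdict


def consistently_failing(results, threshold=0.10):
    """Return modules whose error rate exceeds `threshold` in every period.

    `results` holds one (period, module, failed, total) tuple per module and period.
    """
    rates = defaultdict(dict)
    for period, module, failed, total in results:
        rates[module][period] = failed / total
    periods = {period for period, *_ in results}
    return sorted(
        module
        for module, by_period in rates.items()
        # Require data for every period and a rate above the threshold in each.
        if set(by_period) == periods and all(rate > threshold for rate in by_period.values())
    )


runs = [
    ("week1", "payments", 12, 100), ("week1", "search", 3, 100),
    ("week2", "payments", 15, 100), ("week2", "search", 14, 100),
]
print(consistently_failing(runs))  # ['payments']
```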

Using Trends to Improve the Development Lifecycle

Data-driven insights from testing can significantly enhance the development lifecycle. For instance, identifying performance bottlenecks early in the development process allows for architectural adjustments before the application reaches production. Similarly, pinpointing usability issues through user testing allows for iterative improvements, resulting in a more user-friendly final product. This proactive approach minimizes risks and ensures a higher quality outcome.

Comparison of Testing Methodologies and Data Analysis

Different testing methodologies employ varying data collection and analysis techniques. Unit testing, for example, focuses on individual components, yielding data about specific code modules. Integration testing, on the other hand, examines interactions between modules, providing data about system-level behavior. Performance testing generates data about response times and resource utilization. Analyzing these diverse datasets allows for a holistic understanding of the software’s behavior and quality.

A combination of these approaches yields a comprehensive picture.

Interpreting Different Types of Testing Data

Different testing types yield different data, requiring specific interpretation techniques. Performance data, such as response times and throughput, helps identify bottlenecks and optimize resource allocation. Usability data, collected through user feedback and interaction analysis, reveals user experience issues and guides design improvements. Security data, gathered through penetration testing and vulnerability assessments, highlights potential security weaknesses and informs mitigation strategies.

A comprehensive analysis of these diverse datasets is essential for understanding the overall quality and robustness of the software.

Reporting and Visualization of Testing Data

Transforming raw testing data into actionable insights requires effective reporting and visualization. This process bridges the gap between technical data and business understanding, allowing stakeholders to quickly grasp the results and make informed decisions. Clear and concise reports, supported by compelling visuals, are crucial for demonstrating the value of testing efforts and highlighting areas needing attention.

Data visualization is a powerful tool in the software testing arena. By presenting data in a visually appealing and easily understandable format, testing teams can quickly identify trends, patterns, and anomalies. This facilitates a faster feedback loop, leading to quicker problem resolution and improved software quality.

Different Ways to Present Testing Data for Analysis

Various methods can be used to present testing data. These include tables, charts, and graphs, each suited for different types of data and analysis goals. Tables are excellent for presenting detailed data points, while charts and graphs are better suited for highlighting trends and patterns. The choice of presentation method depends on the specific data being analyzed and the intended audience.

Examples of Visual Representations Using Charts and Graphs

Visual representations like bar charts, line graphs, and pie charts effectively convey data trends. Bar charts are ideal for comparing different metrics across various test runs. For instance, a bar chart comparing the number of bugs found in different software modules provides a clear visual representation of the distribution of bugs. Line graphs are useful for tracking metrics over time, such as the rate of defect detection throughout the testing process.

A line graph showcasing the number of defects found per day can help identify patterns in defect reporting and pinpoint potential issues. Pie charts are effective for visualizing the proportion of different types of defects, enabling stakeholders to quickly understand the most prevalent issues. A pie chart illustrating the percentage of defects categorized as critical, major, minor, and trivial provides a quick overview of the defect severity distribution.
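The data behind both charts comes from a simple aggregation. This sketch uses a hypothetical defect log of `(date, severity)` pairs. It counts defects per day for the line graph and computes each severity's percentage share for the pie chart. The resulting series can then be imported into a spreadsheet or dashboard tool for charting.

```python
from collections import Counter

# Hypothetical defect log: (date reported, severity).
defects = [
    ("2024-05-01", "critical"), ("2024-05-01", "minor"),
    ("2024-05-02", "major"), ("2024-05-02", "minor"),
    ("2024-05-02", "minor"), ("2024-05-03", "trivial"),
]

per_day = Counter(day for day, _ in defects)  # series for the line graph
by_severity = Counter(severity for _, severity in defects)
share = {sev: round(100 * n / len(defects), 1) for sev, n in by_severity.items()}  # pie slices, %

print(sorted(per_day.items()))  # [('2024-05-01', 2), ('2024-05-02', 3), ('2024-05-03', 1)]
print(share)  # {'critical': 16.7, 'minor': 50.0, 'major': 16.7, 'trivial': 16.7}
```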

Importance of Clear and Concise Reporting for Stakeholders

Clear and concise reporting is vital for communicating testing results to stakeholders. Technical jargon should be avoided, and reports should focus on key findings and actionable insights. The report should clearly state the objectives of the testing, the methodology used, and the results obtained. Comprehensive reports are essential for stakeholders to understand the quality of the software and make informed decisions.

Template for Reporting the Results of Testing Using Google Tools

A template for reporting testing results using Google tools should include:

- Test Objective: A clear and concise statement of the testing objectives.

- Test Methodology: Description of the testing methods and tools used.

- Test Environment: Details about the testing environment, including hardware and software configurations.

- Test Results: Detailed information on the test results, including pass/fail status, defect counts, and severity levels. This section should be well-organized, including tables, charts, and graphs to present the data visually.

- Analysis and Recommendations: A comprehensive analysis of the test results, identifying trends, patterns, and potential risks. Recommendations for improvement should be included.

- Conclusion: A summary of the key findings and overall assessment of the software quality.
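The template can also be captured as a small data structure so that every report has the same sections. The `TestReport` class below is a hypothetical sketch, not a Google format. Its fields mirror the template items, `render()` produces plain text, and all example values are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TestReport:
    """Illustrative container whose fields mirror the report template sections."""
    objective: str
    methodology: str
    environment: str
    results: dict[str, int]
    recommendations: list[str] = field(default_factory=list)
    conclusion: str = ""

    def render(self) -> str:
        """Render the sections in template order as plain text."""
        lines = [
            f"Test Objective: {self.objective}",
            f"Test Methodology: {self.methodology}",
            f"Test Environment: {self.environment}",
            "Test Results:",
            *(f"  {name}: {value}" for name, value in self.results.items()),
            "Analysis and Recommendations:",
            *(f"  - {item}" for item in self.recommendations),
            f"Conclusion: {self.conclusion}",
        ]
        return "\n".join(lines)


report = TestReport(
    objective="Verify the checkout flow on supported Android versions",
    methodology="Instrumentation tests run in Firebase Test Lab",
    environment="Example values: two physical devices, Android 13 and 14",
    results={"passed": 188, "failed": 12, "critical defects": 1},
    recommendations=["Fix the critical payment defect before release"],
    conclusion="Not ready for release until the critical defect is resolved",
)
print(report.render())
```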

Visualization Tools and Their Application to Testing Data

Various visualization tools can enhance testing data analysis. Choosing the right tool depends on the specific data type and the desired level of detail.

| Visualization Tool | Application to Testing Data |
|---|---|
| Google Sheets | Excellent for creating basic charts and graphs, analyzing data, and summarizing results. Easy to use and share. |
| Google Data Studio (now Looker Studio) | Ideal for creating interactive dashboards and reports. Allows for customization and integration with other Google tools. Excellent for data storytelling and providing insights to stakeholders. |
| Tableau | Powerful data visualization tool. Enables complex data analysis and sophisticated visualizations. Useful for creating insightful reports for stakeholders. |
| Power BI | Comprehensive data visualization and business intelligence tool. Suitable for creating interactive reports and dashboards. |

Integrating Google Tools into Testing Frameworks

Leveraging Google’s suite of testing tools can significantly enhance the efficiency and effectiveness of software testing. This integration allows for more comprehensive data collection, analysis, and reporting, ultimately leading to quicker identification of issues and improved software quality. Integrating these tools into existing testing frameworks can streamline workflows and provide valuable insights into user behavior and application performance.

Methods for Integration

Integrating Google tools into testing frameworks involves several key methods. Direct API calls are often used to access and manipulate data from Google services, such as Google Analytics or Firebase. This allows automated testing scripts to collect data in real-time, improving the accuracy and speed of testing. Furthermore, using Google Cloud Platform (GCP) services can allow for extensive scalability and enhanced data storage capabilities for large-scale testing efforts.
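As one concrete example of a direct API call, a test script can send custom events to Google Analytics 4 through the Measurement Protocol. The protocol accepts a JSON payload POSTed to the `/mp/collect` endpoint, with a `measurement_id` and `api_secret` in the query string. The sketch only builds the request. The measurement ID, API secret, client ID, event name, and parameters are all placeholders. Sending test traffic to a separate GA4 property keeps it out of production reports, and payloads can first be validated against the `/debug/mp/collect` endpoint.

```python
import json
import urllib.parse
import urllib.request

GA4_COLLECT = "https://www.google-analytics.com/mp/collect"


def build_test_event(measurement_id, api_secret, client_id, name, params):
    """Build (but do not send) a GA4 Measurement Protocol request for a single event."""
    query = urllib.parse.urlencode({"measurement_id": measurement_id, "api_secret": api_secret})
    body = {"client_id": client_id, "events": [{"name": name, "params": params}]}
    return urllib.request.Request(
        f"{GA4_COLLECT}?{query}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder credentials and a hypothetical event emitted at the end of a test case.
request = build_test_event("G-XXXXXXX", "YOUR_API_SECRET", "555.1",
                           "test_case_finished", {"suite": "checkout", "passed": 1})
print(request.full_url)
# urllib.request.urlopen(request) would send it; that network call is omitted here.
```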

Benefits of Integration

Integrating Google tools offers a multitude of benefits. Automated data collection and analysis lead to quicker identification of bugs and performance bottlenecks. Real-time insights into user behavior and application performance can be achieved, enabling proactive adjustments to the software development process. Enhanced reporting and visualization capabilities improve communication between development and testing teams, promoting collaboration and faster issue resolution.

The scalability of Google Cloud Platform (GCP) allows for handling large volumes of test data effectively.

Specific Integration Examples

Several specific integrations demonstrate the practical application of Google tools. For instance, integrating Google Analytics into a web application testing framework allows testers to monitor user behavior, track conversions, and identify areas of the application requiring improvement. Similarly, integrating Firebase into a mobile application testing framework allows testers to monitor app usage, identify crashes, and measure performance metrics in real-time.

Using Google Sheets for data reporting can simplify data organization and analysis, enabling testers to create custom reports and dashboards.

Best Practices for Integration

Implementing best practices is crucial for successful integration. First, clearly define the specific testing goals and objectives to determine which Google tools are most relevant. Second, ensure that the integration process is well-documented, allowing for easy maintenance and future modifications. Furthermore, consider the security implications of accessing and using Google services within the testing framework. Finally, regularly review and update the integration to ensure continued relevance and effectiveness.

Testing Framework Compatibility

The following table highlights the compatibility of various testing frameworks with Google tools. Note that compatibility can vary depending on the specific tool and framework version.

| Testing Framework | Compatibility with Google Tools |
|---|---|
| Selenium | High compatibility with Google Analytics, Firebase, and other Google services through API calls |
| Appium | High compatibility with Firebase for mobile application testing |
| JUnit | Compatible with various Google tools through API calls and custom integrations |
| TestNG | Compatible with various Google tools through API calls and custom integrations |
| Cypress | High compatibility with Google Analytics for web application testing, with custom integration |

Case Studies and Practical Examples

Putting theory into practice is crucial for understanding the true potential of Google tools in software testing. Real-world examples showcase how these tools can be leveraged to enhance quality, efficiency, and overall testing processes. By examining successful implementations, we can identify effective strategies and address potential challenges. This section delves into specific applications, highlighting the tangible improvements and the impact on various testing aspects.

Real-World Applications of Google Tools for Testing

Google tools like Firebase, Google Sheets, and BigQuery provide diverse functionalities applicable to various testing scenarios. For instance, Firebase Test Lab can simulate different device configurations and operating systems, enabling comprehensive cross-platform testing. Google Sheets allows for efficient data entry, manipulation, and analysis during testing. BigQuery, with its scalable architecture, is ideal for processing large datasets, supporting detailed performance analysis.

Improving Software Quality Through Data Analysis

Data collected from Google tools can significantly enhance software quality. By analyzing metrics like crash rates, user behavior patterns, and performance bottlenecks, developers can identify and address potential issues early in the development lifecycle. For example, if Firebase Crashlytics reveals a high crash rate on a specific device model, developers can prioritize fixing the corresponding code issues, leading to a more stable and user-friendly application.
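Assume per-session crash data has been exported from the crash-reporting tool as `(device_model, crashed)` pairs (a hypothetical shape, with invented model names and counts). Ranking models by crash rate then takes only a few lines of Python. Normalizing per 1,000 sessions matters here: a popular model can log more crashes in absolute terms while actually being more stable.

```python
from collections import Counter


def crash_rate_by_model(sessions):
    """Return {device_model: crashes per 1,000 sessions}, highest rate first."""
    totals, crashes = Counter(), Counter()
    for model, crashed in sessions:
        totals[model] += 1
        crashes[model] += int(crashed)
    rates = {model: 1000 * crashes[model] / totals[model] for model in totals}
    return dict(sorted(rates.items(), key=lambda item: item[1], reverse=True))


# Hypothetical export: device_a has more crashes in total, device_b a higher rate.
sessions = ([("device_a", False)] * 970 + [("device_a", True)] * 30
            + [("device_b", False)] * 180 + [("device_b", True)] * 20)
print(crash_rate_by_model(sessions))  # {'device_b': 100.0, 'device_a': 30.0}
```

In practice, a minimum session count per model helps avoid over-reacting to models with very little traffic.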

Challenges and Solutions in Implementing Google Tools

Implementing Google tools in testing environments may present certain challenges. One common challenge is integrating these tools with existing testing frameworks. Solutions include carefully planned migration strategies, leveraging APIs for seamless data exchange, and utilizing readily available SDKs. Another challenge is effectively training the testing team to use the new tools and interpret the data. Training sessions and comprehensive documentation play a crucial role in overcoming this challenge.

Impact on Testing Process Efficiency

Google tools streamline the testing process in several ways. For instance, automated data collection and analysis reduce manual effort, allowing testers to focus on more strategic tasks. The real-time insights provided by these tools help identify and fix issues promptly, preventing delays and rework. This translates to faster release cycles, reduced costs, and increased overall project efficiency.

Detailed Case Study: Mobile App Performance Testing

A mobile application development team utilized Firebase Performance Monitoring to analyze the performance of their app across different devices and network conditions. The team observed significant performance degradation on older Android devices with limited network bandwidth. This data-driven insight allowed the team to optimize the application’s architecture, focusing on code efficiency and network optimization strategies for these specific scenarios.

This resulted in a 20% improvement in loading times for the affected user base. The team also used Firebase Test Lab to conduct comprehensive performance tests, simulating different network conditions and device configurations, which provided detailed data on the impact of various parameters on the application’s performance.

Future Trends and Advancements

The landscape of software testing is constantly evolving, driven by advancements in technology and the increasing complexity of applications. Google tools, with their robust data analysis capabilities, are poised to play a pivotal role in this evolution. Future trends in testing data measurement will likely see an integration of these tools with emerging methodologies, creating more efficient and insightful testing processes.

The use of AI and machine learning in testing will become more commonplace. These technologies can automate tasks, identify patterns, and predict potential issues, augmenting the capabilities of human testers. As data volume increases, the need for advanced analytics and visualization techniques will also grow, demanding further development of Google tools to meet these demands.

AI-Powered Automated Testing

The incorporation of AI and machine learning into testing processes will automate tasks like test case generation, defect prediction, and performance analysis. This automation will significantly reduce the time and effort required for manual testing, while simultaneously improving the accuracy and comprehensiveness of the results. Examples include using AI to identify edge cases in complex software, generating more effective test scenarios, and predicting potential performance bottlenecks.

This allows for more efficient resource allocation and a faster feedback loop in the development process.

Enhanced Data Visualization and Analytics

Advanced data visualization tools will play a critical role in presenting testing data in easily digestible formats. Google tools can evolve to provide interactive dashboards and sophisticated reporting features, empowering testers to understand complex data patterns and identify potential risks more effectively. This includes creating interactive visualizations that allow for drill-down analysis, providing deeper insights into performance issues or defect patterns.

Real-time data dashboards will also become more prevalent, enabling immediate identification of emerging problems.

Adaptability to Agile and DevOps

Google tools need to adapt to the dynamic nature of agile and DevOps methodologies. This requires the tools to support continuous integration and continuous delivery (CI/CD) pipelines, providing real-time feedback and allowing for automated testing at every stage of the development lifecycle. The tools should integrate seamlessly with existing agile frameworks, allowing testers to quickly analyze data, identify bottlenecks, and provide immediate feedback to developers.

This will lead to a more collaborative and efficient development process.
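A common way to give automated feedback at each CI/CD stage is a quality gate that fails the build when metrics fall below agreed thresholds. The sketch below is hypothetical: the thresholds and function names are assumptions. In a real pipeline, the inputs would be parsed from the test and coverage reports.

```python
# Hypothetical thresholds; each team would choose its own.
MIN_STATEMENT_COVERAGE = 80.0
MAX_FAILED_TESTS = 0


def quality_gate(coverage_pct: float, failed_tests: int) -> list[str]:
    """Return the list of gate violations; an empty list means the build may proceed."""
    violations = []
    if coverage_pct < MIN_STATEMENT_COVERAGE:
        violations.append(
            f"coverage {coverage_pct:.1f}% is below the {MIN_STATEMENT_COVERAGE:.1f}% minimum"
        )
    if failed_tests > MAX_FAILED_TESTS:
        violations.append(f"{failed_tests} failed test(s)")
    return violations


def gate_exit_code(coverage_pct: float, failed_tests: int) -> int:
    """Print each violation and return a process exit code (0 = pass, 1 = fail)."""
    violations = quality_gate(coverage_pct, failed_tests)
    for violation in violations:
        print("GATE FAILED:", violation)
    return 1 if violations else 0


# Example inputs; a pipeline would read these from its reports.
print(gate_exit_code(coverage_pct=76.5, failed_tests=2))
```

A CI job would typically call `sys.exit(gate_exit_code(...))` so that a non-zero exit code stops the pipeline.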

Integration with Emerging Technologies

The use of Google tools will extend to emerging technologies like cloud computing and serverless architectures. The tools will need to provide mechanisms for testing these complex environments, allowing for thorough evaluation of the performance and security aspects of these new systems. This includes the ability to simulate and test large-scale deployments in cloud environments. This adaptability will allow for thorough assessment of applications operating in dynamic, cloud-based infrastructures.

Improved Testing Process Efficiency

Potential improvements to existing testing processes include the automation of repetitive tasks, leading to faster turnaround times and increased efficiency. This includes streamlining data collection and analysis procedures, using AI to automate parts of the process, and creating automated reporting mechanisms. Tools can also be designed to provide actionable insights to developers and testers, facilitating faster resolution of issues.

For example, by identifying trends in error patterns, the tools can provide developers with valuable insights to address issues in their code.

Closing Notes

In conclusion, harnessing Google tools for testing measurement provides a powerful framework for optimizing software quality. By understanding data collection, analysis, and visualization techniques, we can identify trends, improve processes, and ultimately deliver better software products. The future of testing likely lies in leveraging these tools even further, and this guide equips you with the knowledge to navigate that future.