Google Removes Duplicate Organic Listings and Featured Snippets – Google removes duplicate organic listings and featured snippets, a change that significantly affects search results. It touches user experience, SEO strategies, and website visibility, so understanding how Google handles duplicates is crucial for maintaining a strong online presence. This article explores the reasons behind the change, its effects on featured snippets, and the implications for website owners.

The removal of duplicate listings forces websites to focus on unique content and optimize for search intent. This update reflects Google’s ongoing commitment to providing high-quality search results, prioritizing the user experience above all else. The impact on SERP visibility is a key consideration, and the strategies for avoiding duplicate content will be discussed.

Techniques for Identifying Duplicate Listings

Duplicate organic listings are a significant concern, often resulting from unintentional or malicious actions. These duplicate entries can harm a website’s search ranking and user experience. Effective identification and removal of these duplicates are crucial for maintaining a healthy online presence.

Identifying duplicate listings is a multi-faceted process that involves both manual and automated techniques. Understanding the common methods, the role of automated systems, and the underlying causes of duplication is key to developing effective prevention strategies.

Google’s recent move to remove duplicate organic listings and featured snippets is a significant development in search engine optimization. It highlights the importance of unique content and of avoiding overlap, and it is a reminder that SEO is an ever-evolving landscape in which keeping up with Google’s changes matters. A similar lack of attention to security issues, as seen in the FTC’s investigation into GoDaddy hosting’s blind spots to security threats, shows how crucial vigilance is in all aspects of online presence.

Ultimately, maintaining a strong online presence requires staying proactive and ahead of the curve, much like Google’s actions in combating duplicate content.

Common Methods for Duplicate Listing Detection

Various methods are employed to detect duplicate organic listings. These methods include keyword analysis, content similarity comparisons, and URL analysis. Keyword analysis involves examining the keywords used in different listings to identify overlaps. Content similarity comparisons use algorithms to score how similar two pieces of content are. URL analysis involves scrutinizing the URLs of different listings to determine whether they point to the same or similar content.
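To make the idea of a content similarity comparison concrete, here is a minimal sketch in Python. It is not how Google scores similarity; it simply assumes one common technique, Jaccard similarity over word shingles, and the function names and the shingle size `k=3` are illustrative choices.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of the two shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)
```

A score near 1.0 suggests two listings carry essentially the same text, while a score near 0.0 suggests they are distinct. Where to draw the line between "near-duplicate" and "different" is a judgment call that depends on the site.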

Role of Automated Systems in Duplicate Detection

Automated systems play a crucial role in efficiently identifying duplicate organic listings. These systems typically use sophisticated algorithms to compare content and metadata across multiple listings. The systems are designed to detect even subtle variations in content, such as different wordings or minor formatting changes. Automated tools are capable of handling large datasets, making them indispensable for large websites with numerous listings.
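As a rough illustration of how an automated check can scale to many listings, the sketch below groups pages by a fingerprint of their normalized text. It is a simplified assumption, not a description of any search engine's system: it catches copies that differ only in case, punctuation, or whitespace, and it will not catch reworded content. The `pages` mapping of URL to body text is hypothetical input.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after lowercasing and dropping punctuation and extra whitespace."""
    normalized = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalized body text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, body in pages.items():
        groups[fingerprint(body)].append(url)
    # Only fingerprints shared by more than one URL indicate duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Because each page is hashed once, the work grows linearly with the number of pages, which is what makes this kind of check practical for large sites.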

Factors Contributing to Duplicate Content Creation

Several factors contribute to the creation of duplicate content. These include unintentional mistakes during content creation, content scraping, and the use of automated content generation tools. Unintentional mistakes may arise from reusing content across different platforms or failing to update content when it is repurposed. Content scraping involves copying content from other websites without permission. Automated content generation tools can create numerous similar articles, leading to duplication.

Techniques for Preventing Duplicate Content Creation

Implementing a robust content creation process is essential for preventing duplicate content. Maintaining a unique content strategy, avoiding plagiarism, and utilizing a content calendar are key preventative measures. A unique content strategy focuses on developing original content that provides unique value to users. Avoiding plagiarism involves properly referencing and attributing content when necessary. Using a content calendar can ensure that content is well-planned and scheduled to avoid overlap and repetition.

Table of Duplicate Content Types, Origins, and Solutions

| Duplicate Type | Source | Prevention Method | Example |
|---|---|---|---|
| Identical Content | Content copied from other websites or unintentionally duplicated on the same site. | Implement a content originality checker; use a plagiarism checker; ensure all content is unique. | Two articles on the same topic with the exact same wording. |
| Near-Duplicate Content | Minor variations in phrasing or structure, often arising from content repurposing or slight modifications. | Use sophisticated similarity detection tools; ensure content is tailored to specific platforms; update existing content to avoid overlap. | Two articles with similar structure but different wording; two product descriptions on different pages with minor variations. |
| Duplicate Metadata | Inconsistent or repeated meta descriptions, title tags, or other metadata across multiple pages. | Implement a consistent metadata management system; use metadata tools to automatically generate appropriate tags. | Multiple pages with identical or near-identical title tags or meta descriptions. |
| Duplicate URLs | Multiple URLs pointing to the same or near-identical content. | Implement a robust URL management system; use canonical tags to indicate the preferred version of a page. | Two or more URLs linking to the same product page. |
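The "Duplicate URLs" row can be illustrated with a small URL normalizer. This is a sketch under stated assumptions: the list of tracking parameters is an example rather than a standard, and treating trailing slashes and fragments as insignificant is a choice that may not match how a given server behaves.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of tracking parameters; adjust for your own site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content"}


def normalize_url(url: str) -> str:
    """Reduce a URL to a comparable form so variants of one page collapse together."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"  # assumption: trailing slash is not significant
    query = urlencode(sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ))
    # The fragment is dropped because it is not sent to the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, query, ""))
```

Two URLs that normalize to the same string are candidates for a shared canonical tag, though it is still worth confirming that they really serve the same content.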

Impact on Featured Snippets

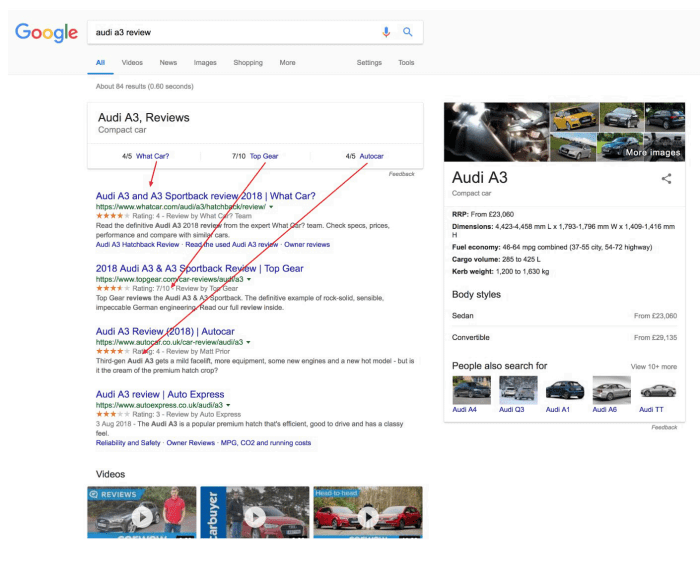

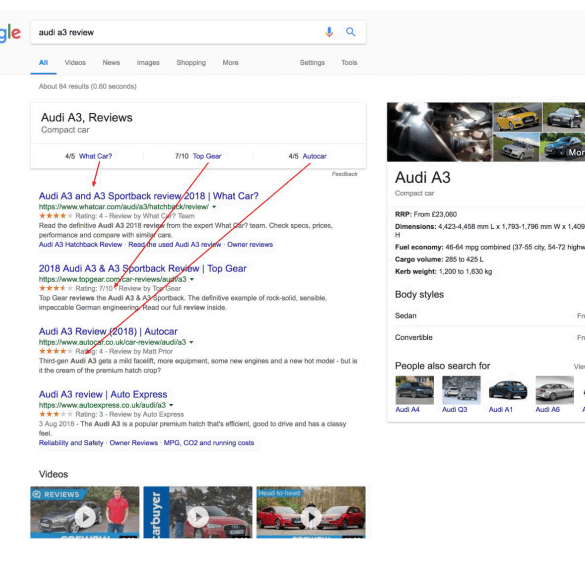

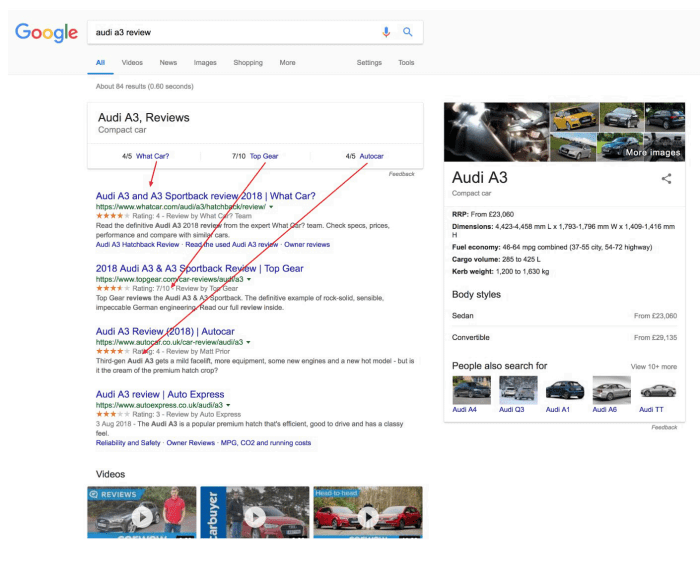

Removing duplicate organic listings can significantly impact featured snippet visibility. When multiple pages on a website present nearly identical content, search engines struggle to determine the most relevant and authoritative source for a query. This ambiguity can lead to the algorithm choosing a less optimal page for the featured snippet, potentially reducing the overall visibility of the site. The presence of duplicate content dilutes the signal the search engine receives, hindering its ability to accurately identify the best representation for the user’s query.

Duplicate listings often lead to a struggle for the algorithm to identify the most relevant content for the featured snippet.

Google’s recent move to remove duplicate organic listings and featured snippets is a big deal for SEO. It’s forcing us to think more strategically about how we present information. One crucial aspect of this is optimizing images for web performance without losing quality, which directly impacts user experience and search rankings. Learning how to compress images without sacrificing visual appeal is key to ensuring your site loads quickly and remains competitive in search results.

Optimizing images for web performance without losing quality can boost your site’s performance and ultimately improve your chances of ranking high in Google’s results. This is all part of the ongoing effort to create a more refined and useful search experience for users.

The algorithm considers various factors, including page authority, user engagement, and content freshness, to select the snippet. With duplicates, the algorithm is presented with conflicting signals, potentially resulting in a featured snippet that doesn’t accurately reflect the site’s best content. This ambiguity can impact both user experience and search engine ranking.

Relationship to Algorithm Selection

The search engine algorithms are designed to provide the most relevant and helpful results to users. When duplicate listings exist, the algorithm’s ability to accurately evaluate the true authority and value of the content is compromised. The algorithm might choose a less relevant page for the featured snippet because it is presented with a conflicting picture of the website’s overall content.

Google’s recent move to remove duplicate organic listings and featured snippets is a significant change for SEO. It’s forcing us to re-evaluate our strategies, and rethinking referral marketing to demonstrate your expertise becomes crucial. Ultimately, a focus on unique, high-quality content is paramount to staying competitive in this evolving search landscape.

In this scenario, the featured snippet may not accurately represent the most comprehensive or authoritative information available. This situation can lead to a reduction in user engagement and website ranking.

Improved Placement Potential

Removing duplicate content allows search engines to more accurately identify the most relevant and authoritative source for a query. This clarity helps in selecting the most appropriate page for the featured snippet. The algorithm can then focus on a single, well-optimized page, potentially leading to improved placement for the featured snippet. This improvement often leads to increased user engagement and improved ranking in organic search results.

For example, a company that previously had multiple pages with overlapping content for a specific product could now have a single, well-optimized page dedicated to that product, potentially leading to a higher chance of earning a featured snippet for relevant search queries.

Comparison to Organic Results

The impact of duplicate listings on featured snippets differs slightly from their impact on organic search results. While both suffer from reduced visibility, the effect on featured snippets is more direct. Featured snippets, by their nature, are intended to provide concise answers directly within the search results. Duplicate listings dilute the signal of relevance, which can cause the algorithm to select a less-optimal page for the featured snippet.

In organic results, duplicate content may lead to a decrease in ranking position but does not necessarily directly affect the featured snippet placement as much.

Impact on User Engagement and Ranking

| Featured Snippet Content | Duplicate Listing Content | Impact on User Click-Through Rate (CTR) | Impact on Website Ranking |
|---|---|---|---|
| Concise, relevant answer to a user’s query. | Conflicting or redundant content. | Potentially lower CTR if the snippet is less relevant or if the user is redirected to a less optimal page. | Potentially lower ranking if the featured snippet is less relevant or if the user is redirected to a less optimal page. |
| High-quality, authoritative information. | Low-quality or unoptimized duplicate content. | Increased CTR if the featured snippet is more helpful and accurate. | Increased ranking if the featured snippet is more relevant and accurate. |
| Well-structured, easy-to-read text. | Unstructured or poorly formatted duplicate content. | Higher CTR if the snippet is more engaging and readable. | Improved ranking if the featured snippet is more engaging and readable. |

Consequences for Website Owners

Google’s meticulous approach to maintaining quality search results includes identifying and removing duplicate content. This proactive measure, while upholding search engine integrity, can have significant implications for website owners whose content is flagged as a duplicate. The consequences extend beyond a simple removal notice; they can result in a substantial loss of visibility and revenue.

Website owners whose content is flagged as a duplicate risk losing valuable organic traffic. When search engines deem content redundant, they often remove the listing, effectively hiding the page from users searching for related keywords. This can lead to a precipitous drop in website visits, impacting businesses reliant on online traffic for sales, leads, or brand awareness. The loss of revenue stems directly from the decline in potential customers or clients reaching the website.

Negative Repercussions of Duplicate Content Removal

The removal of duplicate content listings can severely impact a website’s search engine ranking and organic traffic. Websites heavily reliant on organic traffic will experience a significant decrease in visibility and, subsequently, a reduction in revenue. A loss of organic traffic translates directly to lost opportunities for sales, leads, and brand exposure. This can be particularly damaging to e-commerce sites, blogs, and other online businesses where traffic drives revenue generation.

Importance of Unique Content Creation

Creating unique and original content is paramount for maintaining a strong online presence. Google’s algorithms prioritize content that provides value to users and is not duplicated from other sources. Producing unique content helps establish a website as a credible source of information, which directly impacts search engine ranking. This, in turn, leads to increased organic traffic, improved brand reputation, and higher conversion rates.

Strategies for Recovery

If a website’s content has been removed due to duplicates, recovery involves a multifaceted approach. First, identify the duplicate content. Analyze the website’s content for any instances of identical or nearly identical content across different pages or from other websites. Once identified, revise the content to ensure originality. This could involve rewriting existing content, adding unique perspectives, or incorporating new data.
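The identification step above can be sketched as a small pairwise audit. This is an illustrative approach using Python's standard `difflib` module, not a tool the article prescribes; the `pages` mapping and the `0.9` threshold are assumptions to tune for your own content.

```python
from difflib import SequenceMatcher
from itertools import combinations


def near_duplicate_pairs(
    pages: dict[str, str], threshold: float = 0.9
) -> list[tuple[str, str, float]]:
    """Return (url_a, url_b, ratio) for every page pair at or above the threshold."""
    results = []
    for (url_a, text_a), (url_b, text_b) in combinations(sorted(pages.items()), 2):
        # autojunk=False keeps the ratio stable for long page bodies.
        ratio = SequenceMatcher(None, text_a, text_b, autojunk=False).ratio()
        if ratio >= threshold:
            results.append((url_a, url_b, ratio))
    return results
```

Every pair of pages is compared, so this suits a site with a modest number of pages. The flagged pairs are the ones to rewrite, merge, or point at a single canonical version.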

Submitting updated content for re-indexing is crucial for regaining visibility. Website owners can leverage Google Search Console to monitor their website’s performance and identify any further issues.

Table: Duplicate Content Issues, Causes, and Solutions

| Issue Type | Cause | Mitigation Strategy | Expected Outcome |
|---|---|---|---|
| Identical Content on Multiple Pages | Duplicate content across different pages on the same website | Rewrite the content on all duplicate pages, focusing on unique aspects and perspectives. | Improved search engine ranking and reduced duplicate content issues. |
| Content Mirroring from External Sites | Plagiarism or copying content from other websites. | Rewrite the content to incorporate original insights and perspectives. Remove the original copied content. | Maintaining uniqueness and avoiding penalties for duplicate content. |
| Content Syndication Issues | Content shared across multiple platforms, potentially creating duplicates. | Ensure content is properly attributed and unique for each platform. | Avoid duplicate content issues while retaining content visibility on different platforms. |
| Poorly Implemented Canonicalization | Improper use of canonical tags leading to indexing issues. | Correctly implement canonical tags to establish the authoritative version of the content and prevent search engine confusion. | Clearer identification of the main content version, leading to better indexing and reduced duplication concerns. |
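For the canonicalization row, a quick way to spot problems is to read the canonical tag out of each page's HTML. The sketch below uses Python's built-in `html.parser`; the class and function names are illustrative, and fetching the HTML is left out on purpose.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def find_canonicals(html: str) -> list[str]:
    """Return all canonical URLs declared in the given HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals
```

An empty list means the page declares no canonical URL, and more than one entry means the page sends conflicting signals. Both cases are worth reviewing.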

Evolution of Search Algorithm

Search engines constantly refine their algorithms to provide users with the most relevant and high-quality results. A crucial aspect of this refinement is the ongoing battle against duplicate content. Duplicate content, whether intentional or accidental, dilutes the search results, making it harder for legitimate, unique content to be found. Understanding the evolution of these algorithms is vital for website owners to maintain a competitive edge in the online world.

Search engines have a vested interest in delivering the best possible user experience. Duplicate content, in all its forms, degrades this experience by creating confusion and cluttering search results. The search engines’ efforts to reduce duplicate content are driven by a desire to provide users with diverse and authoritative sources of information, thereby fostering a healthy and robust online ecosystem.

Historical Overview of Algorithm Updates

Search engine algorithms have undergone significant changes over time, particularly regarding how they identify and handle duplicate content. Early algorithms often struggled to differentiate between legitimate variations of content (e.g., different formats of the same article) and outright duplication. This led to a less effective search experience.

Reasoning Behind Reducing Duplicate Content

Search engines strive to rank pages with unique and high-quality content higher. Duplicate content dilutes the search results, making it difficult for users to find relevant information. The primary motivation behind these updates is to enhance the quality of search results and provide users with a better experience. This includes ensuring that users find trustworthy, authoritative content rather than multiple instances of the same or nearly identical information.

Examples of Algorithm Evolution

One example of algorithm evolution is the increasing sophistication in identifying duplicate content across various platforms. Search engines are now better equipped to detect near-duplicates, paraphrased content, and mirror sites, all of which previously might have evaded detection. These updates have led to a more accurate ranking of web pages, favoring those with unique and original content.

Another key example involves the development of advanced algorithms that analyze semantic relationships between pages. This enables the search engine to determine if pages are actually different expressions of the same information or are truly distinct and valuable sources.

Importance of Unique Content

Maintaining a unique and high-quality website is essential for long-term search engine success. Duplicate content can significantly hinder a website’s ability to rank well and attract organic traffic. A focus on originality, depth, and providing value to users is crucial for sustained success in the competitive online landscape.

Table: Evolution of Search Algorithm Updates

| Algorithm Update Date | Key Changes | Impact on Duplicate Content | User Experience |
|---|---|---|---|
| 2011 | Introduction of more sophisticated algorithms for detecting duplicate content. | Reduced the visibility of low-quality, duplicated content. | Improved search results with a higher proportion of unique content. |
| 2015 | Improved algorithms for identifying near-duplicate content and content mirroring. | Further decreased the prominence of duplicate or near-duplicate content. | Users were more likely to find original content and avoid repetition. |
| 2019 | Focus on semantic analysis and contextual understanding of content. | Improved the ability to distinguish between semantically similar but unique content. | Enhanced user experience by providing more nuanced and relevant results. |
| 2023 | Ongoing refinement of algorithms to handle more complex duplicate content scenarios. | Continued effort to maintain the accuracy and effectiveness of duplicate content detection. | Ongoing improvements in the accuracy of search results, focusing on user needs. |

Epilogue: Google Removes Duplicate Organic Listings and Featured Snippets

In conclusion, Google’s removal of duplicate organic listings and featured snippets underscores the importance of unique content for success. Website owners need to understand the implications of this change to maintain visibility and attract organic traffic. This process is essential for staying competitive in the ever-evolving digital landscape.