Data sampling in Google Analytics is a technique for analyzing website data quickly and efficiently. It lets you gain valuable insights without the computational burden of processing every single data point. Understanding how Google Analytics uses sampling, the different methods involved, and the factors influencing these decisions is crucial for interpreting results accurately. This article covers the fundamentals of data sampling in Google Analytics, helping you make the most of this tool.

This in-depth look at data sampling in Google Analytics will cover various aspects, from the fundamental principles of data sampling to the practical implementation of sampling methods. We’ll delve into the different sampling methods, the factors influencing the decision to use sampling, and how to interpret the results correctly, taking into account the inherent limitations and potential biases. The goal is to equip you with the knowledge to confidently use and interpret sampled data for your website analysis.

Introduction to Data Sampling

Data sampling in Google Analytics is a powerful technique that allows website analysts to glean insights from large datasets efficiently. It’s a crucial element in website analysis, enabling a quicker understanding of user behavior and trends. This approach is particularly useful when dealing with massive amounts of data, which can be computationally expensive and time-consuming to process in their entirety. By strategically selecting a representative subset of the complete data, Google Analytics can generate reliable results in a fraction of the time it would take to analyze all the data.

This speed allows for more frequent and timely analysis, which is often critical for making data-driven decisions in the dynamic world of online businesses.

Definition of Data Sampling

Data sampling in Google Analytics involves selecting a portion of the total user data collected from a website. This subset is representative of the entire user base, allowing analysts to draw conclusions about the broader user behavior without processing the complete dataset. This methodology is especially valuable when dealing with large volumes of data that would be computationally challenging to analyze fully.

Importance of Data Sampling

Data sampling is crucial for website analysis in Google Analytics because it allows analysts to analyze large datasets efficiently and rapidly. This efficiency translates into more frequent and timely insights, empowering faster decision-making and strategic adjustments.

Reasons for Google Analytics Sampling

Google Analytics employs sampling for various reasons, primarily to balance accuracy and speed in the face of substantial data volumes.

- Reduced Processing Time: Analyzing the entire dataset would be incredibly time-consuming. Sampling significantly reduces the processing time, allowing for quicker insights and faster decision-making.

- Lower Computational Costs: Processing the entire dataset demands substantial computational resources. Sampling dramatically decreases the resources required, making it a cost-effective method for analysis.

- Improved Data Accessibility: With sampling, data is available and analyzable within a shorter timeframe. This accessibility is critical for real-time decision-making, especially in dynamic online environments.

Trade-offs Between Accuracy and Speed

There’s a trade-off between the accuracy of the analysis and the speed of data processing when using sampling. While sampling provides speed, there’s a potential for a slight reduction in the precision of results. The extent of this trade-off is contingent on the methodology used for selecting the sample.
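The trade-off can be seen in a small simulation. The sketch below is illustrative only: it uses synthetic session data with a made-up conversion rate of about 3%, and the helper names `sampled_rate` and `estimate_spread` are hypothetical. It repeats the same sampled estimate many times and measures how much the result varies from one draw to the next.

```python
import random
import statistics

def sampled_rate(flags, fraction, rng):
    """Estimate the conversion rate from a simple random sample of sessions."""
    sample_size = max(1, int(len(flags) * fraction))
    sample = rng.sample(flags, sample_size)
    return sum(sample) / sample_size

rng = random.Random(42)
# Synthetic population: 20,000 sessions with a true conversion rate near 3%.
sessions = [1 if rng.random() < 0.03 else 0 for _ in range(20_000)]
true_rate = sum(sessions) / len(sessions)

def estimate_spread(fraction, repeats=50):
    """Standard deviation of the sampled estimate across repeated draws."""
    estimates = [sampled_rate(sessions, fraction, rng) for _ in range(repeats)]
    return statistics.pstdev(estimates)

print(f"true rate: {true_rate:.4%}")
for fraction in (0.01, 0.10, 0.50):
    spread = estimate_spread(fraction)
    print(f"{fraction:>4.0%} sample: spread between repeated estimates = {spread:.4%}")
```

Smaller samples are cheaper to process but their estimates scatter more widely around the true rate, which is the loss of precision described above.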

Comparison of Sampled and Non-Sampled Data

The following table contrasts sampled and non-sampled data in Google Analytics.

| Feature | Sampled Data | Non-Sampled Data |
|---|---|---|
| Data Volume | Reduced | Full |
| Processing Speed | Faster | Slower |
| Accuracy | Potentially lower | Higher |
| Cost | Lower | Higher |

Types of Data Sampling Methods

Understanding how Google Analytics gathers data is crucial for interpreting insights accurately. A significant part of this process involves sampling, which allows for the analysis of large datasets without processing every single data point. Different sampling methods affect the representativeness and reliability of the results. This section delves into the various techniques employed by Google Analytics, emphasizing the distinctions between probability and non-probability methods and their impact on data reliability.

Probability Sampling Methods

Probability sampling methods ensure every data point in the population has a known, non-zero chance of being selected. This approach is crucial for ensuring the sample accurately reflects the broader population. It allows for the calculation of sampling error and helps in generalizing findings to the entire dataset. Google Analytics selects its samples internally and does not let you pick a method, but the standard probability sampling methods are worth knowing, both for interpreting sampled reports and for sampling exported data yourself:

- Simple Random Sampling: Each data point has an equal probability of being selected. This method is straightforward to implement, but it can be computationally intensive for enormous datasets.

- Stratified Sampling: This method divides the population into subgroups (strata) based on specific characteristics. Then, a random sample is taken from each stratum, ensuring representation from different segments of the population. For example, in Google Analytics, you might stratify by user demographics (e.g., age groups, location) to ensure diverse segments are represented in the sample.

- Cluster Sampling: The population is divided into clusters, and a random sample of clusters is selected. All data points within the selected clusters are then included in the sample. This is often used when it’s difficult or expensive to identify individual data points, such as when analyzing user behavior across multiple websites or applications. This can significantly reduce the amount of data that needs to be processed, leading to significant time and cost savings.
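The three methods above can be sketched in a few lines of Python. This is a minimal illustration on synthetic session records, not a description of how Google Analytics samples internally; the record fields (`id`, `country`, `day`) and the function names are assumptions made for the example.

```python
import random
from collections import defaultdict

def simple_random_sample(records, n, rng):
    """Every record has the same chance of selection."""
    return rng.sample(records, n)

def stratified_sample(records, key, fraction, rng):
    """Sample the same fraction from every stratum (for example, each country)."""
    strata = defaultdict(list)
    for record in records:
        strata[record[key]].append(record)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # keep at least one per stratum
        sample.extend(rng.sample(members, k))
    return sample

def cluster_sample(records, key, n_clusters, rng):
    """Pick whole clusters at random (for example, whole days) and keep all their records."""
    clusters = sorted({record[key] for record in records})
    chosen = set(rng.sample(clusters, n_clusters))
    return [record for record in records if record[key] in chosen]

rng = random.Random(7)
# Hypothetical session records; the field names are illustrative only.
sessions = [
    {"id": i, "country": rng.choice(["US", "DE", "IN"]), "day": rng.randint(1, 30)}
    for i in range(3_000)
]

srs = simple_random_sample(sessions, 300, rng)
strat = stratified_sample(sessions, "country", 0.10, rng)
clus = cluster_sample(sessions, "day", 3, rng)
print(len(srs), len(strat), len(clus))
```

Note the difference in what each method guarantees: the stratified sample always contains every country, while the cluster sample contains every session from exactly three days and nothing from the others.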

Non-Probability Sampling Methods

Non-probability sampling methods, on the other hand, don’t assign a known probability of selection to each data point. Consequently, it’s not possible to accurately estimate the sampling error or generalize findings to the entire population. However, these methods are often faster and more convenient for specific research questions. Google Analytics is not documented to use them, but they often creep into ad hoc analysis of analytics data:

- Convenience Sampling: Researchers select the most accessible data points, which might not accurately represent the population. For example, this could involve using data from only the first few hundred sessions that are available in a particular time frame.

- Quota Sampling: Researchers aim to include a certain number of data points from each subgroup in the sample. While this aims for proportional representation, it does not guarantee a true random selection.
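To see why convenience sampling can mislead, consider a sketch with synthetic, time-ordered traffic. The conversion rates below are invented for the example: overnight sessions convert at about 1% and daytime sessions at about 5%. Taking "the first few hundred sessions" then captures only overnight behavior.

```python
import random

rng = random.Random(3)
# Synthetic day of traffic, ordered by time: the first half (overnight) converts
# at about 1%, the second half (daytime) at about 5%. Both rates are made up.
overnight = [1 if rng.random() < 0.01 else 0 for _ in range(5_000)]
daytime = [1 if rng.random() < 0.05 else 0 for _ in range(5_000)]
sessions = overnight + daytime

def rate(flags):
    """Share of sessions that converted."""
    return sum(flags) / len(flags)

convenience = sessions[:500]              # the first 500 sessions that arrived
random_pick = rng.sample(sessions, 500)   # 500 sessions drawn at random

print(f"true rate:        {rate(sessions):.3f}")
print(f"convenience rate: {rate(convenience):.3f}")
print(f"random rate:      {rate(random_pick):.3f}")
```

The convenience sample systematically understates the overall rate because it never sees daytime traffic, and no increase in its size would fix that as long as it is drawn from the start of the day.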

Impact on Data Reliability

The choice of sampling method significantly influences the reliability of the data. Probability methods, due to their random selection process, generally lead to more reliable and representative samples. Non-probability methods, while quicker, may introduce biases and inaccuracies. This is why the selection of an appropriate method is critical for achieving accurate conclusions.

Examples of Sampling Techniques

As an illustration, stratified sampling suits an analysis of user behavior across demographic groups: dividing users by age range before sampling guarantees that every generation is represented when comparing website engagement. Simple random sampling is adequate for testing the effectiveness of a new marketing campaign across all traffic. In both cases, if the sample is too small, the results can be misleading.

Sampling Methods Comparison

| Method | Description | Pros | Cons |
|---|---|---|---|
| Simple Random Sampling | Each data point has an equal chance of selection. | Unbiased | Can be time-consuming for large datasets |
| Stratified Sampling | Dividing the population into subgroups and selecting samples from each. | Represents the characteristics of subgroups. | Requires knowledge of the population subgroups |

Factors Influencing Data Sampling Decisions

Choosing the right data sampling method in Google Analytics is crucial for accurate insights without overwhelming processing. A well-defined sampling strategy ensures that you extract meaningful information from your data while avoiding unnecessary computational burdens. This careful consideration hinges on several factors, including dataset size, accuracy requirements, and analysis goals. Understanding these factors allows for the creation of a sampling plan that effectively balances data representation with practical constraints.

This tailored approach optimizes the efficiency of your analysis while maintaining the integrity of your findings.

Dataset Size Impact on Sampling Strategies

The sheer volume of data collected by Google Analytics significantly impacts the feasibility of processing the entire dataset. Large datasets often necessitate sampling to perform efficient analyses. Sampling techniques allow for quicker processing times and reduced resource consumption, especially when dealing with massive datasets that can potentially overwhelm processing power and lead to longer analysis times. For example, a website with millions of monthly visitors will likely generate a vast amount of data that requires sampling.

This approach ensures efficient analysis without compromising the integrity of the conclusions drawn. Without sampling, the processing time might extend to an impractical duration, rendering the insights irrelevant.

Sampling can still skew results, so consider the sample size and potential biases when interpreting the findings in your Google Analytics reports. Careful analysis remains necessary for accurate conclusions.

Data Accuracy Requirements Impact on Sampling

The desired level of accuracy in the analysis directly influences the sampling method chosen. If a high degree of precision is required, a larger sample size is necessary to minimize potential sampling errors and ensure that the sample accurately reflects the population. Conversely, less stringent accuracy needs can justify smaller sample sizes, enabling faster processing and analysis. For instance, if the goal is to estimate website traffic trends, a certain level of accuracy might be sufficient.

However, if the goal is to precisely pinpoint the conversion rate for a specific promotional campaign, a more comprehensive and potentially larger sample would be necessary.

Analysis Goals Impact on Sampling Choices

Different analysis goals require different sampling strategies. An analysis aimed at understanding overall user behavior or general trends can employ simple random sampling. In contrast, an analysis targeting specific user segments or demographics is better served by a stratified random sample, which guarantees that each of the diverse user groups is represented. Cluster sampling is an option when whole groups of records, such as entire days or entire sites, are easier to select than individual data points.

This ensures that the insights derived from the sample are directly relevant to the analysis goals.

Flowchart for Determining Appropriate Sampling Strategies

A flowchart can effectively visualize the process of determining the most suitable sampling strategy.

Start → Assess Dataset Size (Large/Small) → Define Data Accuracy Requirements (High/Low) → Specify Analysis Goals (General Trends/Specific Segments) → Choose Sampling Method (Simple Random/Stratified/Cluster) → Determine Sample Size (Based on Accuracy & Goals) → Implement Sampling Strategy → Analyze Results → End

This structured approach guides the selection of an appropriate sampling method, ensuring that the chosen strategy aligns with the dataset characteristics, accuracy requirements, and analytical objectives. This flowchart ensures a systematic approach to sampling, promoting efficient and accurate analysis.
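The decision steps of the flowchart can also be written down as a small function. The sketch below is one possible encoding, assuming hypothetical input labels (`"high"`/`"low"`, `"general_trends"`/`"specific_segments"`); the sampling fractions it returns are placeholder values chosen for illustration, not recommendations.

```python
def choose_sampling_strategy(dataset_is_large, accuracy_needed, goal):
    """Walk the flowchart: size -> accuracy -> goal -> method and sample fraction.

    accuracy_needed: "high" or "low"; goal: "general_trends" or "specific_segments".
    The returned fractions are placeholder values, not recommendations.
    """
    if not dataset_is_large:
        # Small datasets can be analyzed in full, so no sampling is needed.
        return {"method": "none (use full data)", "fraction": 1.0}
    method = "stratified" if goal == "specific_segments" else "simple_random"
    fraction = 0.25 if accuracy_needed == "high" else 0.05
    return {"method": method, "fraction": fraction}

print(choose_sampling_strategy(True, "high", "specific_segments"))
print(choose_sampling_strategy(False, "low", "general_trends"))
```

Writing the logic out this way makes the assumptions explicit and easy to review, which is the main benefit of the flowchart itself.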

Understanding Sampling Error

Google Analytics, while a powerful tool, sometimes relies on samples of user data to provide insights, typically when a report has to process more data than its limits allow. This sampling, while efficient, introduces a degree of uncertainty. Understanding this sampling error is crucial for interpreting results accurately and avoiding misleading conclusions. Incorrectly interpreting results due to sampling error can lead to poor business decisions, wasted resources, and ultimately, reduced profitability.

Sampling error, in the context of Google Analytics, represents the difference between the characteristics of the sample data and the characteristics of the entire population of users. Since only a portion of the total user activity is observed, there’s a chance that the sample doesn’t perfectly reflect the true behavior of all users. This inherent variability necessitates a cautious approach to interpreting any insights derived from the data.

Impact of Sampling Error on Results Interpretation

Sampling error directly influences how we interpret Google Analytics data. A high sampling error can lead to inaccurate estimations of key metrics like conversion rates, bounce rates, and user engagement. For example, a sample showing a significantly higher conversion rate than the actual population could misrepresent the true effectiveness of a marketing campaign, leading to an over-allocation of resources or a premature decision to halt a successful campaign.

Conversely, a sample indicating a lower conversion rate than reality could lead to missed opportunities and an underestimation of the campaign’s true potential.

Methods for Estimating Sampling Error

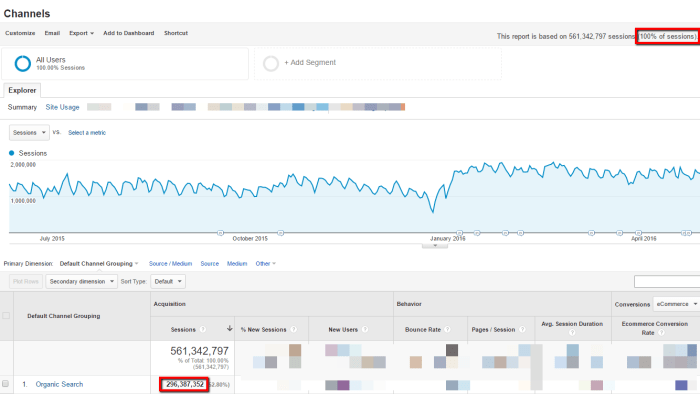

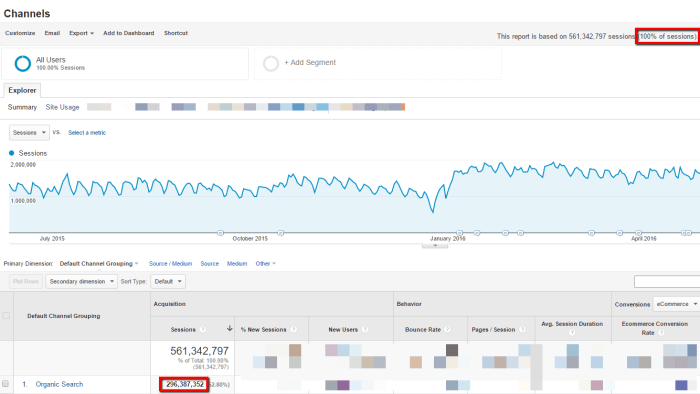

Google Analytics does not report confidence intervals for sampled metrics. What it does show, when a report is sampled, is an indicator stating what share of the data the report is based on (a percentage of sessions in Universal Analytics, a percentage of available data in GA4). A larger share generally corresponds to a lower sampling error. To quantify the uncertainty, you can compute a confidence interval yourself from the sample size and the observed rate; the interval gives a range within which the true population value likely falls.

The wider the interval, the higher the sampling error. For example, if a conversion rate is estimated at 10% with a confidence interval of ± 2%, the true conversion rate is likely somewhere between 8% and 12%.
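A confidence interval like the one in this example can be computed by hand. The sketch below uses the normal-approximation (Wald) interval for a proportion, which is a standard textbook formula rather than anything specific to Google Analytics; the function name and the figures (86 conversions in 860 sampled sessions) are made up for illustration.

```python
import math

def proportion_confidence_interval(conversions, sample_size, z=1.96):
    """Normal-approximation (Wald) interval for a rate; z=1.96 is roughly 95% confidence."""
    rate = conversions / sample_size
    margin = z * math.sqrt(rate * (1 - rate) / sample_size)
    # Clamp to [0, 1] because a rate cannot fall outside that range.
    return rate, max(0.0, rate - margin), min(1.0, rate + margin)

rate, low, high = proportion_confidence_interval(conversions=86, sample_size=860)
print(f"rate = {rate:.1%}, interval = {low:.1%} to {high:.1%}")
```

With these inputs the interval comes out at about 8% to 12%, so a 10% conversion rate with a ± 2% margin corresponds to a sample of roughly 860 sessions. The approximation is weaker for very small samples or rates close to 0% or 100%.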

Mitigating Sampling Error in Google Analytics

Several strategies can help mitigate the impact of sampling error in Google Analytics:

- Analyzing aggregated data: Focus on high-level trends and patterns rather than individual metrics, as aggregated data are less susceptible to the fluctuations caused by sampling error. For example, looking at overall traffic sources over time rather than specific traffic sources for a single day.

- Employing multiple data points: Review results over several periods, such as weekly or monthly, to identify consistent trends. This helps to reduce the impact of any single sample’s variability and gain a more holistic understanding of the data.

- Understanding confidence intervals: Google Analytics does not display confidence intervals, so estimate them yourself for the metrics that matter and interpret results within that range. For example, a confidence interval of ± 5% on a metric indicates that the true population value is likely within 5 percentage points of the sample value.

- Using larger samples where possible: For critical analyses, increase the share of data behind the report where you can, especially when examining segments or specific user groups. Shortening the date range or simplifying the query reduces the data the report has to process, which typically raises the sampling percentage or removes sampling altogether.

Illustrative Table of Sampling Error Levels

The table below provides a simplified illustration of sampling error levels and their implications:

| Error Level | Description | Impact |
|---|---|---|
| Low | Minimal error; the sample closely represents the population. | Results are highly reliable and can be used with confidence for decision-making. |
| Medium | Moderate error; the sample somewhat represents the population, but some discrepancies exist. | Results are somewhat reliable, but should be interpreted with caution. Additional data points or analysis might be beneficial. |
| High | Significant error; the sample significantly deviates from the population. | Results are unreliable and should not be used for decision-making. Further investigation or collection of a larger sample is required. |

Interpreting Sampled Data in Google Analytics

Navigating Google Analytics reports often involves dealing with sampled data. Understanding how to interpret this data accurately is crucial for making informed decisions. While sampling speeds up report generation, it introduces a degree of uncertainty that must be considered. This section will provide guidelines for effectively interpreting sampled data in Google Analytics, highlighting its limitations and potential biases.

Sampling in Google Analytics allows for rapid data retrieval and processing, which is particularly beneficial for large datasets. However, it’s important to remember that sampled data is an estimate of the entire population, not a complete count. This means the figures you see might not precisely reflect the complete picture, and the level of precision is directly related to the sample size.

Guidelines for Interpreting Sampled Data

Understanding the limitations of sampling is paramount when interpreting data. Sampled reports are valuable snapshots but not complete representations. Always review the sample size to assess its reliability. A larger sample size generally provides a more accurate reflection of the overall data.

Limitations of Sampled Data for Specific Analyses

Certain types of analysis are significantly impacted by sampling. For example, highly granular or specific comparisons, like examining user behavior on individual pages, might be less reliable with sampled data. Analysis requiring precise estimations, such as A/B testing, should be approached cautiously. In these cases, it’s recommended to consider a full data report if accuracy is paramount.

Methods for Checking Reliability

Multiple methods can help assess the reliability of sampled data. Firstly, always check the sample size displayed in the report. Secondly, look for any indicators of statistical significance, if available. Thirdly, consider the margin of error associated with the sampled data, and be aware that this margin of error can be larger for smaller samples. A larger margin of error suggests a higher level of uncertainty in the results.
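When a sampled report shows a count, the margin of error can be approximated from the sampling rate. The sketch below assumes the sample behaves like a simple random sample of sessions, which may not match how a given report was actually sampled; the function name and the input figures are hypothetical.

```python
import math

def scale_sampled_count(sampled_hits, sampled_sessions, sampling_rate, z=1.96):
    """Scale a count seen in a sample up to the full population, with a rough margin.

    Assumes a simple random sample of sessions; sampling_rate is the share of
    sessions the report is based on (0 < sampling_rate <= 1).
    """
    p = sampled_hits / sampled_sessions
    total_sessions = sampled_sessions / sampling_rate
    estimate = p * total_sessions
    # Finite population correction: the error vanishes as the sample nears 100%.
    fpc = math.sqrt(1 - sampling_rate)
    std_error = total_sessions * math.sqrt(p * (1 - p) / sampled_sessions) * fpc
    return estimate, z * std_error

estimate, margin = scale_sampled_count(
    sampled_hits=400, sampled_sessions=20_000, sampling_rate=0.10
)
print(f"estimated total: {estimate:,.0f} +/- {margin:,.0f}")
```

The same 400 observed conversions would carry a much smaller margin if they came from a report based on 90% of sessions, which is why the sampling percentage is worth checking before trusting a figure.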

Identifying Potential Biases in Sampled Data

Sampling bias can occur if the sample doesn’t accurately represent the entire population. In Google Analytics, this can happen if the sample fails to capture variations in user behavior or specific segments. For example, if the sample disproportionately represents users from a specific geographic location or demographic, the data may be skewed. Google Analytics does not show which sessions were selected, so where possible, compare segment shares in the sampled report against an unsampled report for the same period to assess potential bias.

Examples of Misleading Sampled Data

A common example of misleading sampled data occurs when interpreting conversion rates. A seemingly high conversion rate based on a small sample might not hold true for the overall population. Another example involves analyzing website traffic patterns. A sampled report might suggest high traffic from a particular region, but a full data report might reveal this is a temporary trend or specific to a promotion.

The key takeaway is to avoid drawing definitive conclusions from sampled data without considering the sample size and potential biases.

Tools and Techniques for Data Sampling

Data sampling in Google Analytics allows for analysis of large datasets without processing the entire volume. This significantly speeds up the process and reduces computational costs. Effective sampling strategies are crucial for accurate insights, especially when dealing with massive datasets. Understanding the available tools and techniques empowers analysts to perform efficient and reliable data sampling.

Available Tools for Data Sampling in Google Analytics

Google Analytics applies sampling automatically and offers only limited control over it. You cannot set an arbitrary sample size; Universal Analytics offered a precision setting that traded response time for a larger sample, and Analytics 360 properties can request unsampled reports. Beyond the platform’s built-in features, external tools can further enhance the process: exporting raw data (for example, through the BigQuery export) lets you run your own, fully customized sampling or avoid sampling entirely, which can prove invaluable for complex analyses.

Implementing Data Sampling Tools Effectively

To effectively utilize Google Analytics’ built-in sampling capabilities, you need to understand the trade-offs between sample size and accuracy. A larger sample size generally leads to greater accuracy, but it also requires more computational resources. The ideal sample size balances these factors to provide accurate insights without excessive processing time. Furthermore, carefully considering the criteria for selecting the sample is crucial to ensure that the results are representative of the entire dataset.

Setting Up the Tools for Accurate Sampling

Because Google Analytics does not let you choose the sampling method, configuration in practice means shaping the query so that sampling is light or absent: the date range, the segments applied, and the complexity of the report all determine how much data must be processed. When you sample exported data yourself, you control both the sample size and the method directly. In either case, it’s important to understand how these choices affect the representativeness of the sample, so that the insights derived from the sampled data accurately reflect the full dataset.

Step-by-Step Guide to Data Sampling in Google Analytics

- Define the Objectives: Clearly articulate the analysis goals. What specific questions are you trying to answer using the sampled data? This clarity guides the sampling strategy.

- Choose the Sampling Method: Select an appropriate sampling method based on the analysis needs. Random sampling is generally preferred for unbiased results.

- Determine the Sample Size: Consider the desired level of accuracy and the resources available. A larger sample size generally provides more accurate results but increases processing time. The sample size should be calculated based on the confidence level required for the analysis.

- Configure the Report: Check whether the report is sampled and, if so, what share of the data it uses. Adjust the date range or simplify the query until the sample is large enough for the defined objectives, or apply your chosen method to exported data.

- Analyze the Sampled Data: Use the sampled data to generate insights. Interpret the results within the context of the sampling method and sample size. Ensure that the conclusions drawn are not overly generalized.

- Validate the Results: If possible, compare the insights derived from the sampled data to results from a complete dataset. This validation step helps to assess the accuracy of the sampled data and the validity of the conclusions.
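The validation step at the end of this guide can be sketched as a simple comparison between a sampled report and a full one. The metric names, the figures, and the 5% tolerance below are all made up for illustration; choose a tolerance that fits the decision you are making.

```python
def relative_differences(sampled, full):
    """Return the relative difference of each sampled metric from its full-data value."""
    return {
        name: (sampled[name] - full[name]) / full[name]
        for name in full
        if name in sampled and full[name] != 0
    }

def flag_unreliable(sampled, full, tolerance=0.05):
    """List metrics whose sampled value is off by more than the tolerance (5% here)."""
    diffs = relative_differences(sampled, full)
    return sorted(name for name, diff in diffs.items() if abs(diff) > tolerance)

# Made-up figures for illustration only.
sampled_report = {"sessions": 98_500, "conversions": 2_300, "bounce_rate": 0.47}
full_report = {"sessions": 100_000, "conversions": 2_000, "bounce_rate": 0.46}
print(flag_unreliable(sampled_report, full_report))
```

In this example only `conversions` is flagged: it is off by 15%, while sessions and bounce rate stay within the tolerance. Rare events are usually the first metrics to drift in a sampled report.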

Best Practices for Data Sampling in Google Analytics

Data sampling in Google Analytics can significantly speed up analysis and reduce processing time, especially when dealing with massive datasets. However, to ensure the accuracy and reliability of the insights gleaned, careful consideration of best practices is crucial. This section outlines key strategies for effective data sampling, along with common pitfalls to avoid.

Effective data sampling isn’t just about speed; it’s about getting reliable results that accurately represent the larger population. The right approach can provide a comprehensive view of your data, minimizing potential biases and allowing for more in-depth analysis.

Sample Size Determination

Determining the appropriate sample size is paramount for ensuring the representativeness of the sample. A sample size that’s too small might not accurately reflect the characteristics of the entire population, leading to inaccurate conclusions. Conversely, an excessively large sample size can be inefficient and unnecessary, consuming more processing time and resources. Statistical methods, such as confidence intervals and margin of error calculations, play a crucial role in determining the optimal sample size for a given level of confidence and precision.

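Consider factors like the variability within your data and the desired level of confidence when making this critical decision. A common rule of thumb is that a sample size of about 1,000 is sufficient for many purposes: for a rate estimated at 95% confidence, it gives a margin of error of roughly ± 3 percentage points in the worst case. Whether that is precise enough depends on the specifics of the data and the goals of the analysis; a rare event such as a 1% conversion rate needs a much larger sample to be measured with useful relative precision.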
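The relationship between margin of error, confidence level, and sample size can be made concrete with Cochran's formula for a proportion. The sketch below is a standard statistical calculation, not a Google Analytics feature; the function name and default values are assumptions chosen for the example.

```python
import math

def required_sample_size(margin_of_error, expected_rate=0.5, z=1.96, population=None):
    """Sample size for estimating a proportion (Cochran's formula).

    expected_rate=0.5 is the most conservative guess; z=1.96 is roughly 95% confidence.
    If population is given, a finite population correction is applied.
    """
    n = (z ** 2) * expected_rate * (1 - expected_rate) / margin_of_error ** 2
    if population is not None:
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)

print(required_sample_size(0.03))                    # large population
print(required_sample_size(0.03, population=5_000))  # small site with 5,000 sessions
print(required_sample_size(0.01))                    # tighter margin
```

A ± 3 point margin needs a little over a thousand sessions, which is where rules of thumb of roughly 1,000 come from. Halving the margin of error roughly quadruples the required sample, so tight precision targets get expensive quickly.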

Considering the Nature of the Data

The characteristics of the data being sampled greatly influence the effectiveness of the sampling strategy. Different types of data require different sampling methods. For example, if your data is skewed or has outliers, you might need a more complex sampling approach to avoid skewing your results. Analyzing the distribution of the data and identifying any potential biases is crucial.

Consider the variables you are analyzing, and how they might influence your sampling strategy. If certain segments of your data are disproportionately represented, you may need to use stratified sampling to ensure all relevant segments are adequately represented.

Checklist for Accuracy and Reliability

To ensure accurate and reliable data sampling, a structured approach is necessary. This checklist helps to guide the sampling process and identify potential errors.

- Define Clear Objectives: Clearly define the specific questions you want to answer using the sampled data. This helps ensure the sampling method aligns with the objectives.

- Identify the Population: Clearly delineate the target population you wish to sample. Understanding the population characteristics is crucial.

- Choose an Appropriate Sampling Method: Select the sampling method that best suits the nature of the data and the analysis goals. Common methods include simple random sampling, stratified sampling, and cluster sampling.

- Determine Sample Size: Employ statistical methods to calculate the appropriate sample size based on the desired level of confidence and margin of error. Consider the variability within your data.

- Randomization: Utilize a random number generator to select sample data points to ensure unbiased selection. Manual selection can introduce human bias.

- Data Validation: Verify the sampled data for accuracy and completeness. Look for inconsistencies or errors.

- Review and Revise: Review the results and consider potential biases. If necessary, adjust the sampling strategy for more accurate insights.
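For the randomization item in this checklist, a seeded random number generator keeps the selection both unbiased and repeatable when you sample exported data yourself. The sketch below uses Python's standard `random` module; the session ID format and the function name are hypothetical.

```python
import random

def reproducible_sample(ids, sample_size, seed):
    """Draw a random sample that can be regenerated exactly from the same seed."""
    rng = random.Random(seed)
    # Sort first so the result does not depend on the order the IDs arrive in.
    return rng.sample(sorted(ids), sample_size)

session_ids = [f"s{i:05d}" for i in range(10_000)]
first = reproducible_sample(session_ids, 100, seed=2024)
second = reproducible_sample(reversed(session_ids), 100, seed=2024)
print(first == second)
```

Recording the seed alongside the analysis lets a colleague regenerate the exact same sample later, which makes the data validation and review steps much easier to carry out.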

Common Mistakes to Avoid

Several common mistakes can undermine the validity of data sampling. These errors can lead to inaccurate conclusions and misleading interpretations.

- Sampling without a clear objective: Without defined objectives, the sampling process lacks direction, making it difficult to determine whether the results are relevant.

- Using an inappropriate sampling method: Choosing a sampling method that does not align with the nature of the data can lead to skewed results.

- Insufficient sample size: A sample size that’s too small can fail to capture the true characteristics of the population.

- Ignoring data distribution: Failing to consider the distribution of the data can introduce significant biases.

- Not considering sampling error: Ignoring sampling error can lead to inaccurate conclusions about the population.

End of Discussion

In conclusion, data sampling in Google Analytics offers a valuable compromise between speed and accuracy. By understanding the principles, methods, and factors influencing sampling decisions, you can leverage this technique effectively to gain actionable insights from your website data. Remember to carefully consider potential sampling errors and interpret results cautiously, especially when making critical business decisions. This knowledge will help you avoid common pitfalls and maximize the benefits of data sampling for your Google Analytics analysis.