A/B testing is crucial for maximizing the effectiveness of email campaigns. This guide covers the strategies, techniques, and best practices for optimizing your email campaigns through rigorous testing: how to define objectives, craft effective hypotheses, select variables, implement tests, analyze results, and ultimately optimize your campaigns for better engagement and conversions.

From understanding the fundamental principles of A/B testing to mastering advanced techniques like multivariate testing and personalization, this guide equips you with the knowledge to elevate your email marketing efforts. We’ll dissect various email elements that can be tested, like subject lines, preheaders, and calls to action, and examine the key metrics to track for meaningful insights.

Introduction to A/B Testing Email Campaigns

A/B testing in email marketing is a crucial technique for optimizing campaign performance. It involves creating variations of an email element (the subject line, body content, and so on) and sending each variation to a randomly selected group from your email list. By comparing the responses and engagement metrics of each group, you can identify which version performs best and refine your campaigns for higher open rates, click-through rates, and conversions. Understanding which email elements resonate most strongly with your audience is paramount.

A/B testing provides valuable insights, allowing you to fine-tune your approach and create emails that achieve desired results. This iterative process of experimentation and refinement is essential for achieving success in email marketing.

Importance of A/B Testing for Email Campaigns

A/B testing is essential for maximizing the impact of your email marketing efforts. By identifying what resonates best with your audience, you can optimize your campaigns to improve open rates, click-through rates, and, ultimately, conversions. For example, if a subject-line test finds a variation that lifts open rates by 20%, you can adopt the winning approach in future campaigns.

Key Benefits of Using A/B Testing for Email Campaigns

A/B testing offers several key benefits for email campaigns. It enables data-driven decision-making, which can lead to higher conversion rates and improved ROI, and it supports continuous improvement, because you can keep refining your email strategy based on measured performance.

- Increased Engagement: By testing different elements, you can pinpoint what attracts your audience the most, leading to higher open and click-through rates.

- Improved Conversion Rates: Optimizing your email content through A/B testing can translate directly into more conversions, from sales to lead generation.

- Enhanced ROI: A/B testing helps you spend your budget effectively by focusing on the most effective elements of your emails, resulting in a higher return on investment.

- Data-Driven Insights: A/B testing provides concrete data on what works best with your audience, enabling informed decisions for future email campaigns.

Types of Email Elements for A/B Testing

Understanding which elements to test is crucial for effective A/B testing. The following table outlines common email elements that can be tested to improve campaign performance.

| Email Element | Description |
|---|---|
| Subject Lines | The subject line is the first impression. Testing different subject lines can significantly impact open rates. |
| Preheader Text | The preheader text appears below or beside the subject line in many email clients. Because recipients see it before opening, testing different preheader texts mainly influences open rates. |
| Call-to-Action (CTA) Buttons | CTAs are essential for driving conversions. Testing different button colors, text, and placement can significantly affect click-through rates. |
| Email Body Content | The main body content of the email should be tailored to the audience. Testing different content variations can increase engagement and conversions. |
| Email Design | Visual elements such as images, layout, and color schemes can affect the overall appeal and engagement of the email. |
| Sending Time | The best time to send emails can vary greatly based on your audience. Testing different send times can increase open rates and engagement. |

Defining Objectives and Metrics for A/B Testing

A/B testing email campaigns is more than just sending out different versions of an email. It’s a strategic process that requires careful planning and measurement. Clear objectives and a robust set of metrics are needed to interpret the results and optimize future campaigns; without them, much of the value of A/B testing is lost. Defining specific objectives and relevant metrics allows for a focused, data-driven approach to email campaign optimization.

This ensures that your testing efforts are aligned with your overall business goals and that you’re making decisions based on tangible results, not guesswork.

SMART Objectives for Email Campaigns

To ensure your A/B testing is effective, your objectives must be SMART: Specific, Measurable, Achievable, Relevant, and Time-bound. This framework helps you focus your efforts and measure your success. For example, instead of a vague objective like “improve email engagement,” a SMART objective might be “increase email open rates by 10% within the next quarter by testing subject lines and preheader text.”
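A target such as “increase open rates by 10%” only becomes measurable once you decide whether the 10% is relative to the baseline or an absolute change in percentage points: from a 20% baseline, the first means 22% and the second means 30%. A minimal Python sketch of that check follows; the function names and rates are hypothetical.

```python
def relative_lift(baseline: float, observed: float) -> float:
    """Relative change of `observed` over `baseline` (0.10 means +10%)."""
    if baseline <= 0:
        raise ValueError("baseline rate must be positive")
    return (observed - baseline) / baseline


def meets_target(baseline: float, observed: float, target: float,
                 relative: bool = True) -> bool:
    """Check a SMART-style target, stated either as a relative lift
    (a fraction of the baseline) or as an absolute change in the rate."""
    if relative:
        return relative_lift(baseline, observed) >= target
    return observed - baseline >= target


# Hypothetical quarter: the open rate moved from 20% to 22.5%.
baseline, observed = 0.20, 0.225
print(f"relative lift: {relative_lift(baseline, observed):.1%}")
print("10% relative target met:", meets_target(baseline, observed, 0.10))
print("10-point absolute target met:",
      meets_target(baseline, observed, 0.10, relative=False))
```

Writing the objective as “open rate from 20% to at least 22% (a 10% relative lift)” removes the ambiguity before the test starts.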

Choosing Relevant Key Performance Indicators (KPIs)

Selecting the right KPIs is essential for evaluating the effectiveness of your A/B tests. They should directly reflect the goals you’ve set for your campaigns. A good KPI should be actionable, and its improvement should lead to tangible results in your business.

Comparing Email Campaign KPIs

| KPI | Description | Impact on Campaign Success |
|---|---|---|
| Open Rate | Percentage of recipients who opened the email. | Higher open rates indicate better subject line performance and improved recipient engagement. |
| Click-Through Rate (CTR) | Percentage of recipients who clicked on a link within the email. | High CTRs suggest relevant content and compelling calls to action. |
| Conversion Rate | Percentage of recipients who completed a desired action (e.g., making a purchase, signing up for a newsletter). | Conversion rate directly reflects the effectiveness of the email in driving desired outcomes. |
| Unsubscribe Rate | Percentage of recipients who unsubscribed from the email list. | A high unsubscribe rate may indicate irrelevant content, poor email design, or excessive sending frequency. |
| Bounce Rate | Percentage of emails that could not be delivered. | High bounce rates suggest invalid email addresses or server issues, reducing the campaign’s reach and overall success. |
| Delivery Rate | Percentage of sent emails accepted by recipients’ mail servers (acceptance does not guarantee inbox placement). | A low delivery rate signals problems with your sending infrastructure or list quality and can significantly undermine the entire campaign. |
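The KPIs in the table can be computed from a campaign’s raw counts. A minimal Python sketch follows; the field names are hypothetical, and using delivered emails (sent minus bounced) as the denominator for engagement rates is an assumption, since platforms differ on this. “Delivered” here means accepted by the receiving mail server, which is not the same as landing in the inbox.

```python
from dataclasses import dataclass


@dataclass
class CampaignCounts:
    sent: int
    bounced: int
    unique_opens: int
    unique_clicks: int
    conversions: int
    unsubscribes: int

    @property
    def delivered(self) -> int:
        return self.sent - self.bounced


def kpis(c: CampaignCounts) -> dict[str, float]:
    """Compute the table's KPIs. Engagement rates use delivered emails as
    the denominator (an assumption; check how your platform defines them)."""
    if c.sent <= 0 or c.delivered <= 0:
        raise ValueError("need at least one delivered email")
    d = c.delivered
    return {
        "delivery_rate": d / c.sent,
        "bounce_rate": c.bounced / c.sent,
        "open_rate": c.unique_opens / d,
        "click_through_rate": c.unique_clicks / d,
        "conversion_rate": c.conversions / d,
        "unsubscribe_rate": c.unsubscribes / d,
    }


# Hypothetical counts for one variant.
counts = CampaignCounts(sent=10_000, bounced=200, unique_opens=2_450,
                        unique_clicks=392, conversions=98, unsubscribes=20)
for name, value in kpis(counts).items():
    print(f"{name}: {value:.2%}")
```

Computing every variant’s KPIs with the same function guarantees that both sides of a test use identical definitions.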

Examples of Measurable KPIs

Open rates, click-through rates, conversion rates, and unsubscribe rates are all common and measurable KPIs. For example, if your objective is to increase sales through email, then the conversion rate is a crucial KPI. Tracking and analyzing these metrics provides valuable insights into the effectiveness of your email campaigns and helps you identify areas for improvement. If the open rate is low, you may need to optimize your subject lines and preheader text.

A high unsubscribe rate might indicate a need to improve the relevance of your content.

Crafting Effective A/B Test Hypotheses

Formulating testable hypotheses is crucial for successful A/B testing of email campaigns. It’s not enough to simply guess what might work; you need a clear, data-driven expectation of how a change to an email element will affect performance. A well-defined hypothesis allows you to measure the effect of your changes and draw meaningful conclusions. Effective hypotheses are specific, measurable, achievable, relevant, and time-bound (SMART).

They predict a specific outcome and allow for testing and analysis to determine whether that prediction is accurate. They provide a roadmap for your A/B testing, guiding your decisions and ensuring your efforts are focused on the most impactful elements.

Defining Testable Hypotheses

A hypothesis is a statement that proposes a possible explanation or prediction about a phenomenon. In the context of email A/B testing, it’s a prediction about how changes to email elements will affect campaign performance. It’s important to base these hypotheses on previous data, industry best practices, and your understanding of your target audience. For example, if you’ve observed that longer subject lines tend to generate higher open rates, you might hypothesize that lengthening your shorter subject lines will increase their open rates.

Examples of Well-Defined Hypotheses

- Changing the subject line from “Limited-time offer!” to “Unlock exclusive discounts!” will increase open rates by 10% within the next 2 weeks.

- Using a button-style call-to-action instead of a link in the email body will result in a 15% increase in click-through rates.

- Including a personalized greeting in the email body will increase click-through rate compared to a generic greeting. (Because most recipients see the body only after opening, open rate is a weak measure for this change.)

Importance of Data-Driven Reasoning

Data-driven reasoning is essential for creating effective hypotheses. By analyzing past email campaign performance data, you can identify trends and patterns that suggest which elements are most likely to influence open rates, click-through rates, or conversions. For example, if your previous campaigns have shown that emails with specific keywords in the subject line have higher open rates, you can use this information to formulate hypotheses about similar keywords in future campaigns.

Hypothesis Statements for Email Subject Lines

| Hypothesis Statement | Metric | Predicted Outcome |
|---|---|---|
| Changing the subject line from “Limited-Time Offer!” to “Exclusive Deals for You!” will increase open rates by 5%. | Open Rate | Increase |
| Using a subject line with a question mark (“Looking for a new phone?”) will increase open rates compared to a declarative subject line (“Shop our new phones now!”). | Open Rate | Increase |
| Including a specific product name in the subject line will result in a 10% increase in click-through rate compared to a subject line without a specific product name. | Click-Through Rate | Increase |
| Subject lines containing emojis will increase open rates compared to those without emojis. | Open Rate | Increase |

Selecting Variables for A/B Testing

Choosing the right variables for A/B testing email campaigns is crucial for achieving meaningful results. Without careful selection, you risk wasting time and resources on tests that don’t provide actionable insights. A well-defined testing strategy focused on the most impactful variables significantly increases the chances of improving your email performance. This section covers how to select and prioritize variables for A/B testing, including the role of user segmentation. Identifying the key variables that influence email open rates, click-through rates, and conversions is paramount.

A methodical approach to selecting these variables is essential for generating actionable results from your A/B testing efforts.

Variable Selection Process

The process of selecting variables for A/B testing involves a multi-step approach. Start by identifying the key performance indicators (KPIs) you want to improve, such as open rates or conversion rates. Then, determine the potential variables that might affect these KPIs. This includes elements like subject lines, email content, calls to action, send times, and even preheader text.

Prioritizing Variables Based on Potential Impact

To maximize the effectiveness of your A/B testing, prioritize variables based on their potential impact. Consider which changes are likely to have the largest effect on your KPIs. For example, a poorly written subject line could significantly reduce open rates, making it a high-priority variable for testing. Conversely, minor changes to the email’s background color might have minimal impact.

A structured approach to prioritizing potential variables will guide your testing efforts toward the most impactful areas.
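One way to make that prioritization explicit is an ICE-style score: impact, confidence, and ease, each rated 1-10 and multiplied together. This is a common growth-marketing heuristic that this guide does not itself prescribe, and the ratings below are hypothetical judgments rather than measured data.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    impact: int      # 1-10: expected effect on the target KPI
    confidence: int  # 1-10: how much evidence supports that expectation
    ease: int        # 1-10: how cheap the test is to build and run

    @property
    def score(self) -> int:
        # Multiplying the ratings is one ICE variant; some teams average them.
        return self.impact * self.confidence * self.ease


# Hypothetical ratings for illustration only.
candidates = [
    Candidate("subject line", impact=8, confidence=7, ease=9),
    Candidate("CTA wording", impact=7, confidence=6, ease=8),
    Candidate("background color", impact=2, confidence=3, ease=9),
]

for c in sorted(candidates, key=lambda c: c.score, reverse=True):
    print(f"{c.name:<18} {c.score}")
```

The value lies less in the numbers than in forcing each candidate’s expected impact to be written down and compared before testing begins.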

Considering User Segments When Choosing Variables

User segmentation is critical in selecting variables for A/B testing. Different segments of your audience might respond differently to various elements. For example, a subject line that resonates with one segment might not resonate with another. Therefore, tailor your tests to specific user segments to get a more granular understanding of what works best for each group.

Consider variables that might influence different user groups, such as their demographics, purchase history, or engagement level.
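If you plan to compare results by segment, it helps to randomize within each segment (stratified assignment) so that every segment is split evenly between variants. A minimal sketch follows, assuming a hypothetical `stratified_split` helper and a made-up list of subscribers.

```python
import random
from collections import defaultdict


def stratified_split(recipients, segment_of, variants=("A", "B"), seed=42):
    """Randomly assign recipients to variants within each segment, so that
    every segment is divided as evenly as possible between the variants.

    `segment_of` maps a recipient to a segment label; `seed` makes the
    assignment reproducible.
    """
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for recipient in recipients:
        by_segment[segment_of(recipient)].append(recipient)

    assignment = {}
    for members in by_segment.values():
        rng.shuffle(members)
        # Dealing the shuffled members round-robin keeps group sizes within one.
        for i, recipient in enumerate(members):
            assignment[recipient] = variants[i % len(variants)]
    return assignment


# Hypothetical list: 60 new subscribers and 40 loyal customers.
recipients = [f"user{i}@example.com" for i in range(100)]
segment = {r: ("new" if i < 60 else "loyal") for i, r in enumerate(recipients)}
groups = stratified_split(recipients, segment.get)

for seg in ("new", "loyal"):
    sizes = {v: sum(1 for r, g in groups.items() if segment[r] == seg and g == v)
             for v in ("A", "B")}
    print(seg, sizes)
```

A plain random split of the whole list would usually come close to this balance, but stratifying guarantees it, which matters most for small segments.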

Identifying Key Variables for Testing

One method for identifying the most important variables to test involves a structured analysis of your existing email campaigns and performance data. This approach can be broken down into the following steps:

- Review historical campaign data: Analyze open rates, click-through rates, and conversion rates for previous email campaigns. Identify patterns and trends in performance across different campaigns. Did certain subject lines consistently perform better? Did certain calls to action generate higher conversion rates? Identify potential variables that are consistently correlated with positive outcomes.

- Analyze user feedback: Collect feedback from your audience through surveys, feedback forms, or comments. Look for common themes or suggestions related to email design, content, or calls to action. User feedback can highlight areas that need improvement and point to variables worth testing.

- Conduct competitive analysis: Research email campaigns from competitors. Identify elements that seem to resonate well with your target audience and note areas where competitors might be excelling. Identify potential variables you could test that mirror their successful approaches.

- Focus on areas with the biggest potential impact: Prioritize variables based on their predicted effect on your KPIs. Start with variables that are most likely to have a significant impact, like subject lines, calls to action, and email content. This strategic approach will maximize your testing resources and yield more actionable insights.

By combining these steps, you can identify the most important variables for testing in your email campaigns, maximizing the return on your A/B testing efforts.

Implementing and Running A/B Tests

A/B testing is a crucial part of optimizing an email marketing strategy. Knowing how to implement and run tests effectively ensures that your campaigns resonate with your audience and drive the results you want. This section covers the practical steps involved, from setup to analysis, so you can make data-driven decisions that improve email performance. Implementing A/B tests involves a structured approach to comparing different versions of your emails.

Careful planning and execution are key to accurate, reliable results, including the choice of platform, the design of control and variation groups, and an adequate sample size.

Setting Up and Running A/B Tests in Email Marketing Platforms

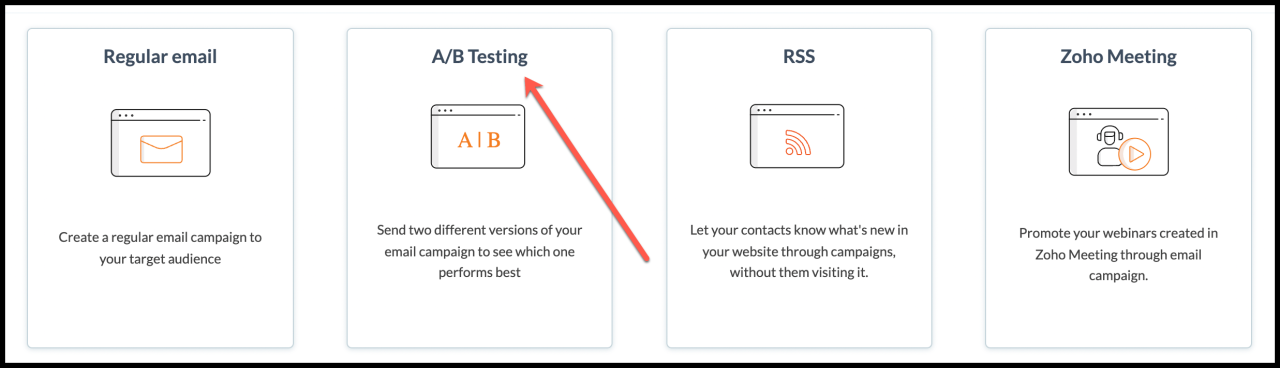

The A/B testing tools built into email marketing platforms streamline creating variations, tracking results, and analyzing data. To set up and run an A/B test, follow these steps:

- Define clear objectives: Establish measurable goals for the test. Are you aiming to increase open rates, click-through rates, or conversions?

- Select the variables for testing: Choose specific elements to modify, such as subject lines, call-to-action buttons, or email content.

- Create variation(s): Develop different versions of your email that test the selected variables. Each variation should test only one element.

- Set up the test in your email marketing platform: Utilize the platform’s A/B testing features to split your email list and send variations to different segments.

- Monitor results: Track key metrics like open rates, click-through rates, and conversions for each variation over a sufficient timeframe.

- Analyze and draw conclusions: Evaluate the results and determine which variation performed best. Document your findings.

Common Email Marketing Platforms and A/B Testing Capabilities

Various email marketing platforms offer A/B testing features. Here are some popular choices and their A/B testing capabilities:

| Platform | A/B Testing Capabilities |
|---|---|
| Mailchimp | Offers A/B testing for subject lines, preheader text, and email content. |
| Campaign Monitor | Provides comprehensive A/B testing, including subject lines, email content, and calls-to-action. |
| Constant Contact | Allows A/B testing for subject lines and email content. |
| Sendinblue | Provides A/B testing capabilities for subject lines, content, and calls-to-action. |
| ActiveCampaign | Offers robust A/B testing with advanced features, including segmentation and personalization. |

Designing Effective Control and Variation Groups

Creating effective control and variation groups is critical for accurate results. A control group receives the original email version, while variation groups receive the modified versions.

- Control Group: This group receives the current, proven version of your email. It serves as a benchmark for comparison.

- Variation Groups: These groups receive the modified versions of your email. Variations should focus on a single element for accurate assessment of its impact.
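Whatever tool you use, the split between control and variation groups should be random and should not change if a send is retried. A common way to achieve both is to hash a stable identifier. The sketch below assumes the email address is that identifier; the function name and test ID are hypothetical.

```python
import hashlib


def assign_variant(email: str, test_id: str, weights: dict[str, float]) -> str:
    """Deterministically map a subscriber to a group for one test.

    Hashing "test_id:email" gives a stable pseudo-random bucket in [0, 1):
    the same subscriber always lands in the same group for this test,
    while a different test_id reshuffles everyone independently.
    """
    if not weights or abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must be non-empty and sum to 1")
    key = f"{test_id}:{email.strip().lower()}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Keep 53 bits so the division is exact and the result stays below 1.
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    cumulative = 0.0
    for group, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return group
    return list(weights)[-1]  # guard against rounding in the cumulative sum


split = {"control": 0.5, "variation": 0.5}
print(assign_variant("jane@example.com", "subject-test-01", split))
```

Uneven weights, such as `{"control": 0.8, "variation": 0.2}`, work the same way when you want to limit exposure to an untested variation.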

Importance of Sample Size in A/B Tests

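The sample size directly affects the reliability of your A/B test results. With too small a sample, random fluctuations can easily produce a misleading winner, and a real improvement may go undetected. A sufficiently large sample, chosen before the test starts, gives the test enough statistical power to detect the smallest improvement you care about. No sample size guarantees a statistically significant result, however, since there may be no real difference to detect.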
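To choose the sample size in advance, a standard normal-approximation formula for comparing two proportions gives the number of recipients needed per group. The sketch below assumes a two-sided test at significance level `alpha` and a chosen `power` (the chance of detecting the lift if it is real); the 20% baseline and 22% target are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Recipients needed in EACH group to detect a change from p_control to
    p_variant with a two-sided two-proportion test (normal approximation)."""
    if not (0 < p_control < 1 and 0 < p_variant < 1) or p_control == p_variant:
        raise ValueError("rates must be in (0, 1) and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_power * math.sqrt(p_control * (1 - p_control)
                                    + p_variant * (1 - p_variant)))
    return math.ceil(spread ** 2 / (p_control - p_variant) ** 2)


# Hypothetical: 20% baseline open rate; the smallest lift worth detecting is to 22%.
print(sample_size_per_group(0.20, 0.22))
```

With these inputs the formula asks for roughly 6,500 recipients per group. Because the requirement scales with the inverse square of the difference, halving the lift you want to detect roughly quadruples it.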

Creating a Well-Structured Email Template for A/B Testing

A well-structured template is essential for effective A/B testing. A template should facilitate easy modification and clear tracking of results.

- Modular design: Break down the email into distinct modules (subject line, header, body, call-to-action). This enables testing specific components independently.

- Clear call-to-action: Use a compelling and easily visible call-to-action button.

- Mobile optimization: Ensure the email renders correctly on various devices, especially mobile phones.

- Consistent branding: Maintain a consistent brand identity throughout the email variations.

Analyzing and Interpreting A/B Test Results

Unveiling the winner of an email A/B test requires careful analysis. Simply observing higher open rates or click-throughs isn’t enough. We need to dig deeper to understand *why* a variation performed better and whether the difference is statistically significant. This involves understanding statistical significance, identifying patterns, and avoiding common pitfalls. A/B testing isn’t just about picking the highest number.

It’s about identifying the best-performing variation *and* understanding the factors that drove the difference. This analysis provides valuable insights for future campaigns and allows you to make data-driven decisions.

Statistical Significance Explained

Understanding the statistical significance of your results is crucial. A statistically significant difference is one that would be unlikely to arise from random chance alone if the control and variation actually performed the same. It’s not just about one metric being slightly higher; it’s about whether that difference is likely to hold up when the winner is sent to your whole list. A common threshold for statistical significance is a p-value below 0.05. The p-value is the probability of seeing a difference at least as large as the one observed if there were no real difference between the versions; it is not the probability that the result occurred by chance.
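For rate metrics such as open or click-through rate, a two-proportion z-test is one standard way to obtain that p-value. A minimal sketch using only the Python standard library follows; the counts (2,000 recipients per group, 15% vs 18% open rates) are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two rates.

    Returns (z statistic, p-value). Uses the pooled-variance normal
    approximation, which is reasonable when each group has plenty of
    successes and failures (e.g. opens and non-opens).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("outcomes do not vary across the two groups")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control 300 opens of 2,000 (15%), variation 360 of 2,000 (18%).
z, p = two_proportion_z_test(300, 2000, 360, 2000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts the p-value is about 0.01, below the 0.05 threshold. The same 15% and 18% rates measured on a different number of recipients would give a different p-value, which is why the sample size has to be part of the analysis.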

Interpreting Results: Steps to Success

Analyzing A/B test results involves several key steps. First, examine the raw data for each variation, including key metrics like open rates, click-through rates, and conversions. Next, calculate the statistical significance of the difference using an appropriate test: a chi-squared test or two-proportion z-test for rates such as open or click-through rate, or a t-test for continuous metrics such as revenue per recipient. Many email marketing platforms and dedicated A/B testing tools provide these calculations. Crucially, evaluate practical significance alongside statistical significance.

A statistically significant result might not always translate into a meaningful improvement. For example, a variation might have a slightly higher click-through rate, but the increase might be too small to justify the effort. Finally, document your findings and conclusions in a clear and concise manner.
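One way to weigh practical significance is to compute a confidence interval for the difference and compare it with the smallest lift that would be worth acting on, a threshold you set from business context. The sketch below uses a simple Wald interval; the 100,000-recipient groups and the one-percentage-point threshold are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(clicks_a: int, n_a: int, clicks_b: int, n_b: int,
                             level: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for (rate_b - rate_a), unpooled variance."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def verdict(ci: tuple[float, float], min_meaningful_lift: float) -> str:
    """Compare the interval with the smallest lift worth acting on."""
    low, high = ci
    if low >= min_meaningful_lift:
        return "ship: even the low end of the interval clears the bar"
    if high < min_meaningful_lift:
        return "skip: even the high end of the interval is too small to matter"
    if low > 0:
        return "real but uncertain size: consider a larger follow-up test"
    return "inconclusive: collect more data"


# Hypothetical: 100,000 recipients per group, CTR 10.0% vs 10.3%,
# and a business rule that anything under +1 point is not worth the change.
ci = diff_confidence_interval(10_000, 100_000, 10_300, 100_000)
print(f"95% CI for the lift: {ci[0]:+.2%} to {ci[1]:+.2%}")
print(verdict(ci, min_meaningful_lift=0.01))
```

Here the interval excludes zero, so the lift is statistically significant, yet even its upper end falls short of one percentage point: a significant result that is too small to justify the change.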

Identifying Patterns and Trends

Beyond individual metrics, look for broader trends. Are certain segments of your audience responding differently to variations? Are there correlations between specific design elements and improved performance? For instance, are users clicking on a button more often when it’s a different color? These patterns can reveal valuable insights that can inform future design decisions and marketing strategies.
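Breaking results down by segment is a straightforward way to look for such patterns. The sketch below assumes one hypothetical record per recipient of the form (segment, variant, clicked) and uses made-up counts. Each extra slice shrinks the sample and adds another comparison, so segment-level differences are best treated as hypotheses for a follow-up test.

```python
from collections import defaultdict


def rates_by_segment(records):
    """Click-through rate per segment and variant.

    `records` yields (segment, variant, clicked) tuples, one per recipient.
    Returns {segment: {variant: rate}}.
    """
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [clicks, recipients]
    for segment, variant, clicked in records:
        cell = counts[segment][variant]
        cell[0] += int(clicked)
        cell[1] += 1
    return {
        segment: {variant: clicks / total
                  for variant, (clicks, total) in by_variant.items()}
        for segment, by_variant in counts.items()
    }


def make_records(segment, variant, clicks, total):
    """Build hypothetical records: `clicks` clickers out of `total` recipients."""
    return [(segment, variant, i < clicks) for i in range(total)]


records = (make_records("new", "A", 10, 100) + make_records("new", "B", 20, 100)
           + make_records("loyal", "A", 30, 100) + make_records("loyal", "B", 25, 100))
for segment, by_variant in rates_by_segment(records).items():
    print(segment, {v: f"{r:.0%}" for v, r in by_variant.items()})
```

In this made-up data, variant B doubles the click-through rate for new subscribers but trails for loyal customers, a pattern that a single overall average would blur.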

Hypothetical A/B Test Results

| Variable Tested | Control Group | Variation Group | Statistical Significance (p-value) |
|---|---|---|---|
| Email Subject Line | “Sale Alert!” (Open Rate: 15%) | “Limited-Time Offer” (Open Rate: 18%) | 0.03 |
| Call-to-Action Button Color | Red (Click-Through Rate: 10%) | Green (Click-Through Rate: 12%) | 0.07 |
| Email Design | Traditional Layout (Conversion Rate: 5%) | Modern Layout (Conversion Rate: 6%) | 0.10 |

This table provides a hypothetical example. At the conventional 0.05 threshold, the subject-line result (p = 0.03) is a statistically significant improvement, while the other two results are not.

Avoiding Common Mistakes

Avoid the temptation to jump to conclusions based on small sample sizes or limited data. Always consider the statistical significance and practical significance of the results. Don’t focus solely on the variation with the highest metric; consider the context of the results. Beware of making decisions based on a single A/B test; replicate tests to confirm results and ensure consistency.

Also, consider external factors that might have influenced the results.

Optimizing Email Campaigns Based on A/B Test Results

A/B testing provides valuable insights into what resonates best with your audience. However, the true power of A/B testing lies in translating those insights into tangible improvements in your email campaigns. This involves more than identifying winning variations; it means integrating those changes strategically and continuously monitoring their impact. Implementing the winning variations effectively is crucial for maximizing return on investment.

This involves a careful and systematic approach, ensuring a smooth transition and minimizing disruption to existing workflows.

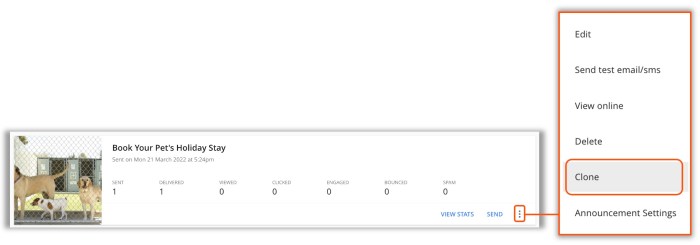

Implementing Winning Variations

Implementing the winning variations requires a methodical approach. First, meticulously analyze the results of the A/B test, focusing on the specific elements that contributed to the success of the winning variation. This includes not only the subject line and email content but also the design, calls to action, and overall user experience. Then, carefully plan the rollout of the winning variations.

Ensure a phased implementation to minimize any negative impact on your email open and click-through rates. This could involve testing on a small segment of your subscriber list before deploying it to the entire list.

Ongoing A/B Testing for Continuous Improvement

Ongoing A/B testing is essential for continuous improvement. Email marketing is a dynamic field; audience preferences and engagement patterns constantly evolve. By incorporating A/B testing into your email marketing strategy, you can adapt to these changes and maintain a high level of performance. This includes running regular A/B tests on various elements, such as subject lines, email content, and calls to action.

By proactively monitoring and responding to changes in audience behavior, you can maintain the effectiveness of your email campaigns.

Strategies for Optimizing Future Campaigns

Strategies for optimizing future campaigns should leverage the insights gained from A/B tests. Analyze the winning variations to identify common patterns and trends. This allows for the creation of targeted email campaigns that resonate with specific segments of your audience. For example, if a particular subject line performed exceptionally well in one test, consider using similar phrasing in future campaigns targeting similar demographics.

Examples of Applying A/B Test Results

One example of applying A/B test results is optimizing subject lines. If a shorter, more direct subject line outperformed a longer, more descriptive one, this suggests your audience may prefer brevity, a pattern worth confirming in further tests. Another example involves calls to action. If a specific call to action (CTA) yielded a higher click-through rate, replicate that CTA design and wording in future email campaigns.

Furthermore, consider tailoring content to different segments of your audience. If a specific segment responds better to a certain type of product promotion, tailor future emails specifically to address their needs.

Tracking Performance of Optimized Campaigns

Tracking the performance of optimized email campaigns is crucial for assessing the effectiveness of implemented changes. This involves monitoring key metrics, such as open rates, click-through rates, conversion rates, and unsubscribe rates. Regularly analyzing these metrics allows you to gauge the impact of your A/B test results and identify any areas that need further optimization. Utilize analytics tools to create dashboards that track these metrics.

This enables you to monitor performance over time, identifying any shifts or trends that could influence future A/B tests.

Advanced Techniques in Email A/B Testing

Email A/B testing is more than just tweaking subject lines and calls to action. Advanced techniques unlock deeper insights and help optimize campaigns for maximum impact. This section explores multivariate testing, personalization, dynamic content, and strategies to improve deliverability. Multivariate testing offers a more comprehensive approach than traditional A/B testing by letting marketers test multiple elements of an email simultaneously.

Instead of just comparing two variations, multivariate testing evaluates the impact of various combinations of elements, leading to more informed decisions about which elements resonate most effectively with the target audience.

Multivariate Testing in Email Campaigns

Multivariate testing (MVT) allows for the simultaneous evaluation of multiple variations of email elements. Instead of focusing on a single variable, MVT examines different combinations of subject lines, preheaders, calls-to-action, and even email layouts. This comprehensive approach reveals the optimal configuration of elements for higher engagement and conversions. For example, a retailer might test different subject lines, calls-to-action, and product images in combination to find the combination that yields the highest click-through rates.
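Because every factor multiplies the number of combinations, a full-factorial multivariate test grows quickly. The sketch below enumerates the cells for three hypothetical factors; the per-cell sample size is an assumption carried over from a two-variant sample-size calculation.

```python
from itertools import product

# Hypothetical factor levels for a full-factorial multivariate test.
factors = {
    "subject": ["Sale Alert!", "Limited-Time Offer"],
    "cta": ["Shop now", "See the deals"],
    "hero_image": ["product", "lifestyle", "none"],
}

# One dict per combination, e.g. {"subject": ..., "cta": ..., "hero_image": ...}.
cells = [dict(zip(factors, combo)) for combo in product(*factors.values())]
print(len(cells))  # 2 * 2 * 3 combinations

# Assumed recipients per cell, e.g. from a two-variant sample-size calculation.
n_per_cell = 6_500
print(f"recipients needed: {len(cells) * n_per_cell:,}")
```

Comparing individual combinations with the same precision as a simple A/B test needs roughly that many recipients in every cell, which is why multivariate tests are usually reserved for large lists.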

Personalizing Email Content with A/B Testing

Personalization can substantially improve the effectiveness of email marketing campaigns, and A/B testing helps marketers tailor content to individual preferences and behaviors. By segmenting recipients based on demographics, purchase history, or website interactions, you can test personalized emails against generic ones to see where personalization actually improves engagement. For instance, a company might send different emails to customers who have abandoned their shopping carts, offering various discounts or incentives to find the one most likely to convert those potential customers.

Dynamic Content in A/B Testing

Dynamic content in email campaigns allows the content to adapt to the recipient’s characteristics. This adaptability can be based on various factors, including demographics, past purchases, or even real-time interactions. A/B testing dynamic content is crucial to fine-tune the personalization process. By testing different dynamic content variations, marketers can discover which approaches resonate best with different segments of their audience.

For example, a travel agency might dynamically adjust the content of an email based on a customer’s past travel destinations, suggesting similar destinations or package deals.

Advanced A/B Testing Strategies for Email Campaigns

Advanced A/B testing strategies often incorporate multiple variables and dynamic content. For example, testing the combination of a personalized subject line, a tailored product recommendation, and a dynamic call-to-action based on the recipient’s past behavior can provide invaluable insights. This multi-layered approach allows marketers to create highly targeted and effective campaigns.

Improving Email Deliverability Rates Through A/B Testing

Email deliverability is critical to the success of any email marketing campaign. A/B testing can help identify and address potential deliverability issues. For example, testing different sender names, subject lines, and email content can reveal which versions are less likely to be flagged by spam filters. By iteratively testing and refining these elements, marketers can improve the rate at which their emails reach recipients’ inboxes.

Concluding Remarks

In conclusion, mastering A/B testing for email campaigns is a journey of continuous improvement. By carefully defining objectives, crafting effective hypotheses, and rigorously testing different variables, you can significantly boost your email campaign performance. Remember, the key is to leverage data-driven insights to optimize future campaigns and maximize return on investment. This guide provides a solid foundation for you to embark on this journey.