A/B testing can help perfect your Pardot emails, unlocking a world of possibilities for email marketing success. This in-depth guide explores how to use A/B testing to optimize your Pardot email campaigns, from crafting compelling subject lines to refining your calls to action. We’ll cover everything from the basics of A/B testing to advanced techniques, helping you create emails that resonate with your audience and drive conversions.

By understanding the key variables to test, you can pinpoint what truly resonates with your audience. From subject lines to email body content, we’ll dissect various elements and provide practical strategies for setting up effective A/B test campaigns within Pardot. Learn how to interpret results, adjust your emails, and ultimately, perfect your email marketing strategy.

Introduction to A/B Testing for Pardot Emails

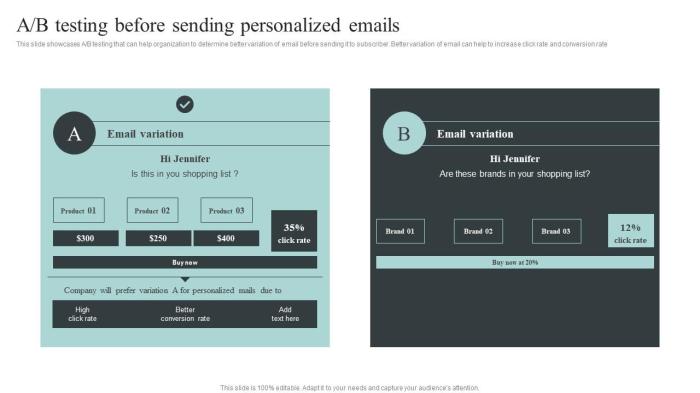

A/B testing is a crucial element of email marketing optimization. It lets marketers compare different versions of an email to determine which performs best. This data-driven approach is essential for maximizing open rates, click-through rates, and, ultimately, conversions within Pardot. A/B testing is not a one-size-fits-all solution; it is an ongoing process that requires careful planning and execution. By systematically testing different email elements, marketers can identify what resonates best with their target audience.

Through A/B testing, Pardot users can refine their messaging, subject lines, calls to action, and overall email design. This iterative process can lead to meaningful improvements in campaign performance and email marketing ROI.

Key Elements of a Successful A/B Testing Strategy

A successful A/B testing strategy hinges on careful planning and meticulous execution. It’s not enough to simply send out different versions of an email and hope for the best. A structured approach is essential for extracting meaningful insights. The key elements involve choosing the right metrics to track, identifying specific areas for improvement, and creating a well-defined testing plan.

- Defining Clear Objectives: Establish specific, measurable, achievable, relevant, and time-bound (SMART) goals for the A/B test. This clarity ensures that the testing efforts are focused on achieving tangible results. For instance, a goal could be to increase open rates by 15% within the next quarter.

- Selecting Relevant Metrics: Focus on key performance indicators (KPIs) that directly relate to the business objectives. This might include open rates, click-through rates, conversion rates, or even unsubscribe rates. Tracking these metrics accurately provides valuable insights into the effectiveness of each email variation.

- Creating Compelling Variations: Each version of the email (variant) should change a specific element that is believed to influence the target audience. Keep variations simple so the focus stays on a single element. For instance, the variant might use a different subject line while everything else matches the control.

- Controlling for External Factors: A/B testing should account for external factors that could influence the results, such as seasonality, other marketing campaigns, or promotional activities. Sending all variations during the same period helps keep these factors equal across the test groups.

A Basic Framework for Conducting A/B Tests in Pardot

Implementing A/B tests within Pardot requires a structured approach to ensure that the results are reliable and actionable. A well-defined framework provides a consistent process for running tests and interpreting the findings.

- Identify the Email Element to Test: Determine the specific aspect of the email that will be tested. This could be the subject line, the email body, the call to action button, or the images used. The goal is to focus on one element at a time.

- Create Variations: Design two or more versions of the email, focusing on a specific aspect identified in the first step. Ensure that the variations are distinct and highlight the element being tested. For example, one variation could use a more formal tone, while another uses a more conversational tone.

- Establish a Control Group: A control group is a crucial component of A/B testing. This group receives the original version of the email, providing a baseline for comparison. This allows for a fair assessment of the changes implemented in the variations.

- Select the Target Audience: Define the specific segment of your Pardot audience that will participate in the A/B test. This ensures that the results are relevant to the targeted group.

- Monitor and Analyze Results: Track the key performance indicators (KPIs) closely. Analyze the results to determine which variation performs best based on the metrics established. This analysis will reveal the elements that resonate best with the target audience.
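
To make the “one element at a time” rule concrete, here is a minimal sketch that compares a variant against the control and reports which elements changed. It assumes each email is represented as a plain Python dictionary of testable elements; the field names and values are hypothetical and are not part of Pardot’s data model.

```python
# Hypothetical representation of an email: each key is one testable element.
CONTROL = {
    "subject": "Your Q3 product update",
    "preheader": "See what's new this quarter",
    "cta_text": "Read the update",
    "body_tone": "formal",
}


def changed_elements(control: dict, variant: dict) -> list[str]:
    """Return the (sorted) names of elements whose values differ from the control."""
    keys = control.keys() | variant.keys()
    return sorted(k for k in keys if control.get(k) != variant.get(k))


def is_single_variable_test(control: dict, variant: dict) -> bool:
    """True when the variant changes exactly one element relative to the control."""
    return len(changed_elements(control, variant)) == 1


variant_ok = {**CONTROL, "subject": "3 new features you asked for"}
variant_bad = {**CONTROL, "subject": "3 new features you asked for", "cta_text": "Try it now"}

print(changed_elements(CONTROL, variant_ok))          # ['subject']
print(is_single_variable_test(CONTROL, variant_bad))  # False
```

A check like this is most useful when several people edit variants, since it catches accidental extra changes before the test goes out.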

Key Variables to Test in Pardot Emails

Optimizing Pardot email campaigns requires a systematic approach to identifying areas for improvement. A/B testing allows marketers to compare different versions of email elements to see which performs best. Understanding the key variables to test is crucial for achieving the desired results, whether it’s boosting open rates, driving click-throughs, or increasing conversions.

Effective A/B testing in Pardot goes beyond simply changing colors or fonts. It involves carefully selecting variables that directly affect user engagement and campaign goals, and this targeted approach helps refine email strategies for better results.

Crucial Email Elements for A/B Testing

Choosing the right variables to test is essential for a successful A/B testing campaign. Start by focusing on elements that directly influence user engagement. These variables can significantly impact open rates, click-through rates, and conversion rates.

- Subject Lines: Subject lines are the first impression a recipient gets. Crafting compelling and intriguing subject lines is crucial for encouraging recipients to open your email. A/B testing different subject lines helps determine what resonates best with your target audience.

- Preheaders: Preheaders, the short text that email clients display next to or below the subject line, provide a concise preview of the email’s content. Testing different preheaders allows you to fine-tune that preview and entice recipients to open the email.

- Call-to-Action (CTA) Buttons: CTAs are crucial for driving conversions. Testing different CTA button colors, text, and placements can significantly impact the click-through rate. For example, a clear, compelling CTA button that stands out can lead to higher conversions.

- Email Body Content: The email body’s content should clearly communicate your message and value proposition. Testing different wording, tone, and visuals within the body allows you to tailor the message to resonate with your target audience.

Different Types of Subject Lines

Subject lines play a vital role in email marketing. Testing different subject lines can significantly impact open rates.

- Intriguing Questions: Subject lines that pose questions can pique recipients’ curiosity, prompting them to open the email.

- Benefit-Oriented Subject Lines: Subject lines that clearly state the benefit of opening the email can encourage recipients to take action.

- Urgency-Based Subject Lines: A sense of urgency, whether due to limited-time offers or deadlines, can motivate recipients to open the email promptly.

- Personalization: Personalized subject lines, tailored to individual recipient preferences, can increase open rates and engagement.

Measuring A/B Testing Success

Success in A/B testing is not solely determined by open rates. It’s essential to measure the results against your specific campaign goals. Track metrics like click-through rates, conversion rates, and overall engagement.

| Email Element | Possible Variations |
|---|---|
| Subject Line | Short & sweet, benefit-driven, question-based, personalized, urgent |
| Preheader | Concise preview of email content, enticing description, relevant keywords |
| Call to Action (CTA) | Different colors, text, placement, size, urgency cues |
| Email Body | Different tone, language, formatting, imagery, length, value proposition emphasis |

Practical Strategies for A/B Testing Pardot Emails

A/B testing is crucial for optimizing Pardot email campaigns, maximizing engagement, and driving conversions. Understanding the best practices for setting up and managing these tests within Pardot is essential for achieving significant improvements in your email marketing strategy. This guide delves into practical strategies for effective A/B testing in Pardot.

Effective A/B testing goes beyond simply changing subject lines. It requires a deep understanding of your audience and of the specific email elements that affect their behavior.

By carefully planning and executing these tests, you can gain valuable insights and refine your email campaigns for optimal results.

Setting Up Effective A/B Test Campaigns in Pardot

To successfully implement A/B tests in Pardot, a structured approach is necessary. Start by defining clear objectives for your test, such as increasing open rates, click-through rates, or conversion rates. This focused approach will help you measure the success of your tests effectively. Select specific variables to test, keeping in mind the impact on your target audience.

Consider your target audience’s demographics and behaviors. For example, if you’re targeting a younger audience, a more casual tone in your email copy might be more effective.

Creating and Managing A/B Tests within Pardot

Pardot offers a user-friendly interface for creating and managing A/B tests, guiding you through the process of comparing different versions of your emails. Begin by creating two variations of your email: one serves as the control, and the other contains the change you’re testing. Next, define the specific criteria for your test, including the sample size and duration.

Crucially, ensure that your test accurately reflects your intended audience and avoids introducing bias. Properly segment your audience before running the test. This ensures that your testing results are relevant to the targeted segment.

Examples of Different A/B Test Scenarios for Pardot Emails

Different A/B test scenarios can yield valuable insights into your audience’s preferences. A common scenario is testing different subject lines to see which resonates best with your audience. Another example involves comparing various call-to-action (CTA) buttons to determine which encourages higher click-through rates. Testing different email layouts or the placement of key information can also provide valuable feedback.

Finally, A/B testing email copy can help optimize messaging to increase engagement and conversion rates.

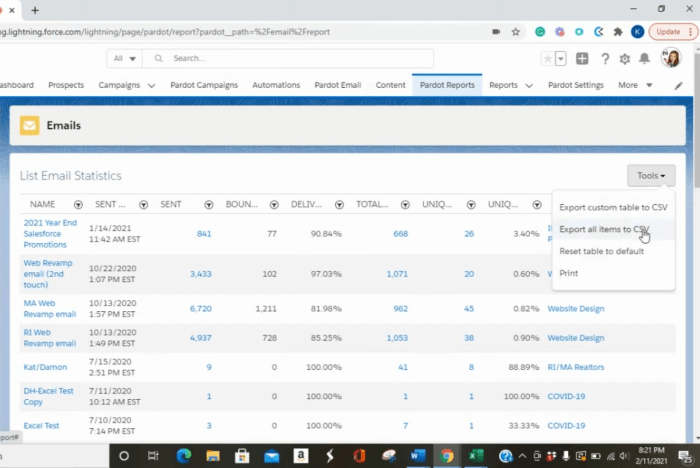

Metrics to Track During an A/B Test Campaign

Several metrics are critical to monitor during an A/B test campaign. Key metrics include open rates, click-through rates, and conversion rates; bounce rates are also worth watching, although they mainly reflect list quality and deliverability rather than the email’s content. Analyzing these metrics shows how each email version performs and helps determine which one is more effective. Depending on your reporting tools, you may also be able to track broader engagement signals, such as how long recipients spend reading the email.
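
The rates above are simple ratios of counts. The sketch below shows one common way to compute them, assuming you have exported raw counts for each variation; the class and field names are hypothetical, and platforms (including Pardot) may define denominators differently, for example using sent rather than delivered emails.

```python
from dataclasses import dataclass


def _rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


@dataclass
class VariantStats:
    """Raw counts for one email variation (field names are hypothetical)."""

    sent: int
    bounced: int
    unique_opens: int
    unique_clicks: int
    conversions: int

    @property
    def delivered(self) -> int:
        return self.sent - self.bounced

    @property
    def bounce_rate(self) -> float:
        return _rate(self.bounced, self.sent)

    @property
    def open_rate(self) -> float:
        return _rate(self.unique_opens, self.delivered)

    @property
    def click_through_rate(self) -> float:
        return _rate(self.unique_clicks, self.delivered)

    @property
    def click_to_open_rate(self) -> float:
        # Clicks among recipients who opened: isolates the body/CTA from the subject line.
        return _rate(self.unique_clicks, self.unique_opens)

    @property
    def conversion_rate(self) -> float:
        return _rate(self.conversions, self.delivered)


variant_a = VariantStats(sent=1000, bounced=20, unique_opens=196, unique_clicks=49, conversions=10)
print(f"Open rate: {variant_a.open_rate:.1%}")               # Open rate: 20.0%
print(f"CTR: {variant_a.click_through_rate:.1%}")            # CTR: 5.0%
print(f"Click-to-open: {variant_a.click_to_open_rate:.1%}")  # Click-to-open: 25.0%
```

Click-to-open rate is included because it helps separate the effect of the subject line (which drives opens) from the effect of the email body and CTA (which drive clicks among openers).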

Analyzing A/B Test Results and Making Informed Decisions

After the test concludes, carefully analyze the results to identify differences between the control and variation groups. Use a test of statistical significance to judge whether an observed difference is likely to be a genuine effect rather than random chance. This analysis allows you to make data-driven decisions and optimize your email campaigns for improved performance. If a significant difference is observed, implement the winning variation.

If no significant difference is observed, continue testing or consider other factors influencing campaign performance.
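
One common way to check significance for rate metrics such as open or click-through rate is a two-proportion z-test. The sketch below is a minimal, standard-library-only implementation; the click counts are hypothetical, and it assumes recipients were randomly assigned and that the groups are large enough for the normal approximation to hold.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for a difference between two proportions.

    Returns (z, p_value). Assumes independent, randomly assigned groups.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Under the null hypothesis both groups share one rate, so pool the counts.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: clicks out of recipients for control (A) and variant (B).
z, p = two_proportion_z_test(48, 1200, 75, 1200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these hypothetical numbers (48 vs. 75 clicks out of 1,200 recipients each), the p-value falls below 0.05; with 48 vs. 55 clicks, it does not, so that smaller difference could easily be noise.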

Table Demonstrating Different A/B Testing Scenarios and Corresponding Pardot Email Elements

| A/B Testing Scenario | Pardot Email Element |
|---|---|
| Subject Line Variations | Subject line |
| Call-to-Action Button Variations | Call-to-action button text and design |
| Email Layout Variations | Email structure, content placement, and visual elements |
| Email Copy Variations | Body text, tone, and persuasive language |
| Image Variations | Image choice and placement |

Optimizing Email Content Based on A/B Test Results

A/B testing provides valuable data about what resonates with your audience. Analyzing the results allows you to fine-tune your Pardot email campaigns for better engagement and conversion rates. This process involves interpreting the data, adjusting email content, and creating more impactful campaigns.

By carefully examining the outcomes of A/B tests, you can identify which email variations perform better and make tailored adjustments to your email content, leading to higher engagement and improved results for your marketing efforts.

Interpreting A/B Test Results

Understanding the metrics behind your A/B tests is key to recognizing patterns and drawing conclusions. Focus on key performance indicators (KPIs) like open rates, click-through rates (CTRs), and conversion rates. Analyze the differences between variations, considering factors like subject lines, email body content, and calls to action. Identifying statistically significant differences between variations is crucial. Pardot’s reporting features show the results for each variation, though you may need to check statistical significance separately.

This allows you to see what aspects of your emails are resonating with your audience and which need adjustments.

Adjusting Email Content Based on Results

Analyzing A/B test results allows you to make strategic adjustments to your email content. If one variation performs significantly better than another, identify the key differences and replicate the successful elements. For instance, if a subject line with a specific keyword generates a higher open rate, consider using similar keywords in future campaigns. Focus on the elements that drove the higher performance, such as compelling subject lines, clear calls to action, and visually appealing designs.

Tailor your content to resonate with the specific preferences of your audience segments.

Creating Engaging and Effective Emails

Engaging emails require a combination of compelling copy and attractive design. Based on A/B test results, you can refine the language used in your email content. For example, if a more conversational tone leads to higher engagement, adopt a similar style in future campaigns. Likewise, A/B testing can reveal whether shorter or longer email copy performs better.

The goal is to create content that is not only informative but also engaging and resonates with the reader.

Refining Email Copy and Design

The copy and design of your emails are crucial factors in influencing reader engagement. Refine email copy by focusing on clarity, conciseness, and persuasiveness. A/B testing can reveal which versions of the email copy are more effective. Similarly, analyze the design elements of your emails. For example, if a particular color scheme or image generates a higher click-through rate, incorporate these elements into future campaigns.

Keep an eye on your audience’s preferences to create emails that effectively convey your message.

Applying A/B Testing Insights to Campaigns

Successful email campaigns are built on data-driven decisions. Apply A/B testing insights to future campaigns by incorporating the winning elements from previous tests. For example, if a particular subject line format generates high open rates, use that format consistently. Similarly, if a specific call-to-action style leads to higher conversions, replicate it in future campaigns. This ensures that your email marketing strategy is optimized and results in the best possible outcomes.

Example: Comparing Email Variations

| Variation | Subject Line | Body Content | Open Rate | Click-Through Rate |
|---|---|---|---|---|
| A | “Exclusive Offer – Limited Time” | Detailed product information, limited-time discount | 15% | 5% |
| B | “Get [Product Name] Now” | Concise product description, direct call to action | 20% | 7% |

Assuming each group was large enough for these differences to be statistically significant, Variation B’s higher open and click-through rates indicate that a concise and direct approach resonated better with the audience. These insights should be incorporated into future email campaigns.
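
Because the table does not include group sizes, the sketch below uses hypothetical sizes to show why they matter. It computes an approximate (Wald) 95% confidence interval for the difference in open rates between Variation B and Variation A; if the interval excludes zero, the difference is significant at roughly the 5% level.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(rate_a: float, n_a: int, rate_b: float, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for (rate_b - rate_a) with independent groups."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


# Open rates from the table (A = 15%, B = 20%); group sizes are hypothetical.
for n in (100, 1000):
    low, high = diff_confidence_interval(0.15, n, 0.20, n)
    verdict = "excludes 0" if low > 0 or high < 0 else "includes 0"
    print(f"n={n} per group: [{low:+.3f}, {high:+.3f}] ({verdict})")
```

With 100 recipients per variation, the interval for the 5-point lift runs from about -5.5 to +15.5 percentage points and includes zero; with 1,000 per variation, it runs from about +1.7 to +8.3 points and excludes zero.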

Common Mistakes to Avoid in Pardot A/B Testing

A/B testing Pardot emails is a powerful tool for optimizing campaign performance. However, common mistakes can significantly hinder your results. Understanding these pitfalls is crucial for maximizing the effectiveness of your efforts and achieving the desired outcomes. By avoiding these errors, you can make your A/B tests more reliable, gain meaningful insights, and ultimately drive better results for your marketing campaigns.

Incorrectly defining goals and metrics can lead to misleading conclusions. Focusing on the wrong metrics can cause you to optimize for vanity metrics rather than actual campaign success, so a clear understanding of the desired outcomes and appropriate KPIs (Key Performance Indicators) is essential.

Common Pitfalls in A/B Testing

Defining a clear objective is paramount for a successful A/B test. Without a well-defined goal, you risk testing irrelevant variables and failing to gather actionable insights. For instance, if your goal is to increase open rates, metrics like click-through rates (CTR) might not be suitable. Focusing solely on clicks, without considering the ultimate goal of conversions, could result in an email that generates high clicks but low conversions.

Likewise, focusing on vanity metrics like “likes” on social media can distract from more critical business goals. Defining the specific desired outcome—whether it’s more leads, higher sales, or increased brand awareness—helps in selecting appropriate metrics.

Testing Too Many Variables at Once

Testing multiple variables simultaneously can complicate the analysis of results, making it difficult to isolate the impact of each change on the outcome. A well-structured A/B test should change only one variable at a time so that the effect of each modification is clear. For example, if you are testing subject lines, don’t also modify the email content, call-to-action buttons, or images.

Focus on a single element, like the subject line, to determine its impact on open rates.

Insufficient Sample Size

A small sample size can lead to unreliable results. Statistical significance is crucial for determining whether observed differences between test groups are truly meaningful. A larger sample makes it more likely that the results reflect your overall audience and allows for more robust conclusions. Avoid relying on limited data, as it may not provide a true representation of the campaign’s effectiveness.

Always consider the statistical power of your test to determine if the sample size is sufficient.
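
A power calculation estimates how many recipients each variation needs before you send. The sketch below uses the standard normal-approximation formula for comparing two proportions; the 15% baseline and 20% target open rates are hypothetical, and the 5% significance level and 80% power are conventional defaults, not Pardot settings.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_baseline: float, p_expected: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate recipients needed per variation for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_baseline + p_expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected))
    ) ** 2
    return ceil(numerator / (p_expected - p_baseline) ** 2)


print(sample_size_per_group(0.15, 0.20))
```

For these inputs the function returns roughly 900 recipients per variation; detecting a smaller lift, such as 15% to 16%, requires far more. Running the calculation before the test tells you whether your list is big enough at all.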

Ignoring A/B Test Bias

Unintentional bias in the test design can skew the results. For instance, sending one version of the email to a specific segment might introduce bias. Ensuring that both groups are comparable in terms of demographics, engagement history, and other relevant factors is critical for unbiased results. Randomly assigning recipients to test groups is essential to minimize bias and ensure that the results accurately reflect the impact of the tested element.
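
If your test setup does not handle the split for you (for example, when you build test lists manually), random assignment can be as simple as shuffling the recipient list and cutting it in half. This is a minimal sketch; the recipient IDs are placeholders, and the fixed seed only makes the split reproducible.

```python
import random


def random_split(recipient_ids, seed=None):
    """Shuffle recipients and split them into two groups of (nearly) equal size."""
    ids = list(recipient_ids)
    rng = random.Random(seed)  # dedicated generator so the split can be reproduced
    rng.shuffle(ids)
    midpoint = len(ids) // 2
    return ids[:midpoint], ids[midpoint:]


group_a, group_b = random_split(range(1, 1001), seed=42)
print(len(group_a), len(group_b))  # 500 500
```

Shuffling before splitting means no attribute of the list (sign-up date, alphabetical order, segment) can line up with one group, which is the point of randomization.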

When A/B Testing Might Not Be the Best Solution

There are scenarios where A/B testing may not be the optimal approach. If the email’s design is fundamentally flawed, or if the email is not tailored to the target audience, A/B testing may not solve the problem. Focus on the fundamental elements of email marketing, such as clarity of message, relevance to the recipient, and proper targeting, before employing A/B testing.

A/B testing should be used to optimize existing elements, not to rectify critical design flaws.

Table of Common Mistakes and Solutions

| Common Mistake | Solution |
|---|---|
| Testing too many variables at once | Test one variable at a time to isolate its impact. |
| Insufficient sample size | Calculate the required sample size before the test so it has enough statistical power to detect the difference you care about. |
| Ignoring A/B test bias | Randomly assign recipients to test groups to minimize bias. Ensure the groups are similar in demographics and engagement. |
| Incorrectly defining goals and metrics | Define specific, measurable, achievable, relevant, and time-bound (SMART) goals and use appropriate KPIs. |

Advanced A/B Testing Techniques in Pardot

Taking your Pardot email campaigns to the next level requires more than just basic A/B testing. Moving beyond simple subject line or call-to-action variations, advanced techniques like multi-variable testing can unlock deeper insights into audience preferences and campaign effectiveness. This allows for more nuanced optimizations that can lead to higher conversion rates and a better return on investment.

Advanced A/B testing in Pardot goes beyond simple comparisons, examining how multiple variables interact to affect email performance. This approach helps marketers understand how different combinations of elements impact user engagement and conversion rates.

Multi-Variable Testing in Email Campaigns

Multi-variable (multivariate) testing, an extension of A/B testing, lets marketers evaluate variations across several email elements at once. Instead of focusing on single changes, this method examines the combined effect of modifying subject lines, email body content, calls to action, and even send times. This matters because it reveals the relationships between these factors.

For example, a catchy subject line might not perform well if paired with poorly written content, and a compelling call to action might be ineffective if it doesn’t align with the recipient’s expectations. Multi-variable testing helps marketers discover these interactions and optimize accordingly.

Examples of Advanced Techniques in Pardot

Several advanced techniques enhance email campaigns through multi-variable testing within Pardot. These include evaluating variations in email subject lines, preheader text, email content structure, calls-to-action, and even send time. Consider testing different combinations of these elements to identify the optimal combination for your specific audience and goals.

For example, you might test variations in the subject line (“Summer Sale” vs. “Limited-Time Offer”) combined with different calls-to-action (“Shop Now” vs. “Learn More”). This allows you to see which combination yields the highest click-through rates and conversions.
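
The subject-line and call-to-action example above forms a 2 × 2 full-factorial design: every subject line is paired with every CTA, giving four cells. The sketch below enumerates the cells with Python’s `itertools.product`; the recipients-per-cell figure is hypothetical and only shows how quickly the required list size grows.

```python
from itertools import product

subject_lines = ["Summer Sale", "Limited-Time Offer"]
ctas = ["Shop Now", "Learn More"]

# Full-factorial design: every subject line is paired with every CTA.
cells = [
    {"cell": i, "subject": subject, "cta": cta}
    for i, (subject, cta) in enumerate(product(subject_lines, ctas), start=1)
]

for cell in cells:
    print(cell)

recipients_per_cell = 900  # hypothetical, e.g. taken from a sample-size calculation
print("Total recipients needed:", recipients_per_cell * len(cells))
```

Adding a third element with two options would double the number of cells to eight, and the list needed to fill them, which is why multivariate tests suit large audiences.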

Optimizing Email Performance with Multivariate Testing

Multivariate testing in Pardot is instrumental in refining email performance. By systematically testing different variations, marketers can pinpoint the most effective elements and tailor campaigns for maximum impact. For example, testing different email templates, subject lines, and calls-to-action simultaneously helps uncover the optimal combination. Keep in mind that every combination needs its own adequately sized group of recipients, so multivariate tests require considerably larger lists than a simple A/B test.

A/B Testing for Specific Email Marketing Goals

A/B testing, particularly multi-variable testing, can be tailored to specific marketing goals. For instance, if the goal is to increase open rates, testing different subject lines and preheader text variations can be effective. If the aim is to boost click-through rates, then variations in call-to-action buttons, images, and even the design of the email body can be explored. A/B testing provides a structured way to assess what works best to achieve each goal.

Multivariate Testing Scenarios and Impact on Email Performance

| Scenario | Variations Tested | Potential Impact on Email Performance |
|---|---|---|
| Subject Line & Preheader Text | Different phrasing, urgency, and tone in subject lines and preheader text | Increased open rates, higher click-through rates |
| Email Body Content & Call-to-Action | Different content formats, lengths, and call-to-action placements | Improved conversion rates, increased sales |
| Email Design & Visuals | Different color palettes, image placements, and layout structures | Enhanced engagement, improved brand perception |
| Send Time & Frequency | Different times of day and days of the week for email delivery | Higher open and click-through rates, better audience engagement |

Outcome Summary

In conclusion, mastering A/B testing in Pardot is key to achieving optimal email marketing results. By understanding the nuances of different testing approaches and avoiding common pitfalls, you can significantly improve your Pardot email campaigns. This guide has provided a comprehensive overview, equipping you with the knowledge and strategies to create highly effective and engaging emails. So, go forth and refine your Pardot emails with the power of A/B testing!