7 Technical Issues That Kill SEO and How to Spot Them

This guide to the technical issues that kill SEO, and how to spot them, is a deep dive into website health. Poor technical SEO can significantly hinder your site’s visibility and user experience, impacting your bottom line. Understanding these technical aspects is key to achieving high search rankings and driving organic traffic.

This post breaks down seven common technical problems that often go unnoticed, from slow loading speeds to mobile-friendliness issues, and provides actionable solutions for each. We’ll examine how each problem impacts your site’s performance, along with practical examples and actionable steps you can take to fix them. Get ready to optimize your site for search engines and boost your online presence!

Introduction to Technical SEO Issues

Technical SEO is the backbone of a website’s visibility in search engine results pages (SERPs). It focuses on optimizing the technical aspects of a website so that search engines can easily crawl, index, and understand its content. A strong technical foundation is crucial for organic traffic, as search engines favor websites that are easy to navigate and understand. Without proper technical optimization, even the most compelling content might not rank well or be discovered by potential visitors.

Technical SEO is more than just keywords and meta descriptions. It encompasses the underlying infrastructure of a website, including site architecture, crawlability, and website speed. These elements significantly affect how search engines perceive and rank a site. Ignoring them can lead to poor rankings, reduced organic traffic, and ultimately a diminished online presence. Poor technical SEO can also hurt user experience, resulting in higher bounce rates, less time on site, and a less engaging user journey.


This impacts not just search engine rankings but also the overall success of the website.

Impact of Technical SEO on Search Rankings

Technical aspects of a website play a vital role in search engine rankings. Search engines like Google use sophisticated algorithms to assess various factors, including website structure, page speed, and mobile-friendliness. A well-structured site, optimized for speed and mobile devices, is more likely to rank higher than a poorly structured, slow, or non-mobile-friendly one.

| Technical Aspect | Explanation of the Aspect | How It Affects Search Rankings | Example of a Fix |
|---|---|---|---|
| Website Speed | Page load time directly influences user experience and search engine rankings. Slow-loading pages lead to high bounce rates and poor user engagement. | Slower loading times can result in lower rankings. Search engines favor websites that load quickly. | Optimize images, use a content delivery network (CDN), and leverage browser caching. |
| Mobile Friendliness | Mobile-friendliness is critical as a growing number of users access websites from mobile devices. Responsive design ensures optimal viewing and navigation on various screen sizes. | Websites not optimized for mobile devices may rank lower, as search engines favor mobile-friendly sites and Google indexes the mobile version of a page first. | Implement a responsive web design, keep mobile and desktop content consistent, and test responsiveness across various devices. |
| Crawlability | Search engine crawlers need to access and understand the website’s content. Technical issues like broken links, server errors, or poor site architecture can prevent crawlers from fully indexing the site. | Poor crawlability hinders search engine indexing. Unindexed pages cannot rank in search results. | Fix broken links, optimize robots.txt, and ensure proper site structure. |
| Site Structure | A well-organized site structure makes navigation easier for users and crawlers. Clear hierarchies, logical navigation, and intuitive page linking improve user experience. | A complex or illogical site structure makes it harder for search engines to understand the site’s content, potentially leading to lower rankings. | Implement a clear sitemap, use descriptive URLs, and ensure logical internal linking. |
| Schema Markup | Schema markup provides structured data that helps search engines better understand the content on a webpage. This can improve visibility in search results and increase click-through rates. | Pages with valid schema markup can be eligible for rich snippets, which often increase click-through rates and bring in more traffic. | Implement schema markup for relevant content types (e.g., products, articles) on your website. |

Consequences of Overlooking Technical Issues

Ignoring technical issues can have serious consequences for a website’s visibility and success. Reduced organic traffic, lower search rankings, and a decline in overall online presence are just a few potential outcomes. Moreover, poor technical SEO can lead to a frustrating user experience, driving visitors away and potentially damaging brand reputation. Addressing these issues proactively is essential for maintaining a strong online presence and achieving the search rankings you want.

Slow Website Loading Speed

Slow loading speed is a significant hurdle for any online business. A sluggish website hurts user experience, leading to higher bounce rates and, ultimately, lower search rankings. Users are increasingly impatient, and a slow site can quickly drive them away, costing potential customers and revenue. Search engines also factor page speed into their ranking algorithms because they prioritize user experience.

A fast-loading website ensures a seamless browsing experience, encourages engagement, and supports stronger rankings. A slow one does the opposite, which translates directly into lost conversions and revenue.

Identifying Slow Loading Speed Issues

Various tools and methods can pinpoint loading speed bottlenecks on a website. Website performance monitoring tools, like Google PageSpeed Insights, provide detailed reports on loading times and identify specific areas needing optimization. These tools often offer recommendations for improvements, making them valuable resources for identifying and resolving issues. Using these tools, you can pinpoint slow-loading elements, including images, scripts, and server response times.

Common Causes of Slow Loading Speed

Several factors contribute to slow website loading speeds. Image optimization is often a major culprit. Large, unoptimized images can significantly increase loading times. Inefficient code, including excessive CSS and JavaScript, can also hinder page load speeds. Furthermore, slow server response times can also be a major bottleneck.

Image Optimization Techniques

Optimizing images is crucial for improving website loading speed. Appropriate image formats, reduced file sizes, and strategic use of image dimensions can significantly reduce loading times. Choosing the right image format is paramount. For example, using WebP format, which is supported by modern browsers, can reduce file sizes without sacrificing quality.


| Technique | Explanation | Impact on Loading Speed | Example Implementation |
|---|---|---|---|
| Image Compression | Reducing the file size of images without significant loss of quality. | Significant reduction in loading time. | Using image optimization tools like TinyPNG or ImageOptim. |
| Choosing the Right Format | Selecting appropriate image formats like WebP or JPEG. | Potentially large reduction in file size compared to other formats. | Converting images to WebP where possible. |
| Image Dimensions | Sizing images to match the intended display size. | Reduces file size by only loading the necessary pixels. | Using responsive image techniques, like `srcset` or `picture` elements. |
| Lazy Loading | Loading images only when they are about to enter the viewport. | Significant improvement in initial page load time. | Using the native `loading="lazy"` attribute or a JavaScript fallback. |

Image optimization tools, like TinyPNG, can compress images with little or no visible loss of quality, saving considerable bandwidth and reducing loading times.
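As a rough illustration of how the lazy-loading and image-dimension checks from the table could be automated, here is a minimal Python sketch that scans a page’s HTML for `<img>` tags lacking `loading="lazy"` or explicit `width`/`height` attributes. The function name `audit_images` and the sample markup are assumptions made for this example. Images that appear at the top of the page are usually better left eager, so treat the output as a list of candidates to review, not a list of errors.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collect <img> tags that lack lazy loading or explicit dimensions."""

    def __init__(self):
        super().__init__()
        self.issues = []  # list of (src, problem) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src", "(no src)")
        # Native lazy loading is requested with loading="lazy".
        if attributes.get("loading") != "lazy":
            self.issues.append((src, 'missing loading="lazy"'))
        # Explicit dimensions let the browser reserve space before the image loads.
        if "width" not in attributes or "height" not in attributes:
            self.issues.append((src, "missing width/height"))


def audit_images(html):
    """Return a list of (src, problem) tuples for the given HTML string."""
    parser = ImageAudit()
    parser.feed(html)
    return parser.issues


if __name__ == "__main__":
    # Hypothetical sample markup for demonstration only.
    sample = (
        '<img src="hero.webp" loading="lazy" width="800" height="400">'
        '<img src="team.jpg">'
    )
    for src, problem in audit_images(sample):
        print(f"{src}: {problem}")
```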


Mobile Friendliness Issues

Mobile-friendliness is no longer a nice-to-have but a fundamental requirement for any website aiming for success in today’s digital landscape. A website that doesn’t adapt seamlessly to different screen sizes and devices not only frustrates users but also hurts its search engine rankings. Google uses mobile-first indexing, meaning it primarily uses the mobile version of a website for ranking and indexing purposes. This underscores how important a mobile-friendly website is for both SEO and user experience.

Ensuring your website renders properly on a range of mobile devices is crucial for user engagement and search engine optimization. Poor mobile experiences can lead to high bounce rates, low time on site, and ultimately lower search rankings. A well-optimized mobile site contributes to a positive user experience, which is a significant factor in search engine algorithms.

Mobile-Friendly Design Considerations

Mobile-friendliness encompasses a range of design elements crucial for a positive user experience. Responsive design is paramount, allowing the website to adjust its layout and content based on the screen size of the device. This adaptability is essential for displaying information clearly and efficiently on different screen sizes. Poorly implemented responsive design can lead to overlapping elements, unreadable text, and difficult navigation.

Common Mobile-Friendliness Issues

Several factors can hinder a website’s mobile-friendliness. Responsive design problems often stem from improper implementation of CSS frameworks, resulting in content that doesn’t adapt correctly. Incorrect viewport settings can lead to scaling issues, making content either too small or too large for comfortable viewing. Inadequate media queries, which are crucial for tailoring the display to different screen sizes and orientations, can result in a poor mobile experience.

Failing to optimize images for mobile devices can also significantly impact loading times.

Ensuring Mobile-Friendliness

Implementing a robust responsive design framework is essential. This involves using flexible layouts, fluid grids, and media queries to adjust the website’s structure based on the screen size. Carefully crafted viewport meta tags are essential to control the scaling of the website within the browser. Using CSS media queries allows for targeting specific screen sizes and orientations, enabling customized display adjustments for optimal viewing.

Images should be optimized for mobile devices, with appropriate dimensions and compression to minimize loading times.
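To make the viewport point concrete, the following Python sketch checks whether a page declares a viewport meta tag with `width=device-width`. It is only a simple illustration: the function name `check_viewport` and the three result strings are assumptions for this example, and a passing check does not guarantee that the layout itself is responsive.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content attribute of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.content = attributes.get("content") or ""


def check_viewport(html):
    """Return 'ok', 'missing', or a short description of the problem."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return "missing"
    # Parse "width=device-width, initial-scale=1" into a dict of settings.
    settings = {}
    for part in finder.content.split(","):
        if "=" in part:
            key, value = part.split("=", 1)
            settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return "width is not device-width"
    return "ok"


if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(check_viewport(page))
```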

Testing Mobile-Friendliness

Several tools can help you evaluate the mobile-friendliness of your website. Google’s standalone Mobile-Friendly Test was retired in late 2023, but Lighthouse (built into Chrome DevTools) and PageSpeed Insights cover similar checks. Browser developer tools and specialized mobile testing platforms offer detailed insights into how the site behaves on various mobile devices. Using these tools lets you identify issues and verify improvements.

Mobile-Friendly Design Elements and Their Impact

| Design Element | Explanation | Impact on Rankings | Example Implementation |
|---|---|---|---|
| Responsive Design | Adapting website layout and content to various screen sizes. | Supports rankings, since Google indexes the mobile version of a page first. | Using CSS media queries to adjust elements based on viewport width. |
| Viewport Meta Tag | Controls how the website scales within the browser window. | Ensures proper scaling and prevents zooming issues. | `<meta name="viewport" content="width=device-width, initial-scale=1">` |
| Optimized Images | Images compressed and optimized for mobile devices to reduce loading time. | Faster loading times improve user experience and potentially lead to higher rankings. | Using appropriate image formats (WebP, AVIF), compressing images, and using responsive image techniques. |
| Fast Loading Times | Critical for providing a smooth user experience. | Faster loading speeds contribute to better user engagement and higher rankings. | Optimize images, minimize HTTP requests, leverage browser caching, and use a Content Delivery Network (CDN). |

Crawlability and Indexability Problems

Search engine optimization (SEO) hinges on search engine crawlers effectively accessing and understanding your website’s content. Crawlability refers to the ability of these bots to navigate your site, while indexability concerns their capacity to add the content to their database. Problems in either area can significantly hinder your site’s visibility in search results.

Understanding both is crucial for effective SEO. A website that search engine bots cannot crawl can’t be indexed, meaning its pages won’t appear in search results. Conversely, a website that’s crawlable but not indexable might have pages that aren’t included in the search engine’s index, even though bots can access them.

Crawlability Explained

Crawlability is the ability of search engine bots to traverse and access all the pages on your website. Think of these bots as digital spiders, meticulously following links to discover new content. A well-structured site with clear internal linking and proper robots.txt directives makes the task easy for these bots. Conversely, complex site structures, broken links, and errors in the robots.txt file can hinder crawlability, preventing search engines from fully understanding your website’s content.

Indexability Explained

Indexability refers to a search engine’s ability to add a page’s content to its index. While crawlability ensures bots can reach the page, indexability ensures that the search engine recognizes and stores the content for later retrieval in search results. Duplicate content, thin content, and pages with low-quality or irrelevant content are less likely to be indexed. A website with well-optimized pages and a clear site structure increases the chances of its content being indexed and found by users.

Identifying Crawlability and Indexability Issues

Identifying crawlability and indexability issues usually starts with tools designed for the purpose. Google Search Console, for example, provides detailed reports on crawl errors, helping you pinpoint problems. Checking for broken links, verifying robots.txt directives, and confirming that your sitemap is implemented correctly are also essential steps. Manual inspection of site structure and content quality plays a significant role as well.

Common Causes of Crawlability and Indexability Issues

Several factors can hinder crawlability and indexability. One major cause is errors in the robots.txt file. Incorrect directives can prevent search engine bots from accessing specific parts of your website. Broken internal links, leading to dead ends or 404 errors, also impede crawlability. Furthermore, duplicate content, which often results from poor content management or similar content across various pages, can lead to search engines not properly indexing the valuable content.

Fixing Robots.txt Issues

The robots.txt file acts as a guide for search engine crawlers, specifying which parts of your site they should or shouldn’t crawl. Ensuring the file is correctly structured and doesn’t block essential pages is vital. An error in this file can prevent search engines from indexing critical content, affecting your website’s overall visibility. A well-structured robots.txt file, with clear instructions for crawlers, is essential for efficient crawling.
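One way to catch an overly broad robots.txt rule is to test a list of important URLs against the file before deploying it. The sketch below uses Python’s standard `urllib.robotparser` module; the rules, the URLs, and the `blocked_urls` helper name are assumptions invented for the example. Note that the standard-library parser does not replicate every detail of Google’s matching behavior (wildcard patterns, for instance), so treat the result as a first-pass check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the second rule blocks the whole blog by mistake.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs from `urls` that the given robots.txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = [
        "https://example.com/",
        "https://example.com/blog/technical-seo",
        "https://example.com/products/widget",
    ]
    for url in blocked_urls(ROBOTS_TXT, important):
        print("Blocked:", url)
```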

Proper Sitemap Implementation

Sitemaps are essential for guiding search engine crawlers through your website’s structure. Implementing a sitemap helps search engines understand the hierarchy and organization of your content. This clear structure allows search engines to quickly and easily discover new and updated pages. A proper sitemap is a crucial tool for improving crawlability and indexability.
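For sites without a CMS plugin that generates a sitemap, a small script can produce one. The following sketch builds a minimal XML sitemap in the sitemaps.org format using Python’s standard library. The `build_sitemap` name, the example URLs, and the dates are illustrative assumptions; a real site would pull its page list from the CMS or a crawl.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string, or None to omit the element.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for demonstration only.
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/about", None),
    ]))
```

The resulting file is typically saved as `sitemap.xml` at the site root and submitted through the search engine’s webmaster tools.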

Table: Crawl Errors and Solutions

| Crawl Error | Explanation | Impact on Crawlability | Solution |
|---|---|---|---|
| 404 Errors | Broken links leading to pages that no longer exist. | Prevents crawlers from reaching intended content. | Fix broken links, redirect to appropriate pages, or remove links to non-existent pages. |
| Robots.txt Issues | Incorrect directives in the robots.txt file preventing access to specific pages. | Blocks crawlers from accessing critical content. | Review and correct the robots.txt file, ensuring necessary pages are accessible. |
| Duplicate Content | Similar content appearing on multiple pages. | Search engines may index the wrong version or split ranking signals between duplicates. | Ensure unique content on each page and use canonical tags to specify the preferred version. |
| Slow Page Loading Speed | Pages take an excessively long time to load. | Crawlers may not finish crawling the site within their crawl budget. | Optimize images, minimize HTTP requests, leverage browser caching, and improve server response time. |

Broken Links and 404 Errors

Broken links and 404 errors are more than minor inconveniences for users; they affect your website’s search rankings and overall user experience. These errors signal to search engines that your website might not be well maintained, which can lead to lower rankings in search results. A user who hits a broken link is likely to lose interest in your site, potentially hurting conversions and future engagement.

A well-functioning website seamlessly guides users through the content they seek. Broken links disrupt this flow, leaving users stranded and eroding trust in your brand. Addressing broken links proactively is crucial for maintaining a positive user experience and improving your site’s overall health.

Identifying Broken Links

Regularly checking for broken links is essential for maintaining a healthy website. Failure to address these issues can lead to a negative user experience and potentially harm your search engine rankings. Automated tools and manual audits are effective methods for identifying these problems.

- Automated tools, such as Screaming Frog, offer comprehensive crawls of your website, automatically detecting broken links. These tools allow you to quickly pinpoint and analyze various broken link types, providing insights into the extent of the problem.

- Manual audits, involving manually navigating through your website and clicking on internal and external links, can identify broken links not caught by automated tools. This method allows you to explore the site from a user’s perspective, revealing areas where navigation might be problematic.
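For a quick spot check of a single page, short of a full crawl with a dedicated tool, a script along these lines can extract the links and report any that return an error status. This is a minimal sketch using only the Python standard library. The helper names are assumptions, and the status lookup is passed in as a parameter so the logic can be tested without network access. Some servers reject `HEAD` requests, so a production checker may need to fall back to `GET`.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-HTTP schemes.
        if href and not href.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def http_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the request fails outright."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 or 500
    except OSError:
        return None  # DNS failure, timeout, refused connection, etc.


def find_broken_links(html, base_url, get_status=http_status):
    """Return (url, status) pairs for links that fail or return a 4xx/5xx status."""
    extractor = LinkExtractor(base_url)
    extractor.feed(html)
    broken = []
    for link in extractor.links:
        status = get_status(link)
        if status is None or status >= 400:
            broken.append((link, status))
    return broken
```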

Fixing Broken Links

Fixing broken links involves various strategies, depending on the type of link and its intended destination. Common solutions include redirecting the broken link to a relevant, working page or removing the link entirely if the content is no longer relevant.

- Redirecting: Redirecting a broken link to a relevant, active page is a common way to maintain a smooth user experience. This is particularly useful when updating content or reorganizing the website’s structure. 301 (permanent) redirects are crucial for passing SEO value from the old URL to the new one. For example, if a product page has been moved, a 301 redirect ensures that search engines and users are sent to the correct, updated page.

- Removing the link: Removing a link is an appropriate strategy when the content is no longer relevant or available. This approach avoids confusing users and keeps the site structure clean.

- Updating the link: When possible, updating a broken link to a functioning one is the most effective method for maintaining the user experience. This ensures the link’s purpose remains intact and leads users to the intended resource.
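When redirects accumulate over several site reorganizations, they can form chains (A to B to C) or even loops, which waste crawl effort and slow users down. As an illustration, the sketch below takes a redirect map, represented here as a plain Python dictionary (an assumption for the example, since real rules live in server or CMS configuration), and rewrites each rule to point straight at its final destination.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow url through the redirect map and return (final_url, hops).

    Raises ValueError if the chain loops or exceeds max_hops.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or chain too long at {url}")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects):
    """Rewrite every rule so it points directly at its final destination."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


if __name__ == "__main__":
    # Hypothetical rules: /old-product was moved twice.
    rules = {"/old-product": "/products/item", "/products/item": "/shop/item"}
    print(flatten_redirects(rules))
```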

Preventing Future Broken Links

Proactive measures can help prevent broken links from appearing on your site. Implementing robust content management systems (CMS) and processes will help minimize this issue.

- Regular Content Audits: Regular content audits help you identify outdated or deleted content, preventing broken links to those resources. This proactive approach helps avoid the issue of broken links and ensures your site remains user-friendly.

- Implementing a robust CMS: A robust CMS facilitates easier management of content and links. Features like version control and automated backups can help minimize errors and ensure that content changes don’t lead to broken links.

- Link Review Process: Establishing a link review process, whether automated or manual, ensures that links are checked for accuracy and validity before they are published or updated. This process reduces the risk of introducing broken links.

Broken Link Types and Solutions

| Broken Link Type | Explanation | Impact on User Experience | Solution |
|---|---|---|---|
| 404 Error (Not Found) | The requested page cannot be found on the server. | Users are presented with an error message, disrupting the browsing experience. | Redirect to a relevant page or remove the link. |
| Broken External Link | The linked-to external website is unavailable. | Users are directed to an error page or a blank page. | Update the link to a working external page or remove the link. |
| Removed Internal Page | A page on your website has been deleted or moved. | Users are presented with an error page or a blank page. | Redirect to a relevant page or remove the link. |
| Typographical Error in Link | The link contains a typo, leading to a different or non-existent URL. | Users are directed to an incorrect page or an error page. | Correct the typo in the link. |

Structured Data Markup Issues

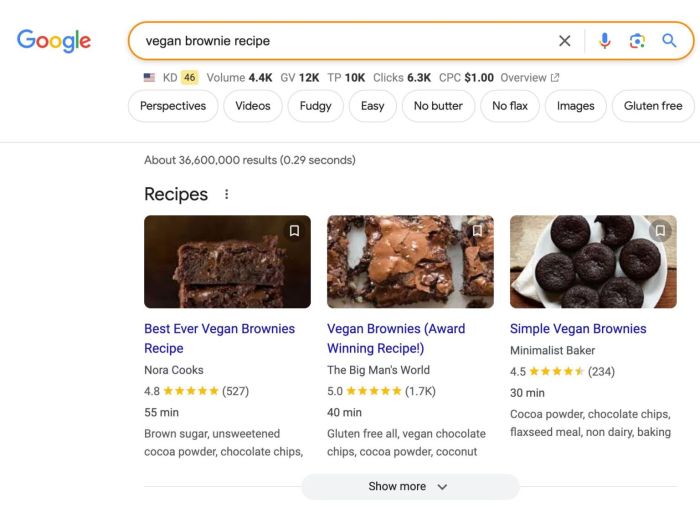

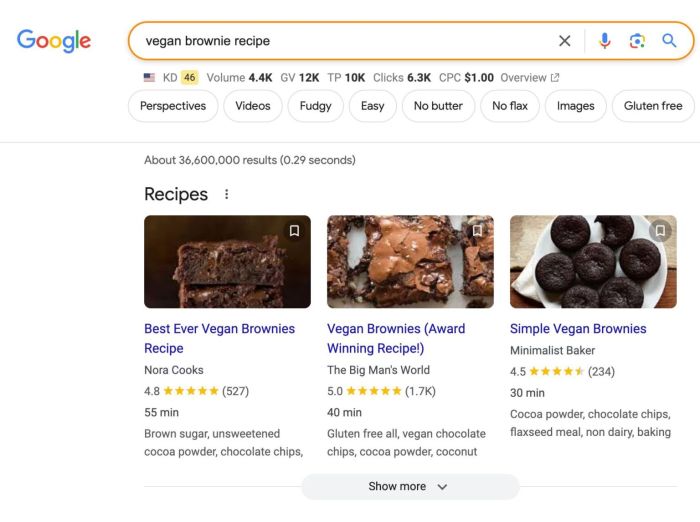

Structured data markup is a crucial element of technical SEO. It provides a standardized way for webmasters to communicate information about their content to search engines, enabling them to better understand the context and meaning of web pages. This enhanced understanding often leads to improved visibility and the display of rich results in search engine results pages (SERPs).

Properly implemented structured data markup can significantly boost a website’s visibility and attract more qualified traffic.

Structured Data Markup and Search Engine Understanding

Structured data markup is a standardized format for providing context and meaning to the content on a webpage. Search engines utilize this data to better understand the information presented, enabling them to deliver more relevant search results to users. This includes details like product information, events, recipes, and reviews, all of which can be explicitly described to search engines through structured data markup.

For example, marking up a product page with structured data about price, availability, and features allows search engines to present this information in a rich snippet, making the listing more appealing and informative to users.

How Structured Data Improves Search Rankings and Rich Results

Structured data markup directly contributes to improved search rankings by enabling search engines to comprehend the context of the content. The more accurate and detailed the structured data, the more likely search engines are to recognize the content’s value and relevance to specific search queries. This improved understanding translates into better search rankings, placing the website higher in search results.

Furthermore, structured data markup can trigger the display of rich results in SERPs. Rich results are enhanced search results that showcase additional information, such as images, stars, and prices, directly within the search results page. This increased visibility often leads to higher click-through rates and increased traffic to the website.

Common Structured Data Markup Errors and How to Fix Them

Common structured data markup errors often stem from incorrect implementation, incorrect schema types, or improper use of attributes. Incorrect schema types lead to search engines failing to properly understand the data. For instance, using the incorrect schema for a product might result in search engines not displaying the product information in rich results. Issues with data formatting or missing fields also contribute to problems.

These errors can be rectified by double-checking the implementation, ensuring that the correct schema type is utilized, and carefully filling out all required fields with accurate information. Using schema validation tools can help identify and fix these errors, ensuring structured data is properly formatted.
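Before reaching for an online validator, a quick local check can catch the most common mistakes: malformed JSON, a missing `@type`, or empty required fields. The Python sketch below illustrates the idea. The `REQUIRED_FIELDS` table is an assumption made for this example and is not an official list, so consult the schema.org definitions and the search engine’s own documentation for the properties each rich result actually requires.

```python
import json

# Illustrative assumption: a small subset of fields to check per type.
REQUIRED_FIELDS = {
    "Product": ["name", "offers"],
    "Event": ["name", "startDate", "location"],
    "Recipe": ["name", "recipeIngredient", "recipeInstructions"],
}


def check_json_ld(raw):
    """Return a list of problems found in a JSON-LD string (empty if none)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        return [f"invalid JSON: {err.msg}"]
    if not isinstance(data, dict):
        return ["expected a single JSON object"]

    problems = []
    if "schema.org" not in str(data.get("@context", "")):
        problems.append("missing or unexpected @context")

    schema_type = data.get("@type")
    if schema_type not in REQUIRED_FIELDS:
        problems.append(f"unknown or missing @type: {schema_type!r}")
        return problems

    for field in REQUIRED_FIELDS[schema_type]:
        if not data.get(field):  # absent, empty string, or empty list
            problems.append(f"missing field: {field}")
    return problems


if __name__ == "__main__":
    snippet = '{"@context": "https://schema.org", "@type": "Event", "name": "Example Event"}'
    for problem in check_json_ld(snippet):
        print(problem)
```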

Different Structured Data Types

Various structured data types cater to different content types. Each type defines a specific vocabulary for describing particular information, ensuring search engines understand the data. Understanding these different types allows webmasters to choose the appropriate markup for their content.

| Structured Data Type | Explanation | Example Markup (JSON-LD) | Use Cases |
|---|---|---|---|
| Product | Describes product details like name, price, availability, and features. | `{"@context": "https://schema.org", "@type": "Product", "name": "Example Product", "offers": {"@type": "Offer", "price": "99.99", "priceCurrency": "USD", "availability": "https://schema.org/InStock"}}` | E-commerce websites, product listings |
| Event | Describes events like dates, times, locations, and descriptions. | `{"@context": "https://schema.org", "@type": "Event", "name": "Example Event", "startDate": "2024-10-27", "location": {"@type": "Place", "name": "Example Venue"}}` | Event listings, calendars, ticketing websites |
| Recipe | Describes recipes with ingredients, instructions, and preparation time. | `{"@context": "https://schema.org", "@type": "Recipe", "name": "Example Recipe", "recipeIngredient": ["Ingredient 1", "Ingredient 2"], "recipeInstructions": ["Instruction 1", "Instruction 2"]}` | Food blogs, recipe websites |

HTTPS Issues

Ensuring your website is secure is paramount in today’s digital landscape. A critical aspect of this security is HTTPS. This protocol, which layers encryption on top of standard HTTP, protects communication between your website and users’ browsers, including sensitive data like login credentials and personal information. Beyond security, HTTPS is a confirmed, if lightweight, Google ranking signal, so it also affects your website’s visibility and organic traffic.

HTTPS is more than a security protocol; it’s a fundamental element of a trustworthy online presence. Search engines, recognizing the importance of user safety, favor websites that use it. Conversely, websites without HTTPS are flagged as insecure by browsers and lose user trust, which can severely reduce their visibility and potential revenue. This section covers the significance of HTTPS for both security and SEO, outlining how to implement it and troubleshoot potential issues.

Importance of HTTPS for Security and SEO

HTTPS is crucial for safeguarding user data transmitted to and from your website. Encryption prevents unauthorized access and manipulation of sensitive information. This enhanced security directly fosters user trust, a key element in driving engagement and conversion. Search engines like Google prioritize secure websites, recognizing that they contribute to a safer online environment for users.

Impact of HTTP Issues on Search Rankings and User Trust

Websites without HTTPS can see a negative impact on search rankings, since search engines treat security as a ranking signal. A lack of HTTPS also erodes user trust: modern browsers label such pages “Not secure,” which can reduce traffic and conversion rates. Users are increasingly aware of security risks and are more likely to avoid websites without HTTPS.

Methods to Ensure Your Website is Using HTTPS

Implementing HTTPS involves obtaining a valid SSL/TLS certificate, a digital document that verifies the identity of your website and enables an encrypted connection. (TLS is the modern successor to SSL, the Secure Sockets Layer, though the older name is still widely used.) The certificate is issued by a trusted Certificate Authority (CA). Most web hosting providers offer services to manage certificates, simplifying the process.

Troubleshooting HTTPS Implementation Issues

Troubleshooting HTTPS issues often involves verifying the SSL certificate’s validity and checking for any configuration errors. Common issues include incorrect certificate installation, misconfigured server settings, or problems with the DNS record. Detailed logs from your web server and browser developer tools can provide insights into specific problems.
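An expired certificate is one of the easier problems to check for programmatically. The following Python sketch, offered as one possible approach, reads a server’s certificate with the standard `ssl` module and computes the days remaining until it expires. The helper names and the example hostname are assumptions. `fetch_not_after` needs network access, while `days_until_expiry` works on any expiry string in the format the `ssl` module returns.

```python
import socket
import ssl
from datetime import datetime, timezone


def fetch_not_after(hostname, port=443, timeout=10):
    """Connect to the host over TLS and return its certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """Return whole days until the certificate expires (negative if already expired).

    not_after uses the format returned by getpeercert(),
    e.g. 'Jan  5 09:34:43 2018 GMT'.
    """
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return (expires - now).days


if __name__ == "__main__":
    # Hypothetical hostname; replace with your own domain.
    remaining = days_until_expiry(fetch_not_after("example.com"))
    print(f"Certificate expires in {remaining} days")
```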

Example of a Detailed Process for Migrating from HTTP to HTTPS

Migrating from HTTP to HTTPS involves several crucial steps:

- Obtain an SSL certificate: Contact your hosting provider or a Certificate Authority to acquire a suitable SSL certificate. Consider factors like the type of certificate (e.g., DV, OV, EV) and the needs of your website.

- Configure your web server: Modify your web server configuration (e.g., Apache, Nginx) to correctly handle HTTPS requests. This includes setting up virtual hosts, enabling SSL, and configuring the SSL certificate.

- Update all links: Carefully update all internal and external links on your website to use HTTPS. Failing to do so can lead to broken links and a negative user experience.

- Redirect HTTP traffic to HTTPS: Implement a redirect mechanism to automatically forward all HTTP requests to their HTTPS counterparts. This ensures that users are always directed to the secure version of your website.

- Test the HTTPS implementation: Thoroughly test the HTTPS implementation to ensure that all pages load correctly, links function properly, and that no errors are encountered.

By following these steps and thoroughly testing the process, you can effectively migrate your website from HTTP to HTTPS, enhancing security and improving your SEO performance.
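A common problem after the link-update step is mixed content: an HTTPS page that still loads images, scripts, or stylesheets over plain HTTP, which browsers may block or flag. As a rough sketch of how to look for it, the Python code below scans a page’s HTML for resource tags whose URL starts with `http://`. The `RESOURCE_ATTRS` mapping covers only a few common tags and is an assumption for this example. Ordinary `<a>` links are deliberately ignored, because navigating to an HTTP page is not mixed content.

```python
from html.parser import HTMLParser

# Tags that load subresources, mapped to the attribute holding the URL.
# Illustrative subset only; real pages may load resources in other ways (e.g. CSS).
RESOURCE_ATTRS = {
    "img": "src",
    "script": "src",
    "iframe": "src",
    "source": "src",
    "link": "href",
}


class MixedContentFinder(HTMLParser):
    """Collect subresources that are still requested over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []  # list of (tag, url) tuples

    def handle_starttag(self, tag, attrs):
        attr = RESOURCE_ATTRS.get(tag)
        if attr is None:
            return
        value = dict(attrs).get(attr) or ""
        if value.lower().startswith("http://"):
            self.insecure.append((tag, value))


def find_mixed_content(html):
    """Return (tag, url) pairs for resources loaded over http:// in the given HTML."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


if __name__ == "__main__":
    # Hypothetical page fragment for demonstration only.
    page = (
        '<img src="http://example.com/logo.png">'
        '<script src="https://example.com/app.js"></script>'
    )
    for tag, url in find_mixed_content(page):
        print(f"<{tag}> still loads {url}")
```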

Outcome Summary

In conclusion, mastering technical SEO is crucial for website success. By addressing these key issues, you can significantly improve your website’s performance in search results and enhance the user experience. From optimizing images and ensuring mobile-friendliness to fixing broken links and implementing structured data, the steps outlined in this post provide a solid foundation for building a high-performing and user-friendly website.

Stay tuned for more insights!